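This topic gives you a quick overview of the iOS remote dual-recording features and how to call them.

Remote dual recording uses both the AI atomic capabilities of MPIDRSSDK and the audio and video call component (Mobile Real-Time Communication, MRTC). During a dual-recording session, MPIDRSSDK obtains audio and video data from MRTC for intelligent detection, and MRTC pushes the audio synthesized by MPIDRSSDK into the audio and video call room.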

iOS integration

Create a project

Create a new project in Xcode.

Environment configuration

SDK dependencies

MPIDRSSDK is a dynamic library and supports iOS 9.0 and later.

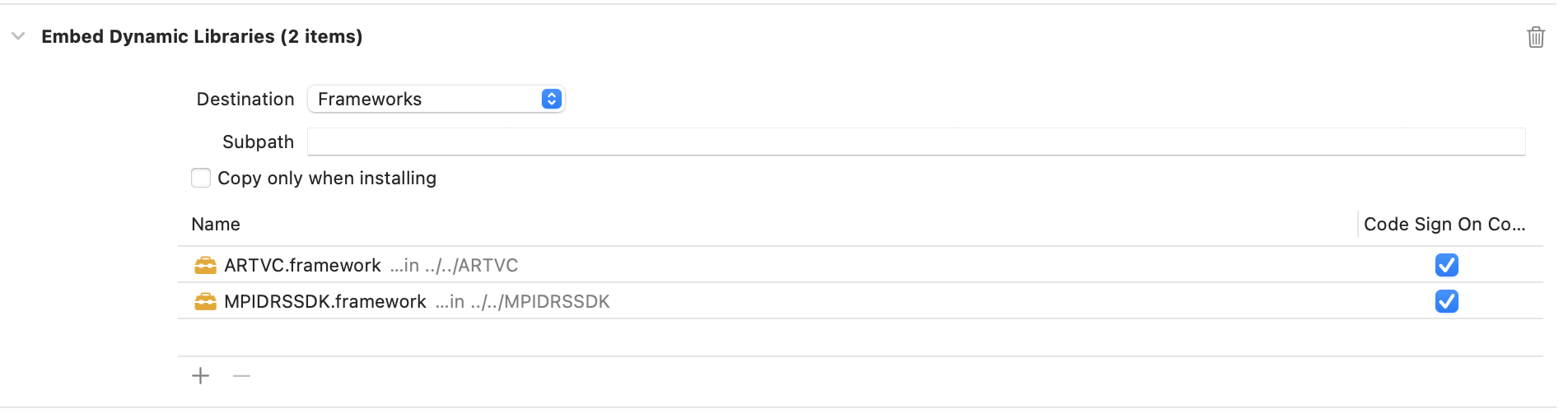

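Go to Targets > Build Phases and add the MPIDRSSDK and ARTVC dependency libraries, as shown in the figure.

Parameter configuration

Go to Targets > Build Phases and add the MPIDRSSDK and ARTVC dynamic libraries, as shown in the following figure.

Add the MPIDRSSDK.bundle resource, as shown in the following figure.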

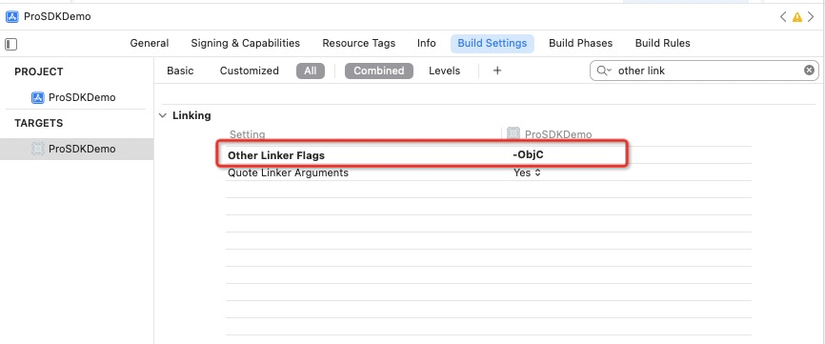

Set Other Linker Flags to -ObjC, as shown in the following figure.

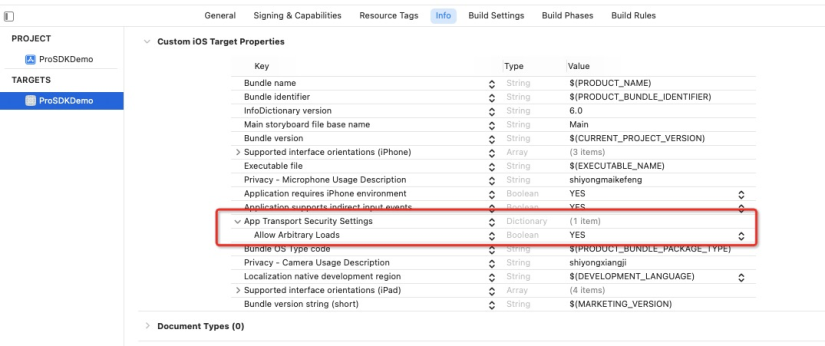

Allow HTTP requests, as shown in the following figure.

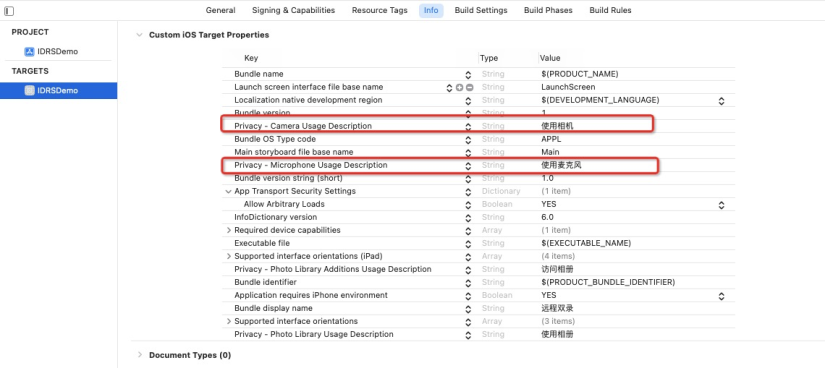

Enable the camera and microphone permissions.

Usage examples

Initialize MPIDRSSDK

    NSString *userId = [[DemoSetting sharedInstance] userId];
    IDRSModelType type = [DemoSetting getSettingConfig];
    // IDRSRecordRemote: remote dual-recording mode
    [MPIDRSSDK initWithRecordType:IDRSRecordRemote
                           userId:userId
                            appId:AppId
                      packageName:PackageName
                               AK:Ak
                               SK:Sk
                            model:type
                      addDelegate:self
                          success:^(id responseObject) {
        self.idrs = responseObject;
        self.idrs.nui_tts_delegate = self;
    } failure:^(NSError *error) {
        [self showToastWith:@"sdk init error" duration:2];
        NSLog(@"%@", error);
    }];

Voice broadcast

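    // The delegate delivers the synthesized speech data, the playback status, and the
    // speech recognition callbacks. For details, see IDRSNUITTSDelegate below.
    self.idrsSDK.nui_tts_delegate = self;
    // Text to synthesize (Mandarin)
    NSString *ttsString = @"智能雙錄質檢是螞蟻集團移動開發平臺團隊與阿里巴巴達摩院共同研制的一款智能化的音視頻內容錄制、檢測及審核產品";
    // Set the speech rate
    [self.idrsSDK setTTSParam:@"speed_level" value:@"1.5"];
    // Start playback
    [self.idrsSDK startTTSWithText:ttsString];
    // Stop TTS synthesis
    [self.idrsSDK stopTTS];
    // Pause the current playback (the opposite of resume)
    [self.idrsSDK pauseTTS];
    // Resume the current playback (the opposite of pause)
    [self.idrsSDK resumeTTS];

IDRSNUITTSDelegate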

    /// Called when the player finishes playback.
    - (void)onPlayerDidFinish;

    /// TTS synthesis status.
    /// @param event Status
    /// @param taskid Task ID
    /// @param code Code
    - (void)onNuiTtsEventCallback:(ISDRTtsEvent)event taskId:(NSString *)taskid code:(int)code;

    /// TTS synthesis data.
    /// @param info Text information
    /// @param info_len Index
    /// @param buffer Audio data stream
    /// @param len Length of the data stream
    /// @param task_id Task ID
    - (void)onNuiTtsUserdataCallback:(NSString *)info infoLen:(int)info_len buffer:(char*)buffer len:(int)len taskId:(NSString *)task_id;

    /// Wake-word recognition result.
    /// @param result Result of the wake-word recognition
    - (void)onNuiKwsResultCallback:(NSString *)result;

Speech recognition

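For the wake words that can be recognized, see MPIDRSSDK.bundle/mandarin/kws/keywords.

    // Start wake-word detection
    [self.idrsSDK startDialog];
    // Stop wake-word detection
    [self.idrsSDK stopDialog];

    // To detect audio that was not captured by the local microphone, use:
    /**
     Feeds external audio data for wake-word detection.
     @param voiceFrame Audio data (required format: PCM, mono, 16 kHz sample rate)
     */
    - (void)feedAudioFrame:(NSData*)voiceFrame;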
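feedAudioFrame: expects mono PCM, so audio captured in stereo has to be reduced to one channel first. The following is a minimal sketch of such a downmix in plain C, which compiles inside an Objective-C source file. It assumes interleaved 16-bit samples; the function name downmix_stereo_to_mono is hypothetical and not part of MPIDRSSDK.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Downmix interleaved 16-bit stereo PCM (L0 R0 L1 R1 ...) to mono by
 * averaging each left/right pair. `frames` is the number of stereo frames
 * and `mono` must have room for `frames` samples.
 * Returns the number of mono samples written.
 * Hypothetical helper, not an MPIDRSSDK API. */
static size_t downmix_stereo_to_mono(const int16_t *stereo, size_t frames,
                                     int16_t *mono)
{
    assert(stereo != NULL && mono != NULL);
    for (size_t i = 0; i < frames; i++) {
        /* Sum in 32 bits so the addition cannot overflow int16_t. */
        int32_t sum = (int32_t)stereo[2 * i] + (int32_t)stereo[2 * i + 1];
        mono[i] = (int16_t)(sum / 2);
    }
    return frames;
}
```

The mono samples can then be wrapped with [NSData dataWithBytes:length:] and passed to feedAudioFrame:. The data must still be at a 16 kHz sample rate.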

Face detection

Detect facial features.

    // IDRSFaceDetectInputTypePixelBuffer is used as an example here. For the other supported types, see IDRSFaceDetectParam.
    CVImageBufferRef pixelBuffer = CMSampleBufferGetImageBuffer(sampleBuffer);
    IDRSFaceDetectParam *detectParam = [[IDRSFaceDetectParam alloc] init];
    detectParam.dataType = IDRSFaceDetectInputTypePixelBuffer;
    detectParam.buffer = pixelBuffer;
    detectParam.inputAngle = inAngle;
    detectParam.outputAngle = outAngle;
    // Output the facial feature results
    NSArray<FaceDetectionOutput *> *detectedFace = [_idrsSDK detectFace:detectParam];

Compare faces.

    // Similarity between two facial feature values
    float similarity = [self.idrsSDK faceRecognitionSimilarity:detectedFace1.feature feature2:detectedFace2.face1Feature];

Track faces.

    // IDRSFaceDetectInputTypePixelBuffer is used as an example here. For the other supported types, see IDRSFaceDetectParam.
    CVImageBufferRef pixelBuffer = CMSampleBufferGetImageBuffer(sampleBuffer);
    IDRSFaceDetectParam *detectParam = [[IDRSFaceDetectParam alloc] init];
    detectParam.dataType = IDRSFaceDetectInputTypePixelBuffer;
    detectParam.buffer = pixelBuffer;
    detectParam.inputAngle = inAngle;
    detectParam.outputAngle = outAngle;
    detectParam.supportFaceLiveness = YES;
    // The callback delivers facial feature information, including the face region.
    [self.idrsSDK faceTrackFromVideo:detectParam faceDetectionCallback:^(NSError *error, NSArray<FaceDetectionOutput*> *faces) {
    }];

Gesture detection

Dynamic gesture detection, for example signing while holding a phone.

    CVImageBufferRef pixelBuffer = CMSampleBufferGetImageBuffer(sampleBuffer);
    IDRSHandDetectParam *handParam = [[IDRSHandDetectParam alloc] init];
    handParam.dataType = IDRSHandInputTypeBGRA;
    handParam.buffer = pixelBuffer;
    handParam.outAngle = 0;
    NSArray<HandDetectionOutput *> *handResults = [self.idrsSDK detectHandGesture:handParam];

Static gesture detection.

    CVImageBufferRef pixelBuffer = CMSampleBufferGetImageBuffer(sampleBuffer);
    IDRSHandDetectParam *handParam = [[IDRSHandDetectParam alloc] init];
    handParam.dataType = IDRSHandInputTypeBGRA;
    handParam.buffer = pixelBuffer;
    handParam.outAngle = 0;
    NSArray<HandDetectionOutput *> *handResults = [self.idrsSDK detectHandStaticGesture:handParam];

Signature type detection

    UIImage *image = [self.idrs getImageFromRPVideo:newBuffer];
    NSArray<NSNumber*> *kXMediaOptionsROIKey = @[@(0.2),@(0.2),@(0.6),@(0.6)];
    // Returns the signature confidence results
    NSArray<IDRSSignConfidenceCheck *> *signs = [self.idrs checkSignClassifyWithImage:image AndROI:kXMediaOptionsROIKey];

ID card detection

    // Only the imageBuffer usage is shown here. For the other input types and parameters, see IDRSIDCardDetectParam.
    CVImageBufferRef pixelBuffer = CMSampleBufferGetImageBuffer(sampleBuffer);
    IDRSIDCardDetectParam *detectParam = [[IDRSIDCardDetectParam alloc] init];
    detectParam.buffer = pixelBuffer;
    detectParam.dataType = IDRSIDCardInputTypePixelBuffer;
    NSArray<NSNumber*> *kXMediaOptionsROIKey = @[@(0.2),@(0.2),@(0.6),@(0.6)];
    // ID card information
    IDCardDetectionOutput *ocrOutPut = [_idrsSDK detectIDCard:detectParam roiKey:kXMediaOptionsROIKey rotate:angleDic[@"outAngle"] isFrontCamera:NO isDetectFrontIDCard:isDetectFront];

Release

    /// Releases resources.
    - (void)releaseResources;

Common functions

    /// Gets the SDK version number.
    + (NSString*)getVersion;

    /// Saves the sampleBuffer obtained from the camera to the photo album.
    /// @param sampleBuffer CMSampleBufferRef
    - (void)saveSampleBufferFromCamera: (CMSampleBufferRef)sampleBuffer;

    /// Converts an RTC video frame to an image.
    /// @param imageBuffer CVImageBufferRef (CVPixelBufferRef)
    - (UIImage *) getImageFromRPVideo: (CVImageBufferRef)imageBuffer;

Use MRTC

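Only the MRTC interfaces that may be used in remote dual recording are listed below. For more information about MRTC interfaces, see iOS advanced features.

Initialize an MRTC instance

MPIDRSSDK can be used to initialize an MRTC instance. After you obtain the MRTC instance, you can configure the audio and video call logic. The following is the configuration used in the Demo.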

    [MPIDRSSDK initRTCWithUserId:self.uid appId:AppId success:^(id _Nonnull responseObject) {
        self.artvcEgnine = responseObject;
        // Delegate for the audio and video call status callbacks
        self.artvcEgnine.delegate = self;
        // Set the video encoding resolution. The default is ARTVCVideoProfileType_640x360_15Fps.
        self.artvcEgnine.videoProfileType = ARTVCVideoProfileType_1280x720_30Fps;
        // Publish configuration for the audio and video call
        ARTVCPublishConfig *publishConfig = [[ARTVCPublishConfig alloc] init];
        publishConfig.videoProfile = self.artvcEgnine.videoProfileType;
        publishConfig.audioEnable = YES;
        publishConfig.videoEnable = YES;
        self.artvcEgnine.autoPublishConfig = publishConfig;
        // Publish automatically
        self.artvcEgnine.autoPublish = YES;
        // Subscribe configuration for the audio and video call
        ARTVCSubscribeOptions *subscribeOptions = [[ARTVCSubscribeOptions alloc] init];
        subscribeOptions.receiveAudio = YES;
        subscribeOptions.receiveVideo = YES;
        self.artvcEgnine.autoSubscribeOptions = subscribeOptions;
        // Subscribe (pull streams) automatically
        self.artvcEgnine.autoSubscribe = YES;
        // Set to YES to receive local audio data callbacks
        self.artvcEgnine.enableAudioBufferOutput = YES;
        // Set to YES to receive local video data callbacks
        self.artvcEgnine.enableCameraRawSampleOutput = YES;
        // Audio playback mode
        self.artvcEgnine.expectedAudioPlayMode = ARTVCAudioPlayModeSpeaker;
        // When bandwidth is insufficient, prioritize either resolution (the frame rate drops)
        // or smoothness (the resolution drops)
        /*
         ARTVCDegradationPreferenceMAINTAIN_FRAMERATE,   // prioritize smoothness
         ARTVCDegradationPreferenceMAINTAIN_RESOLUTION,  // prioritize resolution
         ARTVCDegradationPreferenceBALANCED,             // balance automatically
         */
        self.artvcEgnine.degradationPreference = ARTVCDegradationPreferenceMAINTAIN_FRAMERATE;
        // Start the camera preview. The front camera is used by default; pass YES to use the rear camera.
        [self.artvcEgnine startCameraPreviewUsingBackCamera:NO];
        // Create a room if none exists
        [IDRSSDK createRoom];
        // Or join an existing room
        // [IDRSSDK joinRoom:self.roomId token:self.rtoken];
    } failure:^(NSError * _Nonnull error) {
    }];

MRTC delegate callbacks

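Only some commonly used callback APIs are listed below. For more API information, see ARTVCEngineDelegate.

After the camera preview starts, if the local feed has not been reported yet, this callback returns an ARTVCFeed object that can be used to associate the render view returned later.

    - (void)didReceiveLocalFeed:(ARTVCFeed*)localFeed {
        switch (localFeed.feedType) {
            case ARTVCFeedTypeLocalFeedDefault:
                self.localFeed = localFeed;
                break;
            case ARTVCFeedTypeLocalFeedCustomVideo:
                self.customLocalFeed = localFeed;
                break;
            case ARTVCFeedTypeLocalFeedScreenCapture:
                self.screenLocalFeed = localFeed;
                break;
            default:
                break;
        }
    }

Callback for the renderView associated with a local or remote feed.

Important: This callback does not mean that the renderView has rendered its first frame.

    - (void)didVideoRenderViewInitialized:(UIView*)renderView forFeed:(ARTVCFeed*)feed {
        // You can trigger UI layout here and add renderView to the view hierarchy
        [self.viewLock lock];
        [self.contentView addSubview:renderView];
        [self.viewLock unlock];
    }

Callback for the first rendered frame of a local or remote feed.

    // The first video frame has been rendered
    - (void)didFirstVideoFrameRendered:(UIView*)renderView forFeed:(ARTVCFeed*)feed {
    }

Callback when a feed stops rendering.

    - (void)didVideoViewRenderStopped:(UIView*)renderView forFeed:(ARTVCFeed*)feed {
    }

When a room is created successfully, the room information is returned in this callback.

    -(void)didReceiveRoomInfo:(ARTVCRoomInfomation*)roomInfo {
        // Get the room ID and token
        // roomInfo.roomId
        // roomInfo.rtoken
    }

When room creation fails, the error is returned in this callback.

    -(void)didEncounterError:(NSError *)error forFeed:(ARTVCFeed*)feed {
        // error.code == ARTVCErrorCodeProtocolErrorCreateRoomFailed
    }

When joining a room succeeds, you receive the join-success callback and a callback that lists the members already in the room.

    -(void)didJoinroomSuccess {
    }
    -(void)didParticepantsEntered:(NSArray<ARTVCParticipantInfo*>*)participants {
    }

Callback for errors that occur during the audio and video call, such as failing to join a room or failing to publish a stream.

    -(void)didEncounterError:(NSError *)error forFeed:(ARTVCFeed*)feed {
        // error.code == ARTVCErrorCodeProtocolErrorJoinRoomFailed
    }

After a member leaves the room, the other members in the room receive the member-left callback.

    -(void)didParticepant:(ARTVCParticipantInfo*)participant leaveRoomWithReason:(ARTVCParticipantLeaveRoomReasonType)reason {
    }

Publishing and subscribing start after a room is created or joined successfully. During publishing and subscribing, the following status callback is invoked.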

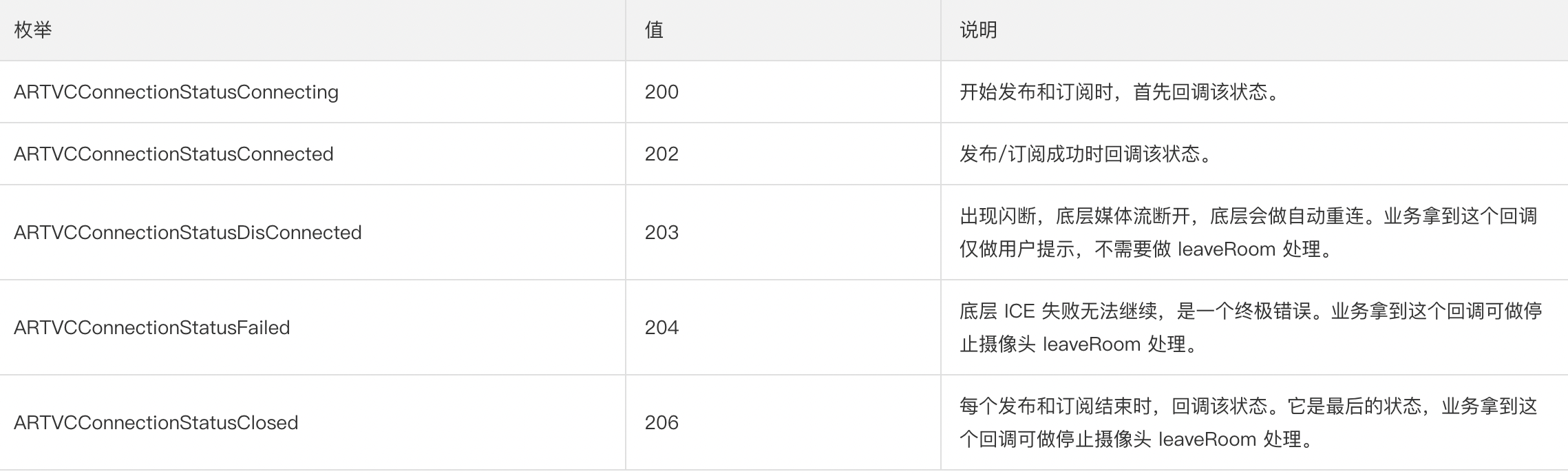

    -(void)didConnectionStatusChangedTo:(ARTVCConnectionStatus)status forFeed:(ARTVCFeed*)feed {
        [self showToastWith:[NSString stringWithFormat:@"connection status:%d\nfeed:%@",status,feed] duration:1.0];
        if ((status == ARTVCConnectionStatusClosed) && [feed.uid isEqualToString:[self uid]]) {
            [self.artvcEgnine stopCameraPreview]; // Stop the camera preview during the audio and video call.
            [self.artvcEgnine leaveRoom];
        }
    }

Status description

After a stream is published successfully, the other room members receive the new-feed callback.

    -(void)didNewFeedAdded:(ARTVCFeed*)feed {
    }

After a stream is unpublished, the other room members receive the feed-removed callback.

    -(void)didFeedRemoved:(ARTVCFeed*)feed {
    }

Local audio data callback.

    - (void)didOutputAudioBuffer:(ARTVCAudioData*)audioData {
        if (audioData.audioBufferList->mBuffers[0].mData != NULL && audioData.audioBufferList->mBuffers[0].mDataByteSize > 0) {
            pcm_frame_t pcmModelInput;
            pcmModelInput.len = audioData.audioBufferList->mBuffers[0].mDataByteSize;
            pcmModelInput.buf = (uint8_t*)audioData.audioBufferList->mBuffers[0].mData;
            pcmModelInput.sample_rate = audioData.sampleRate;
            pcm_frame_t pcmModelOutput;
            pcm_resample_16k(&pcmModelInput, &pcmModelOutput);
            NSData *srcData = [NSData dataWithBytes:pcmModelOutput.buf length:pcmModelOutput.len];
            // Feed the audio data for detection
            [self.idrs feedAudioFrame:srcData];
        }
    }

Remote audio data callback.

This callback can be used to detect remote speech. The following sample detects wake words in the remote audio.

    - (void)didOutputRemoteMixedAudioBuffer:(ARTVCAudioData *)audioData {
        if (audioData.audioBufferList->mBuffers[0].mData != NULL && audioData.audioBufferList->mBuffers[0].mDataByteSize > 0) {
            pcm_frame_t pcmModelInput;
            pcmModelInput.len = audioData.audioBufferList->mBuffers[0].mDataByteSize;
            pcmModelInput.buf = (uint8_t*)audioData.audioBufferList->mBuffers[0].mData;
            pcmModelInput.sample_rate = audioData.sampleRate;
            pcm_frame_t pcmModelOutput;
            pcm_resample_16k(&pcmModelInput, &pcmModelOutput);
            NSData *srcData = [NSData dataWithBytes:pcmModelOutput.buf length:pcmModelOutput.len];
            // Feed the audio data for detection
            [self.idrs feedAudioFrame:srcData];
        }
    }

Local camera data callback.

This callback can be used to detect faces, gestures, signature types, ID cards, and so on. The following sample detects facial features.

    // Create this serial queue once (for example, during initialization) and reuse it.
    dispatch_queue_t testqueue = dispatch_queue_create("testQueue", NULL);

    - (void)didOutputSampleBuffer:(CMSampleBufferRef)sampleBuffer {
        dispatch_sync(testqueue, ^{
            @autoreleasepool {
                CVPixelBufferRef newBuffer = CMSampleBufferGetImageBuffer(sampleBuffer);
                UIImage * image = [self.idrs getImageFromRPVideo:newBuffer];
                IDRSFaceDetectParam *detectParam = [[IDRSFaceDetectParam alloc] init];
                detectParam.dataType = IDRSFaceDetectInputTypePixelBuffer;
                detectParam.buffer = newBuffer;
                detectParam.inputAngle = 0;
                detectParam.outputAngle = 0;
                detectParam.faceNetType = 0;
                detectParam.supportFaceRecognition = false;
                detectParam.supportFaceLiveness = false;
                // Face tracking
                [self.idrs faceTrackFromVideo:detectParam faceDetectionCallback:^(NSError *error, NSArray<FaceDetectionOutput *> *faces) {
                    dispatch_async(dispatch_get_main_queue(), ^{
                        self.drawView.faceDetectView.detectResult = faces;
                    });
                }];
            }
        });
    }

When a custom audio stream is being published, MRTC invokes the following callback automatically. Send the audio data inside this method.

    - (void)didCustomAudioDataNeeded {
        //[self.artvcEgnine sendCustomAudioData:data];
    }
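The audio callbacks above convert the captured audio to 16 kHz with pcm_resample_16k before calling feedAudioFrame:. This topic does not show how pcm_resample_16k works, so the following is only a sketch of the general idea: a linear-interpolation resampler for mono 16-bit PCM. The function name resample_to_16k and its signature are hypothetical and are not the SDK's implementation.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define TARGET_RATE_HZ 16000u

/* Resample mono 16-bit PCM from `src_rate` Hz to 16 kHz with linear
 * interpolation. Writes at most `dst_cap` samples to `dst` and returns the
 * number of samples written. Hypothetical stand-in, not pcm_resample_16k. */
static size_t resample_to_16k(const int16_t *src, size_t src_len,
                              uint32_t src_rate,
                              int16_t *dst, size_t dst_cap)
{
    assert(src != NULL && dst != NULL && src_rate > 0);
    if (src_len == 0) {
        return 0;
    }
    /* Number of output samples that cover the same duration as the input. */
    size_t out_len = (size_t)(((uint64_t)src_len * TARGET_RATE_HZ) / src_rate);
    if (out_len > dst_cap) {
        out_len = dst_cap;
    }
    for (size_t i = 0; i < out_len; i++) {
        /* Position of output sample i on the input timeline, kept as an
         * integer index plus a fraction in units of 1/TARGET_RATE_HZ. */
        uint64_t pos = (uint64_t)i * src_rate;
        size_t idx = (size_t)(pos / TARGET_RATE_HZ);
        uint32_t frac = (uint32_t)(pos % TARGET_RATE_HZ);
        int32_t s0 = src[idx];
        /* Repeat the last sample when there is no right-hand neighbour. */
        int32_t s1 = (idx + 1 < src_len) ? src[idx + 1] : s0;
        dst[i] = (int16_t)(s0 + ((int64_t)(s1 - s0) * frac) / TARGET_RATE_HZ);
    }
    return out_len;
}
```

Plain interpolation does not low-pass filter the signal, so downsampling from a higher rate such as 48 kHz can introduce aliasing. A production resampler would normally filter before decimating.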

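In-app screen sharing

The following sample shows the in-app screen sharing that is built into MRTC. To share the screen across apps, see iOS cross-app screen sharing.

Start screen sharing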

    // weakSelf avoids a retain cycle between self and the completion block.
    __weak typeof(self) weakSelf = self;
    ARTVCCreateScreenCaputurerParams* screenParams = [[ARTVCCreateScreenCaputurerParams alloc] init];
    screenParams.provideRenderView = YES;
    [self.artvcEgnine startScreenCaptureWithParams:screenParams complete:^(NSError* error){
        if (error) {
            [weakSelf showToastWith:[NSString stringWithFormat:@"Error:%@",error] duration:1.0];
        } else {
            ARTVCPublishConfig* config = [[ARTVCPublishConfig alloc] init];
            config.videoSource = ARTVCVideoSourceType_Screen;
            config.audioEnable = NO;
            config.videoProfile = ARTVCVideoProfileType_ScreenRatio_1280_15Fps;
            config.tag = @"MPIDRS_ShareScreen";
            [weakSelf.artvcEgnine publish:config];
        }
    }];

Stop screen sharing

    - (void)stopScreenSharing {
        [self.artvcEgnine stopScreenCapture];
        ARTVCUnpublishConfig* config = [[ARTVCUnpublishConfig alloc] init];
        config.feed = self.screenLocalFeed;
        [self.artvcEgnine unpublish:config complete:^(){
        }];
    }

Push an audio stream into the room

Custom stream publishing (audio data only).

    // Publish a custom MRTC stream that carries the TTS audio
    - (void)startCustomAudioCapture {
        ARTVCCreateCustomVideoCaputurerParams* params = [[ARTVCCreateCustomVideoCaputurerParams alloc] init];
        params.audioSourceType = ARTVCAudioSourceType_Custom;
        params.customAudioFrameFormat.sampleRate = 16000;
        params.customAudioFrameFormat.samplesPerChannel = 160;
        ARTVCPublishConfig* audioConfig = [[ARTVCPublishConfig alloc] init];
        audioConfig.videoSource = ARTVCVideoSourceType_Custom;
        audioConfig.videoEnable = YES;
        audioConfig.audioSource = ARTVCAudioSourceType_Custom;
        audioConfig.tag = @"customAuidoFeed";
        self.audioConfig = audioConfig;
        self.customAudioCapturer = [_artvcEgnine createCustomVideoCapturer:params];
        _artvcEgnine.autoPublish = NO;
        [_artvcEgnine publish:self.audioConfig];
    }

We recommend that you start TTS synthesis and playback after the custom stream has been published successfully.

    - (void)didConnectionStatusChangedTo:(ARTVCConnectionStatus)status forFeed:(ARTVCFeed*)feed {
        if (status == ARTVCConnectionStatusConnected && [feed isEqual:self.customLocalFeed]) {
            NSString * string = @"盛先生您好,被保險人于本附加合同生效(或最后復效)之日起一百八十日內";
            self.customAudioData = [[NSMutableData alloc] init];
            self.customAudioDataIndex = 0;
            self.ttsPlaying = YES;
            [self.idrs setTTSParam:@"extend_font_name" value:@"xiaoyun"];
            [self.idrs setTTSParam:@"speed_level" value:@"1"];
            [self.idrs startTTSWithText:string];
            [self.idrs getTTSParam:@"speed_level"];
        }
    }

Get the synthesis progress in the TTS delegate callback.

    - (void)onNuiTtsUserdataCallback:(NSString *)info infoLen:(int)info_len buffer:(char *)buffer len:(int)len taskId:(NSString *)task_id {
        NSLog(@"remote :: onNuiTtsUserdataCallback:%@ -- %d",info,info_len);
    }

Send the audio data in the MRTC delegate callback.

    - (void)didCustomAudioDataNeeded {
        NSData * data = [[IDRSBufferTool sharedInstance] bufferTool_read:320];
        if (data) {
            [self.artvcEgnine sendCustomAudioData:data];
        }
    }
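The custom audio format above uses a sample rate of 16000 and 160 samples per channel, and the callback reads 320 bytes at a time. Assuming 16-bit mono PCM (the sample width is not stated in this topic), 160 samples × 2 bytes is exactly 320 bytes, which is one 10 ms frame. The following sketch shows that arithmetic and a simple byte FIFO that buffers synthesized audio and hands it out one whole frame at a time. It only illustrates the idea behind a tool such as IDRSBufferTool; the names pcm_fifo, fifo_write, fifo_read_exact, and the capacity are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define FIFO_CAPACITY 4096u /* hypothetical size, chosen for illustration */

typedef struct {
    uint8_t data[FIFO_CAPACITY];
    size_t head;  /* index of the next byte to read */
    size_t count; /* number of bytes currently stored */
} pcm_fifo;

/* Bytes in one frame: samples per channel x channels x bytes per sample. */
static size_t frame_bytes(size_t samples_per_channel, size_t channels,
                          size_t bytes_per_sample)
{
    return samples_per_channel * channels * bytes_per_sample;
}

static void fifo_init(pcm_fifo *f)
{
    assert(f != NULL);
    f->head = 0;
    f->count = 0;
}

/* Append up to `len` bytes. Returns the number of bytes actually stored,
 * which is smaller than `len` when the FIFO runs out of space. */
static size_t fifo_write(pcm_fifo *f, const uint8_t *src, size_t len)
{
    assert(f != NULL && src != NULL);
    size_t space = FIFO_CAPACITY - f->count;
    if (len > space) {
        len = space;
    }
    size_t tail = (f->head + f->count) % FIFO_CAPACITY;
    size_t first = FIFO_CAPACITY - tail; /* room before the wrap point */
    if (first > len) {
        first = len;
    }
    memcpy(f->data + tail, src, first);
    memcpy(f->data, src + first, len - first); /* wrapped remainder */
    f->count += len;
    return len;
}

/* Read exactly `len` bytes into `dst`. Returns 1 on success, or 0 (and
 * consumes nothing) when fewer than `len` bytes are buffered. */
static int fifo_read_exact(pcm_fifo *f, uint8_t *dst, size_t len)
{
    assert(f != NULL && dst != NULL);
    if (len > f->count) {
        return 0;
    }
    size_t first = FIFO_CAPACITY - f->head;
    if (first > len) {
        first = len;
    }
    memcpy(dst, f->data + f->head, first);
    memcpy(dst + first, f->data, len - first);
    f->head = (f->head + len) % FIFO_CAPACITY;
    f->count -= len;
    return 1;
}
```

In this sketch, the TTS data callback would call fifo_write with each synthesized buffer, and didCustomAudioDataNeeded would call fifo_read_exact with frame_bytes(160, 1, 2) and send the frame only when the read succeeds. The two callbacks may run on different threads, so a real implementation would also need a lock around the FIFO.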

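Enable server-side recording

The recording features take effect only after MPIDRSSDK and the MRTC instance have been initialized successfully.

Start remote recording.

Each time a server-side recording task is started, the recording callback returns a record ID. The record ID can be used to stop or modify the corresponding recording task.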

    MPRemoteRecordInfo *recordInfo = [[MPRemoteRecordInfo alloc] init];
    recordInfo.roomId = self.roomId;
    // Watermark ID from the console
    //recordInfo.waterMarkId = self.watermarkId;
    recordInfo.tagFilter = tagPrefix;
    recordInfo.userTag = self.uid;
    recordInfo.recordType = MPRemoteRecordTypeBegin;
    // Choose single-stream or mixed-stream recording according to your business needs
    recordInfo.fileSuffix = MPRemoteRecordFileSingle;
    // If the recording requires a stream layout, customize it as described in MPRemoteRecordInfo
    // recordInfo.tagPositions = tagModelArray;
    // If the recording requires a client-defined watermark, customize it as described in MPRemoteRecordInfo
    //recordInfo.overlaps = customOverlaps;
    [MPIDRSSDK executeRemoteRecord:recordInfo waterMarkHandler:^(NSError * _Nonnull error) {
    }];

Modify the recording configuration.

For a recording task that has already started, you can modify the watermark and the stream layout.

    MPRemoteRecordInfo *recordInfo = [[MPRemoteRecordInfo alloc] init];
    recordInfo.roomId = self.roomId;
    recordInfo.recordType = MPRemoteRecordTypeChange;
    // 1. Record ID of the recording task
    recordInfo.recordId = recordId;
    // 2. Stream layout
    recordInfo.tagPositions = tagModelArray;
    // 3. Watermark
    recordInfo.overlaps = customOverlaps;
    [MPIDRSSDK executeRemoteRecord:recordInfo waterMarkHandler:^(NSError * _Nonnull error) {
    }];

MRTC recording callback.

Note: After recording starts successfully, report the recording task once.

    - (void)didReceiveCustomSignalingResponse:(NSDictionary *)dictionary {
        id opcmdObject = [dictionary objectForKey:@"opcmd"];
        if ([opcmdObject isKindOfClass:[NSNumber class]]) {
            int opcmd = [opcmdObject intValue];
            switch (opcmd) {
                case MPRemoteRecordTypeBeginResponse: {
                    self.startTime = [NSDate date];
                    // Record ID returned by the callback
                    self.recordId = [dictionary objectForKey:@"recordId"];
                    if ([[dictionary objectForKey:@"msg"] isEqualToString:@"SUCCESS"]) {
                        NSLog(@"Recording started; reporting the recording task");
                        [self uploadOpenRecordInfo];
                    } else {
                        NSLog(@"Failed to start recording; restart recording or notify the business side");
                    }
                }
                    break;
                case MPRemoteRecordTypeChangeResponse: {
                    if ([[dictionary valueForKey:@"msg"] isEqualToString:@"SUCCESS"]) {
                        NSLog(@"Recording configuration modified");
                    } else {
                        NSLog(@"Failed to modify the recording configuration");
                    }
                }
                    break;
                case MPRemoteRecordTypeStopResponse: {
                    if ([[dictionary valueForKey:@"msg"] isEqualToString:@"SUCCESS"]) {
                        NSLog(@"Recording stopped; if a result file was produced, report the file");
                        [self uploadRecordResult];
                    } else {
                        NSLog(@"Failed to stop recording");
                    }
                }
                    break;
                default:
                    break;
            }
        }
    }

    - (void)uploadOpenRecordInfo {
        IDRSUploadManagerParam *param = [[IDRSUploadManagerParam alloc] init];
        param.appId = AppId;
        param.ak = Ak;
        param.sk = Sk;
        param.type = IDRSRecordRemote;
        param.recordAt = self.startTime;
        param.roomId = self.roomId;
        // Record ID saved in didReceiveCustomSignalingResponse:
        param.rtcRecordId = self.recordId;
        // Business-defined role; keep it consistent with the video stream tag
        param.role = @"customRole";
        // Mixed-stream video or single-stream video
        param.videoType = IDRSRemoteRecordMix;
        param.outerBusinessId = [DemoSetting sharedInstance].outBusinessId;
        IDRSUploadManager *update = [[IDRSUploadManager alloc] init];
        [update uploadFileWithParam:param success:^(id _Nonnull responseObject) {
            if ([[responseObject objectForKey:@"Code"] isEqualToString:@"OK"]) {
                [self showToastWith:@"Result file uploaded" duration:2];
            }
        } failure:^(NSError * _Nonnull error, IDRSUploadManagerParam * _Nonnull upLoadParam) {
            if (upLoadParam) {
                [self showToastWith:@"Failed to upload the result file. Save the upload information and try again later." duration:3];
            }
        }];
    }

Stop a specific server-side recording task.

    [MPIDRSSDK stopRemoteRecord:@"record ID"];

When recording ends, upload the final recording information.

Note: If there is no result file, you do not need to report again when recording ends.

If recording is started multiple times, report once for each recording.

    - (void)uploadRecordResult {
        IDRSUploadFile *file = [[IDRSUploadFile alloc] init];
        file.fileName = @"filename";
        file.filePath = @"filepath";
        IDRSUploadManagerParam *param = [[IDRSUploadManagerParam alloc] init];
        param.files = [NSArray arrayWithObjects:file, nil];
        param.appId = AppId;
        param.ak = Ak;
        param.sk = Sk;
        param.type = IDRSRecordRemote;
        param.recordAt = self.startTime;
        param.roomId = self.roomId;
        // Record ID saved in didReceiveCustomSignalingResponse:
        param.rtcRecordId = self.recordId;
        // Business-defined role; keep it consistent with the video stream tag
        param.role = @"customRole";
        // Mixed-stream video or single-stream video
        param.videoType = IDRSRemoteRecordMix;
        param.outerBusinessId = [DemoSetting sharedInstance].outBusinessId;
        IDRSUploadManager *update = [[IDRSUploadManager alloc] init];
        [update uploadFileWithParam:param success:^(id _Nonnull responseObject) {
            if ([[responseObject objectForKey:@"Code"] isEqualToString:@"OK"]) {
                [self showToastWith:@"Result file uploaded" duration:2];
            }
        } failure:^(NSError * _Nonnull error, IDRSUploadManagerParam * _Nonnull upLoadParam) {
            if (upLoadParam) {
                [self showToastWith:@"Failed to upload the result file. Save the upload information and try again later." duration:3];
            }
        }];
    }
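The failure branch of the upload sample asks the app to save the upload information and upload again later, and each recording has to be reported separately. One possible way to keep track of what still needs to be retried is a small list of record IDs, sketched below in plain C. The names pending_uploads, pending_add, and pending_remove and both size limits are hypothetical. The list lives in memory only, so a real app would also have to persist it across launches.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define MAX_PENDING 16   /* hypothetical limit on queued retries */
#define RECORD_ID_MAX 64 /* hypothetical maximum ID length, including '\0' */

typedef struct {
    char ids[MAX_PENDING][RECORD_ID_MAX];
    size_t count;
} pending_uploads;

/* Remember a record ID whose report failed. Returns 1 if the ID is stored
 * (or was already present), 0 if it is too long or the list is full. */
static int pending_add(pending_uploads *p, const char *record_id)
{
    assert(p != NULL && record_id != NULL);
    if (strlen(record_id) >= RECORD_ID_MAX) {
        return 0;
    }
    for (size_t i = 0; i < p->count; i++) {
        if (strcmp(p->ids[i], record_id) == 0) {
            return 1; /* already queued, avoid duplicates */
        }
    }
    if (p->count == MAX_PENDING) {
        return 0;
    }
    strcpy(p->ids[p->count], record_id);
    p->count++;
    return 1;
}

/* Forget a record ID after its report succeeded. Returns 1 if it was found. */
static int pending_remove(pending_uploads *p, const char *record_id)
{
    assert(p != NULL && record_id != NULL);
    for (size_t i = 0; i < p->count; i++) {
        if (strcmp(p->ids[i], record_id) == 0) {
            if (i + 1 < p->count) {
                /* Shift the later entries down to close the gap. */
                memmove(p->ids[i], p->ids[i + 1],
                        (p->count - i - 1) * RECORD_ID_MAX);
            }
            p->count--;
            return 1;
        }
    }
    return 0;
}
```

In this sketch, the failure block would call pending_add with the record ID of the failed report, and a later successful retry would call pending_remove.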