Search分詞器

分詞器可以將長(zhǎng)文檔解析、拆分為多個(gè)詞,存入索引中。在多數(shù)場(chǎng)景下,您可以直接使用TairSearch提供的多種內(nèi)置分詞器,同時(shí)您也可以按需自定義分詞器。本文介紹TairSearch分詞器的使用方法。

導(dǎo)航

內(nèi)置分詞器 | Character Filter | Tokenizer | Token Filter |

分詞器的工作流程

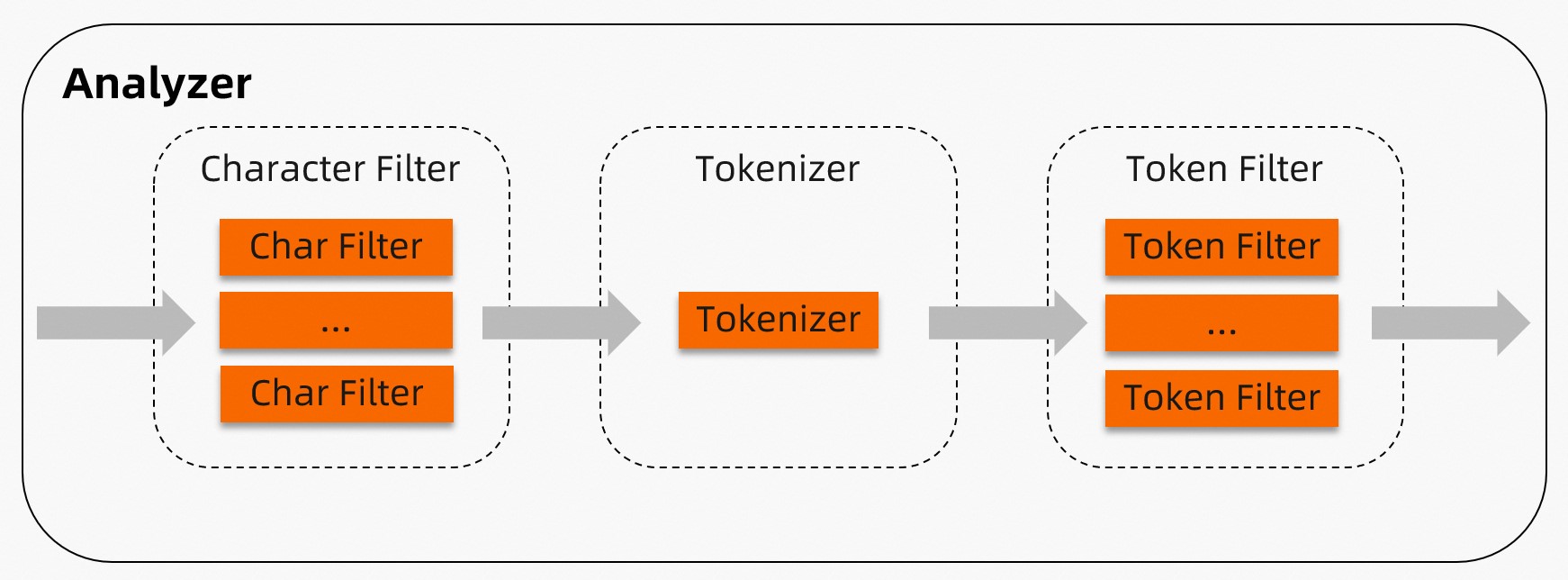

TairSearch分詞器由Character Filter、Tokenizer和Token Filter三部分組成,其工作流程依次為Character Filter、Tokenizer和Token Filter,其中Character Filter和Token Filter可以為空。 其具體作用如下:

其具體作用如下:

Character Filter:負(fù)責(zé)將文檔進(jìn)行預(yù)處理,每個(gè)分詞器可以配置零個(gè)或者多個(gè)Character Filter,多個(gè)Character Filter會(huì)按照指定順序執(zhí)行。例如將

"(:"字符替換成"happy"字符。Tokenizer:負(fù)責(zé)將輸入的文檔拆分成多個(gè)Token(詞元),每個(gè)分詞器僅能配置一個(gè)Tokenizer。例如通過Whitespace Tokenizer將

"I am very happy"拆分成["I", "am", "very", "happy"]。Token Filter:負(fù)責(zé)對(duì)Tokenizer產(chǎn)生的Token進(jìn)行處理,每個(gè)分詞器可以配置零個(gè)或者多個(gè)Token Filter,多個(gè)Token Filter會(huì)按照指定順序執(zhí)行。例如通過Stop Token Filter過濾停用詞(Stopwords)。

內(nèi)置分詞器

Standard

基于Unicode文本切割算法拆分文檔,并將Token(詞元,Tokenizer的結(jié)果)轉(zhuǎn)為小寫、過濾停用詞,適用于多數(shù)語(yǔ)言。

組成部分:

Tokenizer(分詞器):Standard Tokenizer。

Token Filter(詞元過濾器):LowerCase Token Filter和Stop Token Filter。

未展示Character Filter(字符過濾器)表示無Character Filter。

可選參數(shù):

stopwords:停用詞,分詞器會(huì)過濾這些詞。數(shù)組類型,單個(gè)停用詞必須是字符串。配置后,會(huì)覆蓋默認(rèn)停用詞。默認(rèn)停用詞如下:

["a", "an", "and", "are", "as", "at", "be", "but", "by", "for", "if", "in", "into", "is", "it", "no", "not", "of", "on", "or", "such", "that", "the", "their", "then", "there", "these", "they", "this", "to", "was", "will", "with"]max_token_length:每個(gè)Token的長(zhǎng)度上限,默認(rèn)為255。若Token超過該長(zhǎng)度,會(huì)根據(jù)指定的長(zhǎng)度上限對(duì)Token進(jìn)行拆分。

配置示例:

# 默認(rèn)配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"standard"

}

}

}

}

# 自定義停用詞配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_analyzer":{

"type":"standard",

"max_token_length":10,

"stopwords":[

"memory",

"disk",

"is",

"a"

]

}

}

}

}

}Stop

根據(jù)非字母(non-letter)的符號(hào)拆分文檔,并將Token轉(zhuǎn)為小寫,同時(shí)過濾停用詞。

組成部分:

Tokenizer:LowerCase Tokenizer。

Token Filter:Stop Token Filter。

可選參數(shù):

stopwords:停用詞,分詞器會(huì)過濾這些詞。數(shù)組類型,單個(gè)停用詞必須是字符串。配置后,會(huì)覆蓋默認(rèn)停用詞。默認(rèn)停用詞如下:

["a", "an", "and", "are", "as", "at", "be", "but", "by", "for", "if", "in", "into", "is", "it", "no", "not", "of", "on", "or", "such", "that", "the", "their", "then", "there", "these", "they", "this", "to", "was", "will", "with"]

配置示例:

# 默認(rèn)配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"stop"

}

}

}

}

# 自定義停用詞配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_analyzer":{

"type":"stop",

"stopwords":[

"memory",

"disk",

"is",

"a"

]

}

}

}

}

}Jieba

推薦的中文分詞器,可以按照預(yù)先訓(xùn)練好的詞典或者指定的詞典拆分文檔,采用Jieba搜索引擎模式,同時(shí)將英文Token轉(zhuǎn)為小寫,并過濾停用詞。

組成部分:

Tokenizer:Jieba Tokenizer。

Token Filter:LowerCase Token Filter和Stop Token Filter。

可選參數(shù):

userwords:自定義詞典,數(shù)組類型,單個(gè)詞必須是字符串。配置后會(huì)追加至默認(rèn)詞典中,默認(rèn)詞典請(qǐng)參見Jieba默認(rèn)詞典。

重要為了更好的分詞效果,Jieba內(nèi)置了一個(gè)較大的詞典,約占用20 MB內(nèi)存,該詞典在內(nèi)存中僅會(huì)保留一份。在首次使用Jieba時(shí)才會(huì)加載詞典,這可能會(huì)導(dǎo)致首次使用Jieba分詞器時(shí)延時(shí)出現(xiàn)微小的抖動(dòng)。

自定義詞典的單詞中不能出現(xiàn)空格與特殊字符:

\t、\n、,和。。

use_hmm:對(duì)于字典中不存在的詞,是否使用隱式馬爾科夫鏈模型判斷成詞,取值為true(默認(rèn),表示開啟)或false(不開啟)。

stopwords:停用詞,分詞器會(huì)過濾這些詞。數(shù)組類型,單個(gè)停用詞必須是字符串。配置后,會(huì)覆蓋默認(rèn)停用詞。默認(rèn)停用詞請(qǐng)參見Jieba默認(rèn)停用詞。

配置示例:

# 默認(rèn)配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"jieba"

}

}

}

}

# 自定義停用詞配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_analyzer":{

"type":"jieba",

"stopwords":[

"memory",

"disk",

"is",

"a"

],"userwords":[

"Redis",

"開源免費(fèi)",

"靈活"

],

"use_hmm":true

}

}

}

}

}IK

中文分詞器,兼容ES的IK分詞器插件。分為ik_max_word和ik_smart模式,ik_max_word模式會(huì)拆分出文檔中所有可能存在的Token,ik_smart模式會(huì)在ik_max_word的基礎(chǔ)上,對(duì)Token進(jìn)行二次識(shí)別,選擇出最有可能的Token。

以“Redis是完全開源免費(fèi)的,遵守BSD協(xié)議,是一個(gè)靈活的高性能key-value數(shù)據(jù)結(jié)構(gòu)存儲(chǔ),可以用來作為數(shù)據(jù)庫(kù)、緩存和消息隊(duì)列。Redis比其他key-value緩存產(chǎn)品有以下三個(gè)特點(diǎn):Redis支持?jǐn)?shù)據(jù)的持久化,可以將內(nèi)存中的數(shù)據(jù)保存在磁盤中,重啟的時(shí)候可以再次加載到內(nèi)存使用。”文檔為例,ik_max_word和ik_smart的Token如下:

ik_max_word:redis 是 完全 全開 開源 免費(fèi) 的 遵守 bsd 協(xié)議 是 一個(gè) 一 個(gè) 靈活 的 高性能 性能 key-value key value 數(shù)據(jù)結(jié)構(gòu) 數(shù)據(jù) 結(jié)構(gòu) 存儲(chǔ) 可以用 可以 用來 來作 作為 數(shù)據(jù)庫(kù) 數(shù)據(jù) 庫(kù) 緩存 和 消息 隊(duì)列 redis 比 其他 key-value key value 緩存 產(chǎn)品 有 以下 三個(gè) 三 個(gè) 特點(diǎn) redis 支持 數(shù)據(jù) 的 持久 化 可以 將 內(nèi)存 中 的 數(shù)據(jù) 保存 存在 磁盤 中 重啟 的 時(shí)候 可以 再次 加載 載到 內(nèi)存 使用ik_smart:redis 是 完全 開源 免費(fèi) 的 遵守 bsd 協(xié)議 是 一個(gè) 靈活 的 高性能 key-value 數(shù)據(jù)結(jié)構(gòu) 存儲(chǔ) 可以 用來 作為 數(shù)據(jù)庫(kù) 緩存 和 消息 隊(duì)列 redis 比 其他 key-value 緩存 產(chǎn)品 有 以下 三個(gè) 特點(diǎn) redis 支持 數(shù)據(jù) 的 持久 化 可以 將 內(nèi)存 中 的 數(shù)據(jù) 保 存在 磁盤 中 重啟 的 時(shí)候 可以 再次 加 載到 內(nèi)存 使用

組成部分:

Tokenizer:IK Tokenizer。

可選參數(shù):

stopwords:停用詞,分詞器會(huì)過濾這些詞。數(shù)組類型,單個(gè)停用詞必須是字符串。配置后,會(huì)覆蓋默認(rèn)停用詞。默認(rèn)停用詞如下:

["a", "an", "and", "are", "as", "at", "be", "but", "by", "for", "if", "in", "into", "is", "it", "no", "not", "of", "on", "or", "such", "that", "the", "their", "then", "there", "these", "they", "this", "to", "was", "will", "with"]userwords:自定義詞典,數(shù)組類型,單個(gè)詞必須是字符串,配置后會(huì)追加至默認(rèn)詞典中。默認(rèn)詞典請(qǐng)參見IK默認(rèn)詞典。

quantifiers:自定義量詞詞典,數(shù)組類型,單個(gè)詞必須是字符串,配置后會(huì)追加至默認(rèn)量詞詞典中。默認(rèn)量詞詞典請(qǐng)參見IK默認(rèn)量詞詞典。

enable_lowercase:是否將大寫字母轉(zhuǎn)換為小寫,取值為true(默認(rèn),表示開啟)或false(不開啟)。

重要由于本參數(shù)所控制的操作(將大寫字母轉(zhuǎn)換為小寫)會(huì)發(fā)生在分詞之前,若自定義詞典中存在大寫字母,請(qǐng)將本參數(shù)設(shè)置為false。

配置示例:

# 默認(rèn)配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"ik_smart"

},

"f1":{

"type":"text",

"analyzer":"ik_max_word"

}

}

}

}

# 自定義停用詞配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_ik_smart_analyzer"

},

"f1":{

"type":"text",

"analyzer":"my_ik_max_word_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_ik_smart_analyzer":{

"type":"ik_smart",

"stopwords":[

"memory",

"disk",

"is",

"a"

],"userwords":[

"Redis",

"開源免費(fèi)",

"靈活"

],

"quantifiers":[

"納秒"

],

"enable_lowercase":false

},

"my_ik_max_word_analyzer":{

"type":"ik_max_word",

"stopwords":[

"memory",

"disk",

"is",

"a"

],"userwords":[

"Redis",

"開源免費(fèi)",

"靈活"

],

"quantifiers":[

"納秒"

],

"enable_lowercase":false

}

}

}

}

}Pattern

根據(jù)指定的正則表達(dá)式拆分文檔,正則表達(dá)式匹配的詞將作為分隔符。例如指定的正則表達(dá)式是"aaa",對(duì)"bbbaaaccc"文檔進(jìn)行分詞,會(huì)得到"bbb"和"ccc",同時(shí)根據(jù)lowercase參數(shù)決定是否將英文Token轉(zhuǎn)為小寫,并過濾停用詞。

組成部分:

Tokenizer:Pattern Tokenizer。

Token Filter:LowerCase Token Filter和Stop Token Filter。

可選參數(shù):

pattern:正則表達(dá)式,正則表達(dá)式匹配的詞將作為分隔符,默認(rèn)為

\W+,更多語(yǔ)法信息請(qǐng)參見Re2。stopwords:停用詞,分詞器會(huì)過濾這些詞。配置時(shí),停用詞詞典必須是一個(gè)數(shù)組,每個(gè)停用詞必須是字符串,配置停用詞后會(huì)覆蓋默認(rèn)停用詞。默認(rèn)停用詞如下:

["a", "an", "and", "are", "as", "at", "be", "but", "by", "for", "if", "in", "into", "is", "it", "no", "not", "of", "on", "or", "such", "that", "the", "their", "then", "there", "these", "they", "this", "to", "was", "will", "with"]lowercase:是否將Token轉(zhuǎn)換為小寫,取值為true(默認(rèn),表示開啟)或false(不開啟)。

flags:正則表達(dá)式是否大小寫敏感,默認(rèn)為空(表示大小寫敏感),取值為CASE_INSENSITIVE(表示大小寫不敏感)。

配置示例:

# 默認(rèn)配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"pattern"

}

}

}

}

# 自定義停用詞配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_analyzer":{

"type":"pattern",

"pattern":"\\'([^\\']+)\\'",

"stopwords":[

"aaa",

"@"

],

"lowercase":false,

"flags":"CASE_INSENSITIVE"

}

}

}

}

}Whitespace

根據(jù)空格拆分文檔。

組成部分:

Tokenizer:Whitespace Tokenizer。

可選參數(shù):無

配置示例:

# 默認(rèn)配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"whitespace"

}

}

}

}Simple

根據(jù)非字母(non-letter)的符號(hào)拆分文檔,將Token轉(zhuǎn)為小寫。

組成部分:

Tokenizer:LowerCase Tokenizer。

可選參數(shù):無

配置示例:

# 默認(rèn)配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"simple"

}

}

}

}Keyword

不拆分文檔,將文檔作為一個(gè)Token輸出。

組成部分:

Tokenizer:Keyword Tokenizer。

可選參數(shù):無

配置示例:

# 默認(rèn)配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"keyword"

}

}

}

}Language

支持多國(guó)語(yǔ)言分詞器,包括:chinese、arabic、cjk、brazilian、czech、german、greek、persian、french、dutch和russian。

可選參數(shù):

stopwords:停用詞,分詞器會(huì)過濾這些詞。配置時(shí),停用詞詞典必須是一個(gè)數(shù)組,每個(gè)停用詞必須是字符串,配置停用詞后會(huì)覆蓋默認(rèn)停用詞。各語(yǔ)言的默認(rèn)停用詞請(qǐng)參見附錄4:內(nèi)置分詞器Language各語(yǔ)言的默認(rèn)停用詞(Stopwords)。

說明暫不支持修改chinese分詞器的停用詞。

stem_exclusion:指定不需要進(jìn)行詞干化處理的詞(Term),例如

"apples"進(jìn)行詞干化處理后為"apple"。本參數(shù)默認(rèn)為空,配置時(shí),stem_exclusion必須是一個(gè)數(shù)組,每個(gè)詞必須是字符串。說明僅brazilian、german、french和dutch分詞器支持本參數(shù)。

配置示例:

# 默認(rèn)配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"arabic"

}

}

}

}

# 自定義停用詞配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_analyzer":{

"type":"german",

"stopwords":[

"ein"

],

"stem_exclusion":[

"speicher"

]

}

}

}

}

}自定義分詞器

TairSearch分詞器的工作流程依次為Character Filter、Tokenizer和Token Filter,您可以按需配置Character Filter、Tokenizer和Token Filter參數(shù)。

配置方法:在properties中配置analyzer為自定義分詞器,例如my_custom_analyzer,在settings中,指定自定義分詞器(my_custom_analyzer)的相關(guān)配置。

參數(shù)說明:

參數(shù) | 說明 |

type(必選) | 固定為custom,表示自定義分詞器。 |

char_filter(可選) | 字符過濾器,在開始Tokenizer流程前,對(duì)文檔進(jìn)行預(yù)處理,默認(rèn)為空,表示不進(jìn)行預(yù)處理,當(dāng)前僅支持Mapping。 參數(shù)說明:

|

tokenizer(必選) | 分詞器,必選且只能選擇一個(gè),取值為:whitespace、lowercase、standard、classic、letter、keyword、jieba、pattern、ik_max_word和ik_smart,更多信息請(qǐng)參見附錄2:支持的Tokenizer。 |

filter(可選) | 詞元過濾器,對(duì)Token(Tokenizer的結(jié)果)進(jìn)行處理,例如刪除停用詞、將詞元轉(zhuǎn)換為小寫等,支持多選,默認(rèn)為空,表示不進(jìn)行處理。 取值為:classic、elision、lowercase、snowball、stop、asciifolding、length、arabic_normalization和persian_normalization,更多信息請(qǐng)參見附錄3:支持的Token Filter。 |

配置示例:

# 自定義分詞器配置:

# 本示例配置了名為emoticons和conjunctions的Character Filter,同時(shí)配置了Whitespace Tokenizer以及Lowercase Token Filter和Stop Token Filter。

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_custom_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_analyzer":{

"type":"custom",

"tokenizer":"whitespace",

"filter":[

"lowercase",

"stop"

],

"char_filter": [

"emoticons",

"conjunctions"

]

}

},

"char_filter":{

"emoticons":{

"type":"mapping",

"mappings":[

":) => _happy_",

":( => _sad_"

]

},

"conjunctions":{

"type":"mapping",

"mappings":[

"&=>and"

]

}

}

}

}

}附錄1:支持的Character Filter

Mapping Character Filter

可通過mappings參數(shù)配置Key-Value映射關(guān)系,當(dāng)匹配到Key字符,則用對(duì)應(yīng)Value進(jìn)行替換,例如":) =>_happy_",表示":)"會(huì)被"_happy_"替換。支持配置多個(gè)過濾器。

參數(shù)說明:

mappings(必填):數(shù)組類型,每個(gè)元素必須包含

=>,例如"&=>and"。

配置示例:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_custom_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_analyzer":{

"type":"custom",

"tokenizer":"standard",

"char_filter": [

"emoticons"

]

}

},

"char_filter":{

"emoticons":{

"type":"mapping",

"mappings":[

":) => _happy_",

":( => _sad_"

]

}

}

}

}

}附錄2:支持的Tokenizer

whitespace

根據(jù)空格拆分文檔。

可選參數(shù):

max_token_length:每個(gè)Token的長(zhǎng)度上限,默認(rèn)為255。若Token超過該長(zhǎng)度,會(huì)根據(jù)指定的長(zhǎng)度上限對(duì)Token進(jìn)行拆分。

配置示例:

# 默認(rèn)配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_custom_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_analyzer":{

"type":"custom",

"tokenizer":"whitespace"

}

}

}

}

}

# 自定義配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_custom_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_analyzer":{

"type":"custom",

"tokenizer":"token1"

}

},

"tokenizer":{

"token1":{

"type":"whitespace",

"max_token_length":2

}

}

}

}

}standard

基于Unicode文本切割算法拆分文檔,適用于多數(shù)語(yǔ)言。

可選參數(shù):

max_token_length:每個(gè)Token的長(zhǎng)度上限,默認(rèn)為255。若Token超過該長(zhǎng)度,會(huì)根據(jù)指定的長(zhǎng)度上限對(duì)Token進(jìn)行拆分。

配置示例:

# 默認(rèn)配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_custom_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_analyzer":{

"type":"custom",

"tokenizer":"standard"

}

}

}

}

}

# 自定義配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_custom_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_analyzer":{

"type":"custom",

"tokenizer":"token1"

}

},

"tokenizer":{

"token1":{

"type":"standard",

"max_token_length":2

}

}

}

}

}classic

根據(jù)英文語(yǔ)法拆分文檔,并且會(huì)對(duì)縮寫詞、公司名稱、電子郵件地址和互聯(lián)網(wǎng)IP地址進(jìn)行特殊處理,詳細(xì)說明如下。

按標(biāo)點(diǎn)符號(hào)拆分單詞,并刪除標(biāo)點(diǎn)符號(hào),但沒有空格的英文句號(hào)會(huì)被認(rèn)為是Token的一部分,例如

red.apple不會(huì)被拆分,red.[space] apple會(huì)被拆分為red和apple。按連字符拆分單詞,若Token中含有數(shù)字,則整個(gè)Token會(huì)被解釋為產(chǎn)品編號(hào)而不會(huì)被拆分。

將電子郵件地址和因特網(wǎng)主機(jī)名識(shí)別為一個(gè)Token。

可選參數(shù):

max_token_length:每個(gè)Token的長(zhǎng)度上限,默認(rèn)為255。若Token超過該長(zhǎng)度,會(huì)被跳過。

配置示例:

# 默認(rèn)配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_custom_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_analyzer":{

"type":"custom",

"tokenizer":"classic"

}

}

}

}

}

# 自定義配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_custom_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_analyzer":{

"type":"custom",

"tokenizer":"token1"

}

},

"tokenizer":{

"token1":{

"type":"classic",

"max_token_length":2

}

}

}

}

}letter

根據(jù)非字母(non-letter)的符號(hào)拆分文檔,適用于歐洲語(yǔ)言,不適用于亞洲語(yǔ)言。

配置示例:

# 默認(rèn)配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_custom_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_analyzer":{

"type":"custom",

"tokenizer":"letter"

}

}

}

}

}lowercase

根據(jù)非字母(non-letter)的符號(hào)拆分文檔,并將所有Token轉(zhuǎn)為小寫。Lowercase Tokenizer的分詞效果與Letter Tokenizer組合LowerCase Filter的效果相同,但Lowercase Tokenizer可減少一次遍歷。

配置示例:

# 默認(rèn)配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_custom_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_analyzer":{

"type":"custom",

"tokenizer":"lowercase"

}

}

}

}

}keyword

不拆分文檔,將文檔作為一個(gè)Token輸出。通常與Token Filter配合使用,例如Keyword Tokenizer組合Lowercase Token Filter,可實(shí)現(xiàn)將輸入的文檔轉(zhuǎn)為小寫。

配置示例:

# 默認(rèn)配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_custom_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_analyzer":{

"type":"custom",

"tokenizer":"keyword"

}

}

}

}

}jieba

推薦的中文分詞器,可以按照預(yù)先訓(xùn)練好的詞典或者指定的詞典拆分文檔。

可選參數(shù):

userwords:自定義詞典,數(shù)組類型,單個(gè)詞必須是字符串。配置后會(huì)追加至默認(rèn)詞典中,默認(rèn)詞典請(qǐng)參見Jieba默認(rèn)詞典。

重要自定義詞典的單詞中不能出現(xiàn)空格與特殊字符:

\t、\n、,和。。use_hmm:對(duì)于字典中不存在的詞,是否使用隱式馬爾科夫鏈模型判斷成詞,取值為true(默認(rèn),表示開啟)或false(不開啟)。

配置示例:

# 默認(rèn)配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_custom_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_analyzer":{

"type":"custom",

"tokenizer":"jieba"

}

}

}

}

}

# 自定義配置:

{

"mappings":{

"properties":{

"f1":{

"type":"text",

"analyzer":"my_custom_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_analyzer":{

"type":"custom",

"tokenizer":"token1"

}

},

"tokenizer":{

"token1":{

"type":"jieba",

"userwords":[

"Redis",

"開源免費(fèi)",

"靈活"

],

"use_hmm":true

}

}

}

}

}pattern

根據(jù)指定的正則表達(dá)式拆分文檔,正則表達(dá)式匹配的詞可以作為分隔符或者目標(biāo)Token。

可選參數(shù):

pattern:正則表達(dá)式,默認(rèn)為

\W+,更多語(yǔ)法信息請(qǐng)參見Re2。group:指定正則表達(dá)式作為分隔符或目標(biāo)Token,取值如下:

-1(默認(rèn)):指定正則表達(dá)式匹配的詞作為分隔符,例如指定的正則表達(dá)式是

"aaa",對(duì)"bbbaaaccc"文檔進(jìn)行分詞,會(huì)得到"bbb"和"ccc"。0或大于0的整數(shù):指定正則表達(dá)式匹配的詞作為目標(biāo)Token,0表示以整個(gè)正則表達(dá)式進(jìn)行匹配,1或1以上的整數(shù)表示以正則表達(dá)式中的第幾個(gè)捕獲組進(jìn)行匹配。例如指定的正則表達(dá)式是

"a(b+)c",對(duì)"abbbcdefabc"文檔進(jìn)行分詞:當(dāng)group為0時(shí),會(huì)得到"abbbc"和"abc";當(dāng)group為1時(shí),將以"a(b+)c"中的第一個(gè)捕獲組b+進(jìn)行匹配,會(huì)得到"bbb"和"b"。

flags:正則表達(dá)式是否大小寫敏感,默認(rèn)為空(表示大小寫敏感),取值為CASE_INSENSITIVE(表示大小寫不敏感)。

配置示例:

# 默認(rèn)配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_custom_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_analyzer":{

"type":"custom",

"tokenizer":"pattern"

}

}

}

}

}

# 自定義配置:

{

"mappings":{

"properties":{

"f1":{

"type":"text",

"analyzer":"my_custom_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_analyzer":{

"type":"custom",

"tokenizer":"pattern_tokenizer"

}

},

"tokenizer":{

"pattern_tokenizer":{

"type":"pattern",

"pattern":"AB(A(\\w+)C)",

"flags":"CASE_INSENSITIVE",

"group":2

}

}

}

}

}IK

中文分詞器,取值為ik_max_word或ik_smart。ik_max_word會(huì)拆分出文檔中所有可能存在的Token;ik_smart會(huì)在ik_max_word的基礎(chǔ)上,對(duì)Token進(jìn)行二次識(shí)別,選擇出最有可能的Token。

可選參數(shù):

stopwords:停用詞,分詞器會(huì)過濾這些詞。數(shù)組類型,單個(gè)停用詞必須是字符串。配置后,會(huì)覆蓋默認(rèn)停用詞。默認(rèn)停用詞如下:

["a", "an", "and", "are", "as", "at", "be", "but", "by", "for", "if", "in", "into", "is", "it", "no", "not", "of", "on", "or", "such", "that", "the", "their", "then", "there", "these", "they", "this", "to", "was", "will", "with"]userwords:自定義詞典,數(shù)組類型,單個(gè)詞必須是字符串,配置后會(huì)追加至默認(rèn)詞典中。默認(rèn)詞典請(qǐng)參見IK默認(rèn)詞典。

quantifiers:自定義量詞詞典,數(shù)組類型,單個(gè)詞必須是字符串,配置后會(huì)追加至默認(rèn)量詞詞典中。默認(rèn)量詞詞典請(qǐng)參見IK默認(rèn)量詞詞典。

enable_lowercase:是否將大寫字母轉(zhuǎn)換為小寫,取值為true(默認(rèn),表示開啟)或false(不開啟)。

重要由于本參數(shù)所控制的操作(將大寫字母轉(zhuǎn)換為小寫)會(huì)發(fā)生在分詞之前,若自定義詞典中存在大寫字母,請(qǐng)將本參數(shù)設(shè)置為false。

配置示例:

# 默認(rèn)配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_custom_ik_smart_analyzer"

},

"f1":{

"type":"text",

"analyzer":"my_custom_ik_max_word_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_ik_smart_analyzer":{

"type":"custom",

"tokenizer":"ik_smart"

},

"my_custom_ik_max_word_analyzer":{

"type":"custom",

"tokenizer":"ik_max_word"

}

}

}

}

}

# 自定義配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_custom_ik_smart_analyzer"

},

"f1":{

"type":"text",

"analyzer":"my_custom_ik_max_word_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_ik_smart_analyzer":{

"type":"custom",

"tokenizer":"my_ik_smart_tokenizer"

},

"my_custom_ik_max_word_analyzer":{

"type":"custom",

"tokenizer":"my_ik_max_word_tokenizer"

}

},

"tokenizer":{

"my_ik_smart_tokenizer":{

"type":"ik_smart",

"userwords":[

"中文分詞器",

"自定義stopwords"

],

"stopwords":[

"關(guān)于",

"測(cè)試"

],

"quantifiers":[

"納秒"

],

"enable_lowercase":false

},

"my_ik_max_word_tokenizer":{

"type":"ik_max_word",

"userwords":[

"中文分詞器",

"自定義stopwords"

],

"stopwords":[

"關(guān)于",

"測(cè)試"

],

"quantifiers":[

"納秒"

],

"enable_lowercase":false

}

}

}

}

}附錄3:支持的Token Filter

classic

過濾Token中尾部的's和縮略詞中的.,例如會(huì)將Fig.轉(zhuǎn)換為Fig。

配置示例:

# 默認(rèn)配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_custom_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_analyzer":{

"type":"custom",

"tokenizer":"classic",

"filter":["classic"]

}

}

}

}

}elision

過濾指定的元音,常用于法語(yǔ)中。

可選參數(shù):

articles(自定義時(shí)必填):指定的元音,數(shù)組類型,單個(gè)字母必須是字符串,默認(rèn)為

["l", "m", "t", "qu", "n", "s", "j"],配置后會(huì)覆蓋默認(rèn)詞典。articles_case(可選):指定的元音是否大小寫敏感,取值為true(表示大小寫不敏感)或false(默認(rèn),大小寫敏感)。

配置示例:

# 默認(rèn)配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_custom_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_analyzer":{

"type":"custom",

"tokenizer":"whitespace",

"filter":["elision"]

}

}

}

}

}

# 自定義配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_custom_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_analyzer":{

"type":"custom",

"tokenizer":"whitespace",

"filter":["elision_filter"]

}

},

"filter":{

"elision_filter":{

"type":"elision",

"articles":["l", "m", "t", "qu", "n", "s", "j"],

"articles_case":true

}

}

}

}

}lowercase

將所有Token轉(zhuǎn)換為小寫。

可選參數(shù):

language:詞元過濾器的語(yǔ)言,只能設(shè)置為greek或russian。若不設(shè)置該參數(shù),則默認(rèn)為英語(yǔ)。

配置示例:

# 默認(rèn)配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_custom_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_analyzer":{

"type":"custom",

"tokenizer":"whitespace",

"filter":["lowercase"]

}

}

}

}

}

# 自定義配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_custom_greek_analyzer"

},

"f1":{

"type":"text",

"analyzer":"my_custom_russian_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_greek_analyzer":{

"type":"custom",

"tokenizer":"whitespace",

"filter":["greek_lowercase"]

},

"my_custom_russian_analyzer":{

"type":"custom",

"tokenizer":"whitespace",

"filter":["russian_lowercase"]

}

},

"filter":{

"greek_lowercase":{

"type":"lowercase",

"language":"greek"

},

"russian_lowercase":{

"type":"lowercase",

"language":"russian"

}

}

}

}

}snowball

將所有Token轉(zhuǎn)換為詞干,例如將cats轉(zhuǎn)換為cat。

可選參數(shù):

language:詞元過濾器的語(yǔ)言,取值為english(默認(rèn))、german、french和dutch。

配置示例:

# 默認(rèn)配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_custom_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_analyzer":{

"type":"custom",

"tokenizer":"whitespace",

"filter":["snowball"]

}

}

}

}

}

# 自定義配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_custom_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_analyzer":{

"type":"custom",

"tokenizer":"standard",

"filter":["my_filter"]

}

},

"filter":{

"my_filter":{

"type":"snowball",

"language":"english"

}

}

}

}

}stop

根據(jù)指定的停用詞數(shù)組,過濾Token中出現(xiàn)的停用詞。

可選參數(shù):

stopwords:停用詞數(shù)組,單個(gè)停用詞必須是字符串。配置后,會(huì)覆蓋默認(rèn)停用詞。默認(rèn)停用詞如下:

["a", "an", "and", "are", "as", "at", "be", "but", "by", "for", "if", "in", "into", "is", "it", "no", "not", "of", "on", "or", "such", "that", "the", "their", "then", "there", "these", "they", "this", "to", "was", "will", "with"]ignoreCase:匹配停用詞時(shí)是否大小寫敏感,取值為true(表示大小寫不敏感)或false(默認(rèn),大小寫敏感)。

配置示例:

# 默認(rèn)配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_custom_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_analyzer":{

"type":"custom",

"tokenizer":"whitespace",

"filter":["stop"]

}

}

}

}

}

# 自定義配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_custom_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_analyzer":{

"type":"custom",

"tokenizer":"standard",

"filter":["stop_filter"]

}

},

"filter":{

"stop_filter":{

"type":"stop",

"stopwords":[

"the"

],

"ignore_case":true

}

}

}

}

}asciifolding

將不在基本拉丁文Unicode塊(前127個(gè)ASCII字符)中的字母、數(shù)字和符號(hào)轉(zhuǎn)換為等價(jià)的ASCII字符(如果存在),例如將é轉(zhuǎn)換為e。

配置示例:

# 默認(rèn)配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_custom_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_analyzer":{

"type":"custom",

"tokenizer":"standard",

"filter":["asciifolding"]

}

}

}

}

}length

過濾指定長(zhǎng)度范圍以外的Token。

可選參數(shù):

min:Token的最小長(zhǎng)度,整數(shù),默認(rèn)為0。

max:Token的最大長(zhǎng)度,整數(shù),默認(rèn)為(2^31 - 1)。

配置示例:

# 默認(rèn)配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_custom_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_analyzer":{

"type":"custom",

"tokenizer":"whitespace",

"filter":["length"]

}

}

}

}

}

# 自定義配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_custom_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_custom_analyzer":{

"type":"custom",

"tokenizer":"whitespace",

"filter":["length_filter"]

}

},

"filter":{

"length_filter":{

"type":"length",

"max":5,

"min":2

}

}

}

}

}Normalization

規(guī)范某種語(yǔ)言的特定字符,取值為arabic_normalization或persian_normalization,推薦搭配Standard tokenizer使用。

配置示例:

# 默認(rèn)配置:

{

"mappings":{

"properties":{

"f0":{

"type":"text",

"analyzer":"my_arabic_analyzer"

},

"f1":{

"type":"text",

"analyzer":"my_persian_analyzer"

}

}

},

"settings":{

"analysis":{

"analyzer":{

"my_arabic_analyzer":{

"type":"custom",

"tokenizer":"arabic",

"filter":["arabic_normalization"]

},

"my_persian_analyzer":{

"type":"custom",

"tokenizer":"arabic",

"filter":["persian_normalization"]

}

}

}

}

}附錄4:內(nèi)置分詞器Language各語(yǔ)言的默認(rèn)停用詞(Stopwords)

arabic

["??","???","????","???","??","???","????","???","?","?","??","??","??","?","???","??","?","?","??","??","??","??","??","???","???","???","???","???","??","???","???","???","??","??","???","???","??","??","??","????","????","????","???","???","???","???","???","???","???","???","???","???","????","????","????","????","?????","???","???","???","???","???","?????","????","???","???","???","????","????","??","???","??","???","??","???","??","??","??","???","???","???","???","???","???","???","???","??","???","??","???","???","???","???","????","????","???","????","????","?????","????","???","???","??","???","???","?????","???","???","???","????","????","????","???","???","???","???","?????","???","????"]cjk

["with","will","to","this","there","then","the","t","that","such","s","on","not","no","it","www","was","is","","into","their","or","in","if","for","by","but","they","be","these","at","are","as","and","of","a"]brazilian

["uns","umas","uma","teu","tambem","tal","suas","sobre","sob","seu","sendo","seja","sem","se","quem","tua","que","qualquer","porque","por","perante","pelos","pelo","outros","outro","outras","outra","os","o","nesse","nas","na","mesmos","mesmas","mesma","um","neste","menos","quais","mediante","proprio","logo","isto","isso","ha","estes","este","propios","estas","esta","todas","esses","essas","toda","entre","nos","entao","em","eles","qual","elas","tuas","ela","tudo","do","mesmo","diversas","todos","diversa","seus","dispoem","ou","dispoe","teus","deste","quer","desta","diversos","desde","quanto","depois","demais","quando","essa","deles","todo","pois","dele","dela","dos","de","da","nem","cujos","das","cujo","durante","cujas","portanto","cuja","contudo","ele","contra","como","com","pelas","assim","as","aqueles","mais","esse","aquele","mas","apos","aos","aonde","sua","e","ao","antes","nao","ambos","ambas","alem","ainda","a"]czech

["a","s","k","o","i","u","v","z","dnes","cz","tímto","bude?","budem","byli","jse?","muj","svym","ta","tomto","tohle","tuto","tyto","jej","zda","proc","máte","tato","kam","tohoto","kdo","kterí","mi","nám","tom","tomuto","mít","nic","proto","kterou","byla","toho","proto?e","asi","ho","na?i","napi?te","re","co?","tím","tak?e","svych","její","svymi","jste","aj","tu","tedy","teto","bylo","kde","ke","pravé","ji","nad","nejsou","ci","pod","téma","mezi","pres","ty","pak","vám","ani","kdy?","v?ak","neg","jsem","tento","clánku","clánky","aby","jsme","pred","pta","jejich","byl","je?te","a?","bez","také","pouze","první","va?e","která","nás","novy","tipy","pokud","mu?e","strana","jeho","své","jiné","zprávy","nové","není","vás","jen","podle","zde","u?","byt","více","bude","ji?","ne?","ktery","by","které","co","nebo","ten","tak","má","pri","od","po","jsou","jak","dal?í","ale","si","se","ve","to","jako","za","zpet","ze","do","pro","je","na","atd","atp","jakmile","pricem?","já","on","ona","ono","oni","ony","my","vy","jí","ji","me","mne","jemu","tomu","tem","temu","nemu","nemu?","jeho?","jí?","jeliko?","je?","jako?","nace?"]german

["wegen","mir","mich","dich","dir","ihre","wird","sein","auf","durch","ihres","ist","aus","von","im","war","mit","ohne","oder","kein","wie","was","es","sie","mein","er","du","da?","dass","die","als","ihr","wir","der","für","das","einen","wer","einem","am","und","eines","eine","in","einer"]greek

["ο","η","το","οι","τα","του","τησ","των","τον","την","και","κι","κ","ειμαι","εισαι","ειναι","ειμαστε","ειστε","στο","στον","στη","στην","μα","αλλα","απο","για","προσ","με","σε","ωσ","παρα","αντι","κατα","μετα","θα","να","δε","δεν","μη","μην","επι","ενω","εαν","αν","τοτε","που","πωσ","ποιοσ","ποια","ποιο","ποιοι","ποιεσ","ποιων","ποιουσ","αυτοσ","αυτη","αυτο","αυτοι","αυτων","αυτουσ","αυτεσ","αυτα","εκεινοσ","εκεινη","εκεινο","εκεινοι","εκεινεσ","εκεινα","εκεινων","εκεινουσ","οπωσ","ομωσ","ισωσ","οσο","οτι"]persian

["????","??????","?????","????","?????","??","??????","??????","???","??","????","???","??","??????","???","?????","????","???","????","?????","????","?????","????","????","??","?","??","??????","???","???","??","???","?","????","????","??","???","???","??????","??","????","???","???","????","???","?????","?????","???","?????","?????","?????","??????","???????","??????","???","?????","??","?????","???","????","?????","???","????","????","??????","???????","??","?????","????","????","?????","????","?????","????","????","???","????","???","????","???","?????","????","????","????","???","????","????","????","?????","????","???","??","????","???","????","?????","???","???","??????","???","??????","????","????","????????","??????","???","??","????","???","?????","????","?????","???","????","??????","?????","?????","??","??????","?????","???","????","????","???","??","??????","??","?????","???","??","?????","?????","?????","????","?????","?????","??","????","????","???","??","????","????","????","????","????","????","???","???","???","???","???","?????","??????","???","?????","????","???","???","?????","???","???????","???","????","?????","?????","???","????","????","????","????","?????","??","????","??","????","?????","????","???","????","??","????","???","?????","???","?????","????","?????","??","???","??","??","???","????","?????","?????","???","????","????","??????","?????","??","????","?????","?????","???","????","?????","??","?????","????","???","????","????","????","????","????????","???","????","??","????","???","???","?????","????","???","?????","???","????","??????","????","??????","????","??","????","??","????","??","????","????","???","???","???","???","????","????","???","??????","???","?????","????","???","????","???","??","?????","???","??","???","????","???","???","???","???","????","???","???","???","????","???","????","?????","???","????","??","??????","??","????","????","?????","??????","???","??????","??","???","??","??","????","????","??????","??","???","??????","????","???","?????","???","???","???","???","???","????","??","?????","???","????","?????","???"]french

["?","être","vu","vous","votre","un","tu","toute","tout","tous","toi","tiens","tes","suivant","soit","soi","sinon","siennes","si","se","sauf","s","quoi","vers","qui","quels","ton","quelle","quoique","quand","près","pourquoi","plus","à","pendant","partant","outre","on","nous","notre","nos","tienne","ses","non","qu","ni","ne","mêmes","même","moyennant","mon","moins","va","sur","moi","miens","proche","miennes","mienne","tien","mien","n","malgré","quelles","plein","mais","là","revoilà","lui","leurs","?","toutes","le","où","la","l","jusque","jusqu","ils","hélas","ou","hormis","laquelle","il","eu","n?tre","etc","est","environ","une","entre","en","son","elles","elle","dès","durant","duquel","été","du","voici","par","dont","donc","voilà","hors","doit","plusieurs","diverses","diverse","divers","devra","devers","tiennes","dessus","etre","dessous","desquels","desquelles","ès","et","désormais","des","te","pas","derrière","depuis","delà","hui","dehors","sans","dedans","debout","v?tre","de","dans","n?tres","mes","d","y","vos","je","concernant","comme","comment","combien","lorsque","ci","ta","n?nmoins","lequel","chez","contre","ceux","cette","j","cet","seront","que","ces","leur","certains","certaines","puisque","certaine","certain","passé","cependant","celui","lesquelles","celles","quel","celle","devant","cela","revoici","eux","ceci","sienne","merci","ce","c","siens","les","avoir","sous","avec","pour","parmi","avant","car","avait","sont","me","auxquels","sien","sa","excepté","auxquelles","aux","ma","autres","autre","aussi","auquel","aujourd","au","attendu","selon","après","ont","ainsi","ai","afin","v?tres","lesquels","a"]dutch

["andere","uw","niets","wil","na","tegen","ons","wordt","werd","hier","eens","onder","alles","zelf","hun","dus","kan","ben","meer","iets","me","veel","omdat","zal","nog","altijd","ja","want","u","zonder","deze","hebben","wie","zij","heeft","hoe","nu","heb","naar","worden","haar","daar","der","je","doch","moet","tot","uit","bij","geweest","kon","ge","zich","wezen","ze","al","zo","dit","waren","men","mijn","kunnen","wat","zou","dan","hem","om","maar","ook","er","had","voor","of","als","reeds","door","met","over","aan","mij","was","is","geen","zijn","niet","iemand","het","hij","een","toen","in","toch","die","dat","te","doen","ik","van","op","en","de"]russian

["а","без","более","бы","был","была","были","было","быть","в","вам","вас","весь","во","вот","все","всего","всех","вы","где","да","даже","для","до","его","ее","ей","ею","если","есть","еще","же","за","здесь","и","из","или","им","их","к","как","ко","когда","кто","ли","либо","мне","может","мы","на","надо","наш","не","него","нее","нет","ни","них","но","ну","о","об","однако","он","она","они","оно","от","очень","по","под","при","с","со","так","также","такой","там","те","тем","то","того","тоже","той","только","том","ты","у","уже","хотя","чего","чей","чем","что","чтобы","чье","чья","эта","эти","это","я"]