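Test plan overview

This article describes how to use TPC-H (a business intelligence computing benchmark) to run performance tests for OLAP query scenarios and Key/Value point query scenarios.

TPC-H overview

The following description is quoted from the TPC Benchmark™ H (TPC-H) specification:

TPC-H is a decision support benchmark. It consists of a suite of business-oriented ad hoc queries and concurrent data modifications. The queries and the data populating the database have been chosen to have broad industry-wide relevance. This benchmark illustrates decision support systems that examine large volumes of data, execute queries with a high degree of complexity, and give answers to critical business questions.

For details, see TPCH Specification.

The TPC-H implementation in this article is derived from the TPC-H benchmark. Its results cannot be compared with published TPC-H benchmark results, because the tests in this article do not comply with all requirements of the TPC-H benchmark.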

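Dataset overview

TPC-H (business intelligence computing test) is a test suite developed by the Transaction Processing Performance Council (TPC) to simulate decision support applications. It is widely used in academia and industry to evaluate the performance of decision support technology.

TPC-H models a real production environment and simulates the data warehouse of a sales system. It contains 8 tables, and the data volume can be set from 1 GB to 3 TB. The benchmark includes 22 queries. The main metric is the response time of each query, that is, the time from submitting the query to receiving the result. The results give an overall picture of the query processing capability of the system. For details, see TPC-H benchmark.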

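Scenario description

This test covers the following scenarios:

OLAP query test: uses column-oriented tables and runs the 22 TPC-H queries directly.

Key/Value point query test: uses row-oriented tables. A row-oriented copy of the ORDERS table is created and then queried by primary key.

Data update test: measures the data update performance of the OLAP engine on a table that has a primary key.

The data volume directly affects the test results. The TPC-H data generator uses SF (scale factor) to control the size of the generated data: 1 SF corresponds to 1 GB.

These figures cover the raw data only and do not include the space used by indexes and other structures, so reserve more space when you prepare the environment.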
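The sizing rule above (1 SF is about 1 GB of raw data, plus extra space for indexes and other overhead) can be sketched as a small helper. The `headroom` multiplier is a hypothetical value chosen for illustration; the document does not state how much extra space to reserve.

```python
def estimate_disk_gb(scale_factor: int, headroom: float = 2.0) -> float:
    """Return a rough disk budget in GB for a TPC-H scale factor.

    1 SF corresponds to roughly 1 GB of raw data (from the text).
    `headroom` is an assumed multiplier for indexes and temporary files;
    it is NOT a value given by the benchmark documentation.
    """
    if scale_factor < 1:
        raise ValueError("scale_factor must be >= 1")
    if headroom < 1.0:
        raise ValueError("headroom must be >= 1.0")
    raw_gb = float(scale_factor)  # 1 SF ~ 1 GB of raw data
    return raw_gb * headroom


if __name__ == "__main__":
    for sf in (1, 100, 1000):
        print(f"SF={sf}: reserve about {estimate_disk_gb(sf):.0f} GB")
```

Adjust `headroom` after observing the actual storage used by a small test run.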

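Usage notes

To reduce the number of variables that may affect the test results, we recommend that you create a new instance for each test. Do not use an instance that has been upgraded or downgraded.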

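OLAP query scenario test

Make preparations.

Prepare the basic environment required for the OLAP query scenario.

Create a Hologres instance. For details, see Purchase Hologres. This test uses an exclusive (pay-as-you-go) instance. Because the instance is used only for testing, its compute resources are set to 96 cores and 384 GB. Choose the compute specification that matches your business requirements.

Create an ECS instance. For details, see Create an ECS instance. The ECS instance used in this article has the following specifications:

| Parameter | Specification |
| --- | --- |
| Instance type | ecs.g6.4xlarge |
| Image | Alibaba Cloud Linux 3.2104 LTS 64-bit |
| Data disk | ESSD cloud disk. The capacity depends on the volume of the test data. |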

Download and configure the Hologres Benchmark test toolkit.

Log on to the ECS instance. For details, see Connect to an ECS instance.

Install the PSQL client.

    yum update -y
    yum install postgresql-server -y
    yum install postgresql-contrib -y

Download the Hologres Benchmark test toolkit and decompress it.

    wget https://oss-tpch.oss-cn-hangzhou.aliyuncs.com/hologres_benchmark.tar.gz
    tar xvf hologres_benchmark.tar.gz

Go to the Hologres Benchmark directory.

    cd hologres_benchmark

Run the vim group_vars/all command and configure the parameters required by the benchmark.

    # db config
    login_host: ""
    login_user: ""
    login_password: ""
    login_port: ""

    # benchmark run cluster: hologres
    cluster: "hologres"
    RUN_MODE: "HOTRUN"

    # benchmark config
    scale_factor: 1
    work_dir_root: /your/working_dir/benchmark/workdirs
    dataset_generate_root_path: /your/working_dir/benchmark/datasets

Parameter description:

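| Type | Parameter | Description |
| --- | --- | --- |
| Hologres connection parameters | login_host | The VPC domain name of the Hologres instance. Log on to the management console, open the instance details page, and copy the VPC domain name from the Domain Name column of the Network Information section. Note: the domain name does not include the port. Example: hgpostcn-cn-nwy364b5v009-cn-shanghai-vpc-st.hologres.aliyuncs.com |
| Hologres connection parameters | login_port | The VPC port of the Hologres instance. Log on to the management console, open the instance details page, and take the port of the VPC domain name from the Domain Name column of the Network Information section. |
| Hologres connection parameters | login_user | The AccessKey ID of the account. Click AccessKey Management to obtain the AccessKey ID. |
| Hologres connection parameters | login_password | The AccessKey Secret of the current account. |
| Benchmark configuration parameters | scale_factor | The scale factor of the dataset, which controls the size of the generated data. Default value: 1. Unit: GB. |
| Benchmark configuration parameters | work_dir_root | The root of the working directory, which stores the TPCH table creation statements, the executed SQL statements, and related data. Default value: /your/working_dir/benchmark/workdirs. |
| Benchmark configuration parameters | dataset_generate_root_path | The path where the generated test dataset is stored. Default value: /your/working_dir/benchmark/datasets. |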
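Before starting a long run, it can help to check the values entered in group_vars/all against the rules in the table above. The following is a minimal sketch, not part of the Hologres Benchmark toolkit: the function name `check_benchmark_config` and the idea of passing the settings as a dictionary are assumptions made for illustration.

```python
from typing import Any, Dict, List


def check_benchmark_config(cfg: Dict[str, Any]) -> List[str]:
    """Return a list of problems found in the benchmark settings.

    Hypothetical helper: it only encodes the rules stated in the
    parameter table (no port in login_host, non-empty credentials,
    scale_factor as a positive integer).
    """
    problems: List[str] = []
    for key in ("login_host", "login_user", "login_password", "login_port"):
        if not str(cfg.get(key, "")).strip():
            problems.append(f"{key} is empty")
    host = str(cfg.get("login_host", ""))
    if ":" in host:
        problems.append("login_host must not include the port")
    port = str(cfg.get("login_port", "")).strip()
    if port and not port.isdigit():
        problems.append("login_port must be numeric")
    scale_factor = cfg.get("scale_factor", 1)
    if not isinstance(scale_factor, int) or scale_factor < 1:
        problems.append("scale_factor must be a positive integer")
    return problems


if __name__ == "__main__":
    example = {
        "login_host": "hgpostcn-cn-nwy364b5v009-cn-shanghai-vpc-st.hologres.aliyuncs.com",
        "login_user": "<AccessKey_ID>",
        "login_password": "<AccessKey_Secret>",
        "login_port": "80",  # placeholder port, not taken from the document
        "scale_factor": 1,
    }
    print(check_benchmark_config(example) or "configuration looks consistent")
```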

Run the following command to perform the fully automated TPC-H test.

The fully automated TPC-H test covers the following steps: generating data, creating the test database tpc_h_sf<scale_factor> (for example, tpc_h_sf1000), creating tables, and importing data.

    bin/run_tpch.sh

You can also run the following command to run only the TPC-H query test.

    bin/run_tpch.sh query

View the test results.

Test result overview.

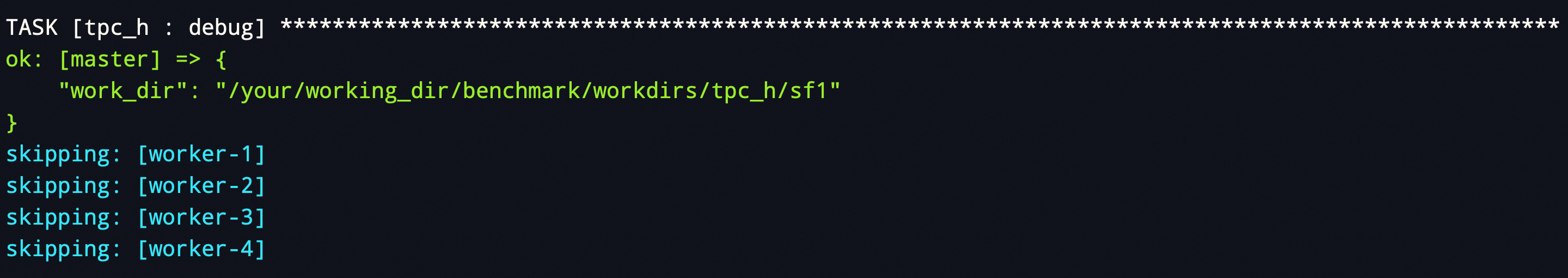

After the bin/run_tpch.sh command finishes, it prints the test results directly. The output is similar to the following.

    TASK [tpc_h : debug] **************************************************************************************************
    skipping: [worker-1]
    ok: [master] => {
        "command_output.stdout_lines": [
            "[info] 2024-06-28 14:46:09.768 | Run sql queries started.",
            "[info] 2024-06-28 14:46:09.947 | Run q10.sql started.",
            "[info] 2024-06-28 14:46:10.088 | Run q10.sql finished. Time taken: 0:00:00, 138 ms",
            "[info] 2024-06-28 14:46:10.239 | Run q11.sql started.",
            "[info] 2024-06-28 14:46:10.396 | Run q11.sql finished. Time taken: 0:00:00, 154 ms",
            "[info] 2024-06-28 14:46:10.505 | Run q12.sql started.",
            "[info] 2024-06-28 14:46:10.592 | Run q12.sql finished. Time taken: 0:00:00, 85 ms",
            "[info] 2024-06-28 14:46:10.703 | Run q13.sql started.",
            "[info] 2024-06-28 14:46:10.793 | Run q13.sql finished. Time taken: 0:00:00, 88 ms",
            "[info] 2024-06-28 14:46:10.883 | Run q14.sql started.",
            "[info] 2024-06-28 14:46:10.981 | Run q14.sql finished. Time taken: 0:00:00, 95 ms",
            "[info] 2024-06-28 14:46:11.132 | Run q15.sql started.",
            "[info] 2024-06-28 14:46:11.266 | Run q15.sql finished. Time taken: 0:00:00, 131 ms",
            "[info] 2024-06-28 14:46:11.441 | Run q16.sql started.",
            "[info] 2024-06-28 14:46:11.609 | Run q16.sql finished. Time taken: 0:00:00, 165 ms",
            "[info] 2024-06-28 14:46:11.728 | Run q17.sql started.",
            "[info] 2024-06-28 14:46:11.818 | Run q17.sql finished. Time taken: 0:00:00, 88 ms",
            "[info] 2024-06-28 14:46:12.017 | Run q18.sql started.",
            "[info] 2024-06-28 14:46:12.184 | Run q18.sql finished. Time taken: 0:00:00, 164 ms",
            "[info] 2024-06-28 14:46:12.287 | Run q19.sql started.",
            "[info] 2024-06-28 14:46:12.388 | Run q19.sql finished. Time taken: 0:00:00, 98 ms",
            "[info] 2024-06-28 14:46:12.503 | Run q1.sql started.",
            "[info] 2024-06-28 14:46:12.597 | Run q1.sql finished. Time taken: 0:00:00, 93 ms",
            "[info] 2024-06-28 14:46:12.732 | Run q20.sql started.",
            "[info] 2024-06-28 14:46:12.888 | Run q20.sql finished. Time taken: 0:00:00, 154 ms",
            "[info] 2024-06-28 14:46:13.184 | Run q21.sql started.",
            "[info] 2024-06-28 14:46:13.456 | Run q21.sql finished. Time taken: 0:00:00, 269 ms",
            "[info] 2024-06-28 14:46:13.558 | Run q22.sql started.",
            "[info] 2024-06-28 14:46:13.657 | Run q22.sql finished. Time taken: 0:00:00, 97 ms",
            "[info] 2024-06-28 14:46:13.796 | Run q2.sql started.",
            "[info] 2024-06-28 14:46:13.935 | Run q2.sql finished. Time taken: 0:00:00, 136 ms",
            "[info] 2024-06-28 14:46:14.051 | Run q3.sql started.",
            "[info] 2024-06-28 14:46:14.155 | Run q3.sql finished. Time taken: 0:00:00, 101 ms",
            "[info] 2024-06-28 14:46:14.255 | Run q4.sql started.",
            "[info] 2024-06-28 14:46:14.341 | Run q4.sql finished. Time taken: 0:00:00, 83 ms",
            "[info] 2024-06-28 14:46:14.567 | Run q5.sql started.",
            "[info] 2024-06-28 14:46:14.799 | Run q5.sql finished. Time taken: 0:00:00, 230 ms",
            "[info] 2024-06-28 14:46:14.881 | Run q6.sql started.",
            "[info] 2024-06-28 14:46:14.950 | Run q6.sql finished. Time taken: 0:00:00, 67 ms",
            "[info] 2024-06-28 14:46:15.138 | Run q7.sql started.",
            "[info] 2024-06-28 14:46:15.320 | Run q7.sql finished. Time taken: 0:00:00, 180 ms",
            "[info] 2024-06-28 14:46:15.572 | Run q8.sql started.",
            "[info] 2024-06-28 14:46:15.831 | Run q8.sql finished. Time taken: 0:00:00, 256 ms",
            "[info] 2024-06-28 14:46:16.081 | Run q9.sql started.",
            "[info] 2024-06-28 14:46:16.322 | Run q9.sql finished. Time taken: 0:00:00, 238 ms",
            "[info] 2024-06-28 14:46:16.325 | ----------- HOT RUN finished. Time taken: 3255 mill_sec -----------------"
        ]
    }
    skipping: [worker-2]
    skipping: [worker-3]
    skipping: [worker-4]

    TASK [tpc_h : clear Env] **********************************************************************************************
    skipping: [worker-1]
    skipping: [worker-2]
    skipping: [worker-3]
    skipping: [worker-4]
    ok: [master]

    TASK [tpc_h : debug] **************************************************************************************************
    ok: [master] => {
        "work_dir": "/your/working_dir/benchmark/workdirs/tpc_h/sf1"
    }
    skipping: [worker-1]
    skipping: [worker-2]
    skipping: [worker-3]
    skipping: [worker-4]

Test result details.

After the bin/run_tpch.sh command runs successfully, the system builds the working directory for the whole TPC-H test and prints the path of <work_dir>. Switch to that path to view the query statements, table creation statements, execution logs, and related information, as shown in the figure.

Run the cd <work_dir>/logs command to enter the logs directory under the working directory, where you can view the test results and the detailed output of each SQL statement. The structure of <work_dir> is as follows.

    working_dir/
    `-- benchmark
        |-- datasets
        |   `-- tpc_h
        |       `-- sf1
        |           |-- worker-1
        |           |   |-- customer.tbl
        |           |   `-- lineitem.tbl
        |           |-- worker-2
        |           |   |-- orders.tbl
        |           |   `-- supplier.tbl
        |           |-- worker-3
        |           |   |-- nation.tbl
        |           |   `-- partsupp.tbl
        |           `-- worker-4
        |               |-- part.tbl
        |               `-- region.tbl
        `-- workdirs
            `-- tpc_h
                `-- sf1
                    |-- config
                    |-- hologres
                    |   |-- logs
                    |   |   |-- q10.sql.err
                    |   |   |-- q10.sql.out
                    |   |   |-- q11.sql.err
                    |   |   |-- q11.sql.out
                    |   |   |-- q12.sql.err
                    |   |   |-- q12.sql.out
                    |   |   |-- q13.sql.err
                    |   |   |-- q13.sql.out
                    |   |   |-- q14.sql.err
                    |   |   |-- q14.sql.out
                    |   |   |-- q15.sql.err
                    |   |   |-- q15.sql.out
                    |   |   |-- q16.sql.err
                    |   |   |-- q16.sql.out
                    |   |   |-- q17.sql.err
                    |   |   |-- q17.sql.out
                    |   |   |-- q18.sql.err
                    |   |   |-- q18.sql.out
                    |   |   |-- q19.sql.err
                    |   |   |-- q19.sql.out
                    |   |   |-- q1.sql.err
                    |   |   |-- q1.sql.out
                    |   |   |-- q20.sql.err
                    |   |   |-- q20.sql.out
                    |   |   |-- q21.sql.err
                    |   |   |-- q21.sql.out
                    |   |   |-- q22.sql.err
                    |   |   |-- q22.sql.out
                    |   |   |-- q2.sql.err
                    |   |   |-- q2.sql.out
                    |   |   |-- q3.sql.err
                    |   |   |-- q3.sql.out
                    |   |   |-- q4.sql.err
                    |   |   |-- q4.sql.out
                    |   |   |-- q5.sql.err
                    |   |   |-- q5.sql.out
                    |   |   |-- q6.sql.err
                    |   |   |-- q6.sql.out
                    |   |   |-- q7.sql.err
                    |   |   |-- q7.sql.out
                    |   |   |-- q8.sql.err
                    |   |   |-- q8.sql.out
                    |   |   |-- q9.sql.err
                    |   |   |-- q9.sql.out
                    |   |   `-- run.log
                    |   `-- logs-20240628144609
                    |       |-- q10.sql.err
                    |       |-- q10.sql.out
                    |       |-- q11.sql.err
                    |       |-- q11.sql.out
                    |       |-- q12.sql.err
                    |       |-- q12.sql.out
                    |       |-- q13.sql.err
                    |       |-- q13.sql.out
                    |       |-- q14.sql.err
                    |       |-- q14.sql.out
                    |       |-- q15.sql.err
                    |       |-- q15.sql.out
                    |       |-- q16.sql.err
                    |       |-- q16.sql.out
                    |       |-- q17.sql.err
                    |       |-- q17.sql.out
                    |       |-- q18.sql.err
                    |       |-- q18.sql.out
                    |       |-- q19.sql.err
                    |       |-- q19.sql.out
                    |       |-- q1.sql.err
                    |       |-- q1.sql.out
                    |       |-- q20.sql.err
                    |       |-- q20.sql.out
                    |       |-- q21.sql.err
                    |       |-- q21.sql.out
                    |       |-- q22.sql.err
                    |       |-- q22.sql.out
                    |       |-- q2.sql.err
                    |       |-- q2.sql.out
                    |       |-- q3.sql.err
                    |       |-- q3.sql.out
                    |       |-- q4.sql.err
                    |       |-- q4.sql.out
                    |       |-- q5.sql.err
                    |       |-- q5.sql.out
                    |       |-- q6.sql.err
                    |       |-- q6.sql.out
                    |       |-- q7.sql.err
                    |       |-- q7.sql.out
                    |       |-- q8.sql.err
                    |       |-- q8.sql.out
                    |       |-- q9.sql.err
                    |       |-- q9.sql.out
                    |       `-- run.log
                    |-- queries
                    |   |-- ddl
                    |   |   |-- hologres_analyze_tables.sql
                    |   |   `-- hologres_create_tables.sql
                    |   |-- q10.sql
                    |   |-- q11.sql
                    |   |-- q12.sql
                    |   |-- q13.sql
                    |   |-- q14.sql
                    |   |-- q15.sql
                    |   |-- q16.sql
                    |   |-- q17.sql
                    |   |-- q18.sql
                    |   |-- q19.sql
                    |   |-- q1.sql
                    |   |-- q20.sql
                    |   |-- q21.sql
                    |   |-- q22.sql
                    |   |-- q2.sql
                    |   |-- q3.sql
                    |   |-- q4.sql
                    |   |-- q5.sql
                    |   |-- q6.sql
                    |   |-- q7.sql
                    |   |-- q8.sql
                    |   `-- q9.sql
                    |-- run_hologres.sh
                    |-- run_mysql.sh
                    |-- run.sh
                    `-- tpch_tools
                        |-- dbgen
                        |-- qgen
                        `-- resouces
                            |-- dists.dss
                            `-- queries
                                |-- 10.sql
                                |-- 11.sql
                                |-- 12.sql
                                |-- 13.sql
                                |-- 14.sql
                                |-- 15.sql
                                |-- 16.sql
                                |-- 17.sql
                                |-- 18.sql
                                |-- 19.sql
                                |-- 1.sql
                                |-- 20.sql
                                |-- 21.sql
                                |-- 22.sql
                                |-- 2.sql
                                |-- 3.sql
                                |-- 4.sql
                                |-- 5.sql
                                |-- 6.sql
                                |-- 7.sql
                                |-- 8.sql
                                `-- 9.sql
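The console output of bin/run_tpch.sh reports one "finished" line per query. The sketch below extracts those lines into per-query timings in milliseconds so that runs can be compared. It assumes the `Time taken: H:MM:SS, N ms` field means hours, minutes, and seconds plus a millisecond remainder, and that run.log contains lines in the same format as the console output; neither point is stated in the document.

```python
import re
from typing import Dict

# Matches lines such as:
#   Run q10.sql finished. Time taken: 0:00:00, 138 ms
FINISHED_RE = re.compile(
    r"Run (q\d+)\.sql finished\. Time taken: (\d+):(\d+):(\d+), (\d+) ms"
)


def parse_query_times(log_text: str) -> Dict[str, int]:
    """Map each query name (for example 'q10') to its elapsed time in ms."""
    times: Dict[str, int] = {}
    for match in FINISHED_RE.finditer(log_text):
        name = match.group(1)
        hours, minutes, seconds, millis = (int(g) for g in match.groups()[1:])
        # Assumed layout: H:MM:SS plus a separate millisecond remainder.
        times[name] = ((hours * 60 + minutes) * 60 + seconds) * 1000 + millis
    return times


if __name__ == "__main__":
    sample = (
        "[info] 2024-06-28 14:46:10.088 | Run q10.sql finished. Time taken: 0:00:00, 138 ms\n"
        "[info] 2024-06-28 14:46:10.396 | Run q11.sql finished. Time taken: 0:00:00, 154 ms\n"
    )
    result = parse_query_times(sample)
    for query, ms in sorted(result.items()):
        print(f"{query}: {ms} ms")
    print(f"total: {sum(result.values())} ms")
```

To apply it to a real run, read the text of the log file (for example `<work_dir>/logs/run.log`, if it uses this format) and pass it to `parse_query_times`.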

Key/Value point query scenario test

For the Key/Value point query test, you can continue to use the hologres_tpch database and the orders table created in the OLAP query test. The steps are as follows:

Create a table

The Key/Value point query scenario uses a row-oriented table, so the orders table from the OLAP query test cannot be used directly and a new table is required. Connect to Hologres with the PSQL client and run the following statements to create the orders_row table.

Note: For details about connecting to Hologres with the PSQL client, see Connect to Hologres and develop.

    DROP TABLE IF EXISTS public.orders_row;

    BEGIN;
    CREATE TABLE public.orders_row(
        O_ORDERKEY       BIGINT NOT NULL PRIMARY KEY
        ,O_CUSTKEY       INT NOT NULL
        ,O_ORDERSTATUS   TEXT NOT NULL
        ,O_TOTALPRICE    DECIMAL(15,2) NOT NULL
        ,O_ORDERDATE     TIMESTAMPTZ NOT NULL
        ,O_ORDERPRIORITY TEXT NOT NULL
        ,O_CLERK         TEXT NOT NULL
        ,O_SHIPPRIORITY  INT NOT NULL
        ,O_COMMENT       TEXT NOT NULL
    );
    CALL SET_TABLE_PROPERTY('public.orders_row', 'orientation', 'row');
    CALL SET_TABLE_PROPERTY('public.orders_row', 'clustering_key', 'o_orderkey');
    CALL SET_TABLE_PROPERTY('public.orders_row', 'distribution_key', 'o_orderkey');
    COMMIT;

Import data

Use the following INSERT INTO statement to import the data of the orders table in the TPC-H dataset into the orders_row table.

Note: Hologres supports Serverless Computing from V2.1.17. For large offline imports, large ETL jobs, and queries over large volumes of foreign table data, Serverless Computing runs the task on additional serverless resources instead of the resources of the instance itself. You do not need to reserve extra compute resources for the instance, which significantly improves instance stability and reduces the likelihood of OOM errors, and you pay only for the individual tasks. For details, see Serverless Computing overview. For usage, see Serverless Computing user guide.

    -- (Optional) Serverless Computing is recommended for large offline imports and ETL jobs
    SET hg_computing_resource = 'serverless';

    INSERT INTO public.orders_row
    SELECT * FROM public.orders;

    -- Reset the setting so that other SQL statements do not use serverless resources unnecessarily.
    RESET hg_computing_resource;

Run queries

Generate the query statements.

The Key/Value point query scenario has two main query modes:

| Query mode | Query statement | Description |
| --- | --- | --- |
| Single-value filter | SELECT column_a ,column_b ,... ,column_x FROM table_x WHERE pk = value_x ; | Filters on a single value: the WHERE clause matches exactly one value. |
| Multi-value filter | SELECT column_a ,column_b ,... ,column_x FROM table_x WHERE pk IN ( value_a, value_b,..., value_x ) ; | Filters on multiple values: the WHERE clause can match several values. |

Use the following script to generate the required SQL statements.

    rm -rf kv_query
    mkdir kv_query
    cd kv_query
    echo "
    \set column_values random(1,99999999)
    select O_ORDERKEY,O_CUSTKEY,O_ORDERSTATUS,O_TOTALPRICE,O_ORDERDATE,O_ORDERPRIORITY,O_CLERK,O_SHIPPRIORITY,O_COMMENT from public.orders_row WHERE o_orderkey =:column_values;
    " >> kv_query_single.sql
    echo "
    \set column_values1 random(1,99999999)
    \set column_values2 random(1,99999999)
    \set column_values3 random(1,99999999)
    \set column_values4 random(1,99999999)
    \set column_values5 random(1,99999999)
    \set column_values6 random(1,99999999)
    \set column_values7 random(1,99999999)
    \set column_values8 random(1,99999999)
    \set column_values9 random(1,99999999)
    select O_ORDERKEY,O_CUSTKEY,O_ORDERSTATUS,O_TOTALPRICE,O_ORDERDATE,O_ORDERPRIORITY,O_CLERK,O_SHIPPRIORITY,O_COMMENT from public.orders_row WHERE o_orderkey in(:column_values1,:column_values2,:column_values3,:column_values4,:column_values5,:column_values6,:column_values7,:column_values8,:column_values9);
    " >> kv_query_in.sql

After the script finishes, two SQL files are generated:

kv_query_single.sql contains the SQL statement for the single-value filter.

kv_query_in.sql contains the SQL statement for the multi-value filter. As written, the script generates a statement that filters on 9 random values.
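The multi-value script defines nine `\set` variables by hand. If you want to vary the number of keys in the IN list, a small generator keeps the variable definitions and the placeholders in sync. This is a sketch only: the function name `build_in_query_script` is hypothetical, while the column list, table name, and key range are copied from the script above.

```python
COLUMNS = (
    "O_ORDERKEY,O_CUSTKEY,O_ORDERSTATUS,O_TOTALPRICE,O_ORDERDATE,"
    "O_ORDERPRIORITY,O_CLERK,O_SHIPPRIORITY,O_COMMENT"
)


def build_in_query_script(n_values: int, max_key: int = 99999999) -> str:
    """Build a pgbench script that filters public.orders_row on n random keys."""
    if n_values < 1:
        raise ValueError("n_values must be >= 1")
    indexes = range(1, n_values + 1)
    # One pgbench \set meta-command per random key.
    set_lines = [f"\\set column_values{i} random(1,{max_key})" for i in indexes]
    placeholders = ",".join(f":column_values{i}" for i in indexes)
    query = (
        f"select {COLUMNS} from public.orders_row "
        f"WHERE o_orderkey in({placeholders});"
    )
    return "\n".join(set_lines + [query]) + "\n"


if __name__ == "__main__":
    # Write a 9-value script, matching the hand-written kv_query_in.sql.
    with open("kv_query_in.sql", "w", encoding="utf-8") as handle:
        handle.write(build_in_query_script(9))
```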

To collect query statistics, use the pgbench tool. Install it with the following command.

    yum install postgresql-contrib -y

To avoid compatibility problems that could affect the test, we recommend pgbench version 13 or later. If pgbench is already installed locally, make sure that its version is 9.6 or later. Run the following command to check the installed version.

    pgbench --version

Run the test statements.

Note: Run the following commands in the directory where the query statements were generated.

For the single-value filter scenario, run the stress test with pgbench.

    PGUSER=<AccessKey_ID> PGPASSWORD=<AccessKey_Secret> PGDATABASE=<Database> pgbench -h <Endpoint> -p <Port> -c <Client_Num> -T <Query_Seconds> -M prepared -n -f kv_query_single.sql

For the multi-value filter scenario, run the stress test with pgbench.

    PGUSER=<AccessKey_ID> PGPASSWORD=<AccessKey_Secret> PGDATABASE=<Database> pgbench -h <Endpoint> -p <Port> -c <Client_Num> -T <Query_Seconds> -M prepared -n -f kv_query_in.sql

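The parameters are described in the following table.

| Parameter | Description |
| --- | --- |
| AccessKey_ID | The AccessKey ID of the current Alibaba Cloud account. Click AccessKey Management to obtain the AccessKey ID. |
| AccessKey_Secret | The AccessKey Secret of the current Alibaba Cloud account. Click AccessKey Management to obtain the AccessKey Secret. |
| Database | The name of the Hologres database. After a Hologres instance is activated, the system automatically creates the postgres database. You can connect to Hologres with the postgres database, but it is allocated few resources, so we recommend that you create a new database for real workloads. For details, see Create a database. |
| Endpoint | The network address (endpoint) of the Hologres instance. Open the instance details page in the Hologres management console and copy the address from the Network Information section. |
| Port | The network port of the Hologres instance. Open the instance details page in the Hologres management console to obtain the port. |
| Client_Num | The number of clients, that is, the concurrency. For example, this test measures only query performance and not concurrency, so the concurrency is set to 1. |
| Query_Seconds | The duration, in seconds, of the stress test that each client runs for each query. In this article, the value is 300. |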
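When the same pgbench run is repeated for several scripts, it can be convenient to assemble the command from the parameters in the table above. The following sketch only builds the argument list and the environment; the helper names are hypothetical, and the endpoint, port, and database in the example are placeholders rather than values from this document. The pgbench options (`-h`, `-p`, `-c`, `-T`, `-M prepared`, `-n`, `-f`) are the ones used in the commands above.

```python
import os
import shlex
from typing import Dict, List


def build_pgbench_command(endpoint: str, port: int, client_num: int,
                          query_seconds: int, script: str) -> List[str]:
    """Return the pgbench argument list used in this test."""
    return [
        "pgbench",
        "-h", endpoint,
        "-p", str(port),
        "-c", str(client_num),     # number of concurrent clients
        "-T", str(query_seconds),  # test duration in seconds
        "-M", "prepared",          # use prepared statements
        "-n",                      # skip vacuum before the test
        "-f", script,
    ]


def build_pgbench_env(access_key_id: str, access_key_secret: str,
                      database: str) -> Dict[str, str]:
    """Return a copy of the environment with the connection variables set."""
    env = dict(os.environ)
    env.update(PGUSER=access_key_id, PGPASSWORD=access_key_secret,
               PGDATABASE=database)
    return env


if __name__ == "__main__":
    # Placeholder endpoint and port; replace with the values of your instance.
    cmd = build_pgbench_command("<Endpoint>", 80, 1, 300, "kv_query_single.sql")
    print(shlex.join(cmd))
    # To execute: subprocess.run(cmd, env=build_pgbench_env(...), check=True)
```

Passing the credentials through the environment, as the original commands do, keeps them out of the printed command line.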

Data update scenario

This scenario tests the data update performance of the OLAP engine on a table with a primary key, where the entire row is updated when a primary key conflict occurs.

Generate the query

    echo "
    \set O_ORDERKEY random(1,99999999)
    INSERT INTO public.orders_row(o_orderkey,o_custkey,o_orderstatus,o_totalprice,o_orderdate,o_orderpriority,o_clerk,o_shippriority,o_comment) VALUES (:O_ORDERKEY,1,'demo',1.1,'2021-01-01','demo','demo',1,'demo') on conflict(o_orderkey) do update set (o_orderkey,o_custkey,o_orderstatus,o_totalprice,o_orderdate,o_orderpriority,o_clerk,o_shippriority,o_comment)= ROW(excluded.*);
    " > /root/insert_on_conflict.sql

Insert and update. For more information about the parameters, see Parameter description.

    PGUSER=<AccessKey_ID> PGPASSWORD=<AccessKey_Secret> PGDATABASE=<Database> pgbench -h <Endpoint> -p <Port> -c <Client_Num> -T <Query_Seconds> -M prepared -n -f /root/insert_on_conflict.sql

Example results

    transaction type: Custom query
    scaling factor: 1
    query mode: prepared
    number of clients: 249
    number of threads: 1
    duration: 60 s
    number of transactions actually processed: 1923038
    tps = 32005.850214 (including connections establishing)
    tps = 36403.145722 (excluding connections establishing)
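To record results from several runs, the pgbench summary can be reduced to a few numbers. The sketch below parses the fields shown in the example output. It targets this output layout only; other pgbench versions print the tps lines differently, so treat the regular expressions as assumptions tied to the sample.

```python
import re
from typing import Dict


def parse_pgbench_summary(text: str) -> Dict[str, float]:
    """Extract clients, duration, transactions, and tps from pgbench output."""
    summary: Dict[str, float] = {}
    patterns = {
        "clients": r"number of clients: (\d+)",
        "duration_s": r"duration: (\d+) s",
        "transactions": r"number of transactions actually processed: (\d+)",
    }
    for key, pattern in patterns.items():
        match = re.search(pattern, text)
        if match:
            summary[key] = float(match.group(1))
    # Two tps lines: including and excluding connection establishment.
    for value, kind in re.findall(r"tps = ([\d.]+) \((including|excluding)", text):
        summary[f"tps_{kind}"] = float(value)
    return summary


if __name__ == "__main__":
    sample = """transaction type: Custom query
scaling factor: 1
query mode: prepared
number of clients: 249
number of threads: 1
duration: 60 s
number of transactions actually processed: 1923038
tps = 32005.850214 (including connections establishing)
tps = 36403.145722 (excluding connections establishing)
"""
    stats = parse_pgbench_summary(sample)
    print(stats)
    # Cross-check: transactions divided by duration should be close to the tps.
    print(stats["transactions"] / stats["duration_s"])
```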

Flink real-time write scenario

This scenario tests real-time data write capability.

Hologres DDL

The Hologres table in this scenario has 10 columns, and the key column is the primary key. The Hologres DDL is as follows.

    DROP TABLE IF EXISTS flink_insert;

    BEGIN ;
    CREATE TABLE IF NOT EXISTS flink_insert(
        key INT PRIMARY KEY
        ,value1 TEXT
        ,value2 TEXT
        ,value3 TEXT
        ,value4 TEXT
        ,value5 TEXT
        ,value6 TEXT
        ,value7 TEXT
        ,value8 TEXT
        ,value9 TEXT
    );
    CALL SET_TABLE_PROPERTY('flink_insert', 'orientation', 'row');
    CALL SET_TABLE_PROPERTY('flink_insert', 'clustering_key', 'key');
    CALL SET_TABLE_PROPERTY('flink_insert', 'distribution_key', 'key');
    COMMIT;

Flink job script

Use the random data generator built into fully managed Flink to write data to Hologres. When a primary key conflict occurs, the entire row is updated. Each row is larger than 512 B. The Flink job script is as follows.

    CREATE TEMPORARY TABLE flink_case_1_source (
        key INT,
        value1 VARCHAR,
        value2 VARCHAR,
        value3 VARCHAR,
        value4 VARCHAR,
        value5 VARCHAR,
        value6 VARCHAR,
        value7 VARCHAR,
        value8 VARCHAR,
        value9 VARCHAR
    )
    WITH (
        'connector' = 'datagen',
        -- optional options --
        'rows-per-second' = '1000000000',
        'fields.key.min'='1',
        'fields.key.max'='2147483647',
        'fields.value1.length' = '57',
        'fields.value2.length' = '57',
        'fields.value3.length' = '57',
        'fields.value4.length' = '57',
        'fields.value5.length' = '57',
        'fields.value6.length' = '57',
        'fields.value7.length' = '57',
        'fields.value8.length' = '57',
        'fields.value9.length' = '57'
    );

    -- Create the Hologres result table
    CREATE TEMPORARY TABLE flink_case_1_sink (
        key INT,
        value1 VARCHAR,
        value2 VARCHAR,
        value3 VARCHAR,
        value4 VARCHAR,
        value5 VARCHAR,
        value6 VARCHAR,
        value7 VARCHAR,
        value8 VARCHAR,
        value9 VARCHAR
    )
    WITH (
        'connector' = 'hologres',
        'dbname'='<yourDbname>', -- The name of the Hologres database.
        'tablename'='<yourTablename>', -- The name of the Hologres table that receives the data.
        'username'='<yourUsername>', -- The AccessKey ID of the current Alibaba Cloud account.
        'password'='<yourPassword>', -- The AccessKey Secret of the current Alibaba Cloud account.
        'endpoint'='<yourEndpoint>', -- The VPC endpoint of the current Hologres instance.
        'connectionSize' = '10', -- Default: 3
        'jdbcWriteBatchSize' = '1024', -- Default: 256
        'jdbcWriteBatchByteSize' = '2147483647', -- Default: 20971520
        'mutatetype'='insertorreplace' -- Insert, or replace the entire existing row
    );

    -- Perform the ETL operation and write the data
    insert into flink_case_1_sink
    select key, value1, value2, value3, value4, value5, value6, value7, value8, value9
    from flink_case_1_source ;

For parameter descriptions, see Hologres result table.
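The statement that each row exceeds 512 B can be checked against the datagen settings in the job script: nine string fields of length 57 plus one INT key. The arithmetic below assumes one byte per generated character and a 4-byte INT; the actual on-wire or stored size in Hologres may differ.

```python
def datagen_row_bytes(text_fields: int = 9, text_length: int = 57,
                      int_bytes: int = 4) -> int:
    """Approximate payload size of one generated row, in bytes.

    Assumes single-byte characters in the generated strings and a
    4-byte INT key; encoding and storage overhead are ignored.
    """
    return int_bytes + text_fields * text_length


if __name__ == "__main__":
    size = datagen_row_bytes()
    print(f"approximate row size: {size} bytes")
    print(f"exceeds 512 bytes: {size > 512}")
```

With the settings in the script this gives 4 + 9 × 57 = 517 bytes, which is consistent with the "larger than 512 B" statement.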

Example results

You can view the RPS value on the monitoring page of the Hologres management console.

TPC-H 22 query statements

The 22 TPC-H query statements are listed below.

Q1

    select l_returnflag, l_linestatus, sum(l_quantity) as sum_qty, sum(l_extendedprice) as sum_base_price, sum(l_extendedprice * (1 - l_discount)) as sum_disc_price, sum(l_extendedprice * (1 - l_discount) * (1 + l_tax)) as sum_charge, avg(l_quantity) as avg_qty, avg(l_extendedprice) as avg_price, avg(l_discount) as avg_disc, count(*) as count_order
    from lineitem
    where l_shipdate <= date '1998-12-01' - interval '120' day
    group by l_returnflag, l_linestatus
    order by l_returnflag, l_linestatus;

Q2

    select s_acctbal, s_name, n_name, p_partkey, p_mfgr, s_address, s_phone, s_comment
    from part, supplier, partsupp, nation, region
    where p_partkey = ps_partkey and s_suppkey = ps_suppkey and p_size = 48 and p_type like '%STEEL' and s_nationkey = n_nationkey and n_regionkey = r_regionkey and r_name = 'EUROPE'
      and ps_supplycost = ( select min(ps_supplycost) from partsupp, supplier, nation, region where p_partkey = ps_partkey and s_suppkey = ps_suppkey and s_nationkey = n_nationkey and n_regionkey = r_regionkey and r_name = 'EUROPE' )
    order by s_acctbal desc, n_name, s_name, p_partkey
    limit 100;

Q3

    select l_orderkey, sum(l_extendedprice * (1 - l_discount)) as revenue, o_orderdate, o_shippriority
    from customer, orders, lineitem
    where c_mktsegment = 'MACHINERY' and c_custkey = o_custkey and l_orderkey = o_orderkey and o_orderdate < date '1995-03-23' and l_shipdate > date '1995-03-23'
    group by l_orderkey, o_orderdate, o_shippriority
    order by revenue desc, o_orderdate
    limit 10;

Q4

    select o_orderpriority, count(*) as order_count
    from orders
    where o_orderdate >= date '1996-07-01' and o_orderdate < date '1996-07-01' + interval '3' month
      and exists ( select * from lineitem where l_orderkey = o_orderkey and l_commitdate < l_receiptdate )
    group by o_orderpriority
    order by o_orderpriority;

Q5

    select n_name, sum(l_extendedprice * (1 - l_discount)) as revenue
    from customer, orders, lineitem, supplier, nation, region
    where c_custkey = o_custkey and l_orderkey = o_orderkey and l_suppkey = s_suppkey and c_nationkey = s_nationkey and s_nationkey = n_nationkey and n_regionkey = r_regionkey and r_name = 'EUROPE' and o_orderdate >= date '1996-01-01' and o_orderdate < date '1996-01-01' + interval '1' year
    group by n_name
    order by revenue desc;

Q6

    select sum(l_extendedprice * l_discount) as revenue
    from lineitem
    where l_shipdate >= date '1996-01-01' and l_shipdate < date '1996-01-01' + interval '1' year and l_discount between 0.02 - 0.01 and 0.02 + 0.01 and l_quantity < 24;

Q7

    select supp_nation, cust_nation, l_year, sum(volume) as revenue
    from (
        select n1.n_name as supp_nation, n2.n_name as cust_nation, extract(year from l_shipdate) as l_year, l_extendedprice * (1 - l_discount) as volume
        from supplier, lineitem, orders, customer, nation n1, nation n2
        where s_suppkey = l_suppkey and o_orderkey = l_orderkey and c_custkey = o_custkey and s_nationkey = n1.n_nationkey and c_nationkey = n2.n_nationkey
          and ( (n1.n_name = 'CANADA' and n2.n_name = 'BRAZIL') or (n1.n_name = 'BRAZIL' and n2.n_name = 'CANADA') )
          and l_shipdate between date '1995-01-01' and date '1996-12-31'
    ) as shipping
    group by supp_nation, cust_nation, l_year
    order by supp_nation, cust_nation, l_year;

Q8

    select o_year, sum(case when nation = 'BRAZIL' then volume else 0 end) / sum(volume) as mkt_share
    from (
        select extract(year from o_orderdate) as o_year, l_extendedprice * (1 - l_discount) as volume, n2.n_name as nation
        from part, supplier, lineitem, orders, customer, nation n1, nation n2, region
        where p_partkey = l_partkey and s_suppkey = l_suppkey and l_orderkey = o_orderkey and o_custkey = c_custkey and c_nationkey = n1.n_nationkey and n1.n_regionkey = r_regionkey and r_name = 'AMERICA' and s_nationkey = n2.n_nationkey and o_orderdate between date '1995-01-01' and date '1996-12-31' and p_type = 'LARGE ANODIZED COPPER'
    ) as all_nations
    group by o_year
    order by o_year;

Q9

    select nation, o_year, sum(amount) as sum_profit
    from (
        select n_name as nation, extract(year from o_orderdate) as o_year, l_extendedprice * (1 - l_discount) - ps_supplycost * l_quantity as amount
        from part, supplier, lineitem, partsupp, orders, nation
        where s_suppkey = l_suppkey and ps_suppkey = l_suppkey and ps_partkey = l_partkey and p_partkey = l_partkey and o_orderkey = l_orderkey and s_nationkey = n_nationkey and p_name like '%maroon%'
    ) as profit
    group by nation, o_year
    order by nation, o_year desc;

Q10

    select c_custkey, c_name, sum(l_extendedprice * (1 - l_discount)) as revenue, c_acctbal, n_name, c_address, c_phone, c_comment
    from customer, orders, lineitem, nation
    where c_custkey = o_custkey and l_orderkey = o_orderkey and o_orderdate >= date '1993-02-01' and o_orderdate < date '1993-02-01' + interval '3' month and l_returnflag = 'R' and c_nationkey = n_nationkey
    group by c_custkey, c_name, c_acctbal, c_phone, n_name, c_address, c_comment
    order by revenue desc
    limit 20;

Q11

    select ps_partkey, sum(ps_supplycost * ps_availqty) as value
    from partsupp, supplier, nation
    where ps_suppkey = s_suppkey and s_nationkey = n_nationkey and n_name = 'EGYPT'
    group by ps_partkey
    having sum(ps_supplycost * ps_availqty) > ( select sum(ps_supplycost * ps_availqty) * 0.0001000000 from partsupp, supplier, nation where ps_suppkey = s_suppkey and s_nationkey = n_nationkey and n_name = 'EGYPT' )
    order by value desc;

Q12

    select l_shipmode, sum(case when o_orderpriority = '1-URGENT' or o_orderpriority = '2-HIGH' then 1 else 0 end) as high_line_count, sum(case when o_orderpriority <> '1-URGENT' and o_orderpriority <> '2-HIGH' then 1 else 0 end) as low_line_count
    from orders, lineitem
    where o_orderkey = l_orderkey and l_shipmode in ('FOB', 'AIR') and l_commitdate < l_receiptdate and l_shipdate < l_commitdate and l_receiptdate >= date '1997-01-01' and l_receiptdate < date '1997-01-01' + interval '1' year
    group by l_shipmode
    order by l_shipmode;

Q13

    select c_count, count(*) as custdist
    from (
        select c_custkey, count(o_orderkey) as c_count
        from customer left outer join orders on c_custkey = o_custkey and o_comment not like '%special%deposits%'
        group by c_custkey
    ) c_orders
    group by c_count
    order by custdist desc, c_count desc;

Q14

    select 100.00 * sum(case when p_type like 'PROMO%' then l_extendedprice * (1 - l_discount) else 0 end) / sum(l_extendedprice * (1 - l_discount)) as promo_revenue
    from lineitem, part
    where l_partkey = p_partkey and l_shipdate >= date '1997-06-01' and l_shipdate < date '1997-06-01' + interval '1' month;

Q15

    with revenue0(SUPPLIER_NO, TOTAL_REVENUE) as (
        select l_suppkey, sum(l_extendedprice * (1 - l_discount))
        from lineitem
        where l_shipdate >= date '1995-02-01' and l_shipdate < date '1995-02-01' + interval '3' month
        group by l_suppkey
    )
    select s_suppkey, s_name, s_address, s_phone, total_revenue
    from supplier, revenue0
    where s_suppkey = supplier_no and total_revenue = ( select max(total_revenue) from revenue0 )
    order by s_suppkey;

Q16

    select p_brand, p_type, p_size, count(distinct ps_suppkey) as supplier_cnt
    from partsupp, part
    where p_partkey = ps_partkey and p_brand <> 'Brand#45' and p_type not like 'SMALL ANODIZED%' and p_size in (47, 15, 37, 30, 46, 16, 18, 6)
      and ps_suppkey not in ( select s_suppkey from supplier where s_comment like '%Customer%Complaints%' )
    group by p_brand, p_type, p_size
    order by supplier_cnt desc, p_brand, p_type, p_size;

Q17

    select sum(l_extendedprice) / 7.0 as avg_yearly
    from lineitem, part
    where p_partkey = l_partkey and p_brand = 'Brand#51' and p_container = 'WRAP PACK'
      and l_quantity < ( select 0.2 * avg(l_quantity) from lineitem where l_partkey = p_partkey );

Q18

    select c_name, c_custkey, o_orderkey, o_orderdate, o_totalprice, sum(l_quantity)
    from customer, orders, lineitem
    where o_orderkey in ( select l_orderkey from lineitem group by l_orderkey having sum(l_quantity) > 312 )
      and c_custkey = o_custkey and o_orderkey = l_orderkey
    group by c_name, c_custkey, o_orderkey, o_orderdate, o_totalprice
    order by o_totalprice desc, o_orderdate
    limit 100;

Q19

    select sum(l_extendedprice* (1 - l_discount)) as revenue
    from lineitem, part
    where ( p_partkey = l_partkey and p_brand = 'Brand#52' and p_container in ('SM CASE', 'SM BOX', 'SM PACK', 'SM PKG') and l_quantity >= 3 and l_quantity <= 3 + 10 and p_size between 1 and 5 and l_shipmode in ('AIR', 'AIR REG') and l_shipinstruct = 'DELIVER IN PERSON' )
       or ( p_partkey = l_partkey and p_brand = 'Brand#43' and p_container in ('MED BAG', 'MED BOX', 'MED PKG', 'MED PACK') and l_quantity >= 12 and l_quantity <= 12 + 10 and p_size between 1 and 10 and l_shipmode in ('AIR', 'AIR REG') and l_shipinstruct = 'DELIVER IN PERSON' )
       or ( p_partkey = l_partkey and p_brand = 'Brand#52' and p_container in ('LG CASE', 'LG BOX', 'LG PACK', 'LG PKG') and l_quantity >= 21 and l_quantity <= 21 + 10 and p_size between 1 and 15 and l_shipmode in ('AIR', 'AIR REG') and l_shipinstruct = 'DELIVER IN PERSON' );

Q20

    select s_name, s_address
    from supplier, nation
    where s_suppkey in (
            select ps_suppkey
            from partsupp
            where ps_partkey in ( select p_partkey from part where p_name like 'drab%' )
              and ps_availqty > ( select 0.5 * sum(l_quantity) from lineitem where l_partkey = ps_partkey and l_suppkey = ps_suppkey and l_shipdate >= date '1996-01-01' and l_shipdate < date '1996-01-01' + interval '1' year )
          )
      and s_nationkey = n_nationkey and n_name = 'KENYA'
    order by s_name;

Q21

    select s_name, count(*) as numwait
    from supplier, lineitem l1, orders, nation
    where s_suppkey = l1.l_suppkey and o_orderkey = l1.l_orderkey and o_orderstatus = 'F' and l1.l_receiptdate > l1.l_commitdate
      and exists ( select * from lineitem l2 where l2.l_orderkey = l1.l_orderkey and l2.l_suppkey <> l1.l_suppkey )
      and not exists ( select * from lineitem l3 where l3.l_orderkey = l1.l_orderkey and l3.l_suppkey <> l1.l_suppkey and l3.l_receiptdate > l3.l_commitdate )
      and s_nationkey = n_nationkey and n_name = 'PERU'
    group by s_name
    order by numwait desc, s_name
    limit 100;

Q22

    select cntrycode, count(*) as numcust, sum(c_acctbal) as totacctbal
    from (
        select substring(c_phone from 1 for 2) as cntrycode, c_acctbal
        from customer
        where substring(c_phone from 1 for 2) in ('24', '32', '17', '18', '12', '14', '22')
          and c_acctbal > ( select avg(c_acctbal) from customer where c_acctbal > 0.00 and substring(c_phone from 1 for 2) in ('24', '32', '17', '18', '12', '14', '22') )
          and not exists ( select * from orders where o_custkey = c_custkey )
    ) as custsale
    group by cntrycode
    order by cntrycode;