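When you use an E-MapReduce (EMR) cluster built on local-disk instance types (the i series and d series), you may receive notifications of local disk damage events. This topic describes how to replace a damaged local disk in a cluster.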

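Usage notes

We recommend resolving this type of issue by removing the abnormal node and adding a new node, which avoids a prolonged impact on your business.

After a disk is replaced, the data on that disk is lost. Make sure that the data on the disk has enough replicas and is backed up in time.

The whole replacement involves stopping services, unmounting the disk, mounting the new disk, and restarting services. A disk replacement is usually completed within five working days. Before you follow this topic, evaluate whether the disk usage of the services and the cluster load can sustain your current business after the services are stopped.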
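As a rough aid for the evaluation above, the following sketch reports how full a filesystem is, so that you can judge whether the remaining disks have headroom. The function names, the example paths, and the 70% threshold are illustrative assumptions, not part of EMR.

```shell
# Print the used-space percentage (integer, without the % sign)
# of the filesystem that holds the given path.
disk_usage_percent() {
  df -P "$1" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# Return 0 if the filesystem holding PATH ($1) is less than LIMIT ($2) percent full.
usage_below() {
  used=$(disk_usage_percent "$1") || return 1
  [ -n "$used" ] && [ "$used" -lt "$2" ]
}

# Example (hypothetical paths and threshold):
# for d in /mnt/disk1 /mnt/disk2 /mnt/disk4; do
#   usage_below "$d" 70 || echo "$d is at or above 70% used"
# done
```

This only looks at disk usage on one node. Cluster load and replica health still need to be checked in the EMR console or with the tools of each service.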

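Procedure

You can log on to the ECS console to view the event details, including the instance ID, status, damaged disk ID, event progress, and related operations.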

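Step 1: Obtain information about the damaged disk

Log on to the node that hosts the damaged disk over SSH. For details, see Log on to a cluster.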

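Run the following command to view block device information.

    lsblk

Information similar to the following is returned.

    NAME   MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    vdd    254:48   0  5.4T  0 disk /mnt/disk3
    vdb    254:16   0  5.4T  0 disk /mnt/disk1
    vde    254:64   0  5.4T  0 disk /mnt/disk4
    vdc    254:32   0  5.4T  0 disk /mnt/disk2
    vda    254:0    0  120G  0 disk
    └─vda1 254:1    0  120G  0 part /

Run the following command to view disk information.

    sudo fdisk -l

Information similar to the following is returned.

    Disk /dev/vdd: 5905.6 GB, 5905580032000 bytes, 11534336000 sectors
    Units = sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Based on the output of the previous two commands, record the device name $device_name and the mount point $mount_path. For example, if the device in the damaged-disk event is vdd, the device name is /dev/vdd and the mount point is /mnt/disk3.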
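The lookup above can also be scripted. The following is a minimal sketch that assumes the default lsblk column layout shown in this topic (NAME first, TYPE sixth, MOUNTPOINT seventh). The helper name mount_point_of is hypothetical.

```shell
# Read lsblk-style output on stdin and print the mount point
# of the whole-disk device whose NAME column equals $1.
mount_point_of() {
  awk -v name="$1" '$1 == name && $6 == "disk" { print $7 }'
}

# Example, using the device from this topic:
# device_name=/dev/vdd
# mount_path=$(lsblk | mount_point_of vdd)
# echo "$device_name is mounted at $mount_path"
```

If the damaged disk no longer appears in the lsblk output, the function prints nothing. In that case, fall back to the event details in the ECS console.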

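Step 2: Isolate the damaged local disk

Stop the applications that read from or write to the damaged disk.

In the EMR console, click the cluster that contains the damaged disk. On the Services tab of the cluster, find the EMR services that read from or write to the disk, which usually include storage services such as HDFS, HBase, and Kudu. Then use the stop operation in the section of each target service to stop the service.

You can also run the following command on the node to list all processes that are using the disk, and then stop the listed services in the EMR console.

    sudo fuser -mv $device_name

Run the following command to set application-layer read/write isolation on the local disk.

    sudo chmod 000 $mount_path

Run the following command to unmount the local disk.

    sudo umount $device_name;sudo chmod 000 $mount_path

Important: If you do not unmount the disk, the device name of the local disk changes after the damaged disk is repaired and the isolation is lifted, which may cause applications to read from or write to the wrong disk.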

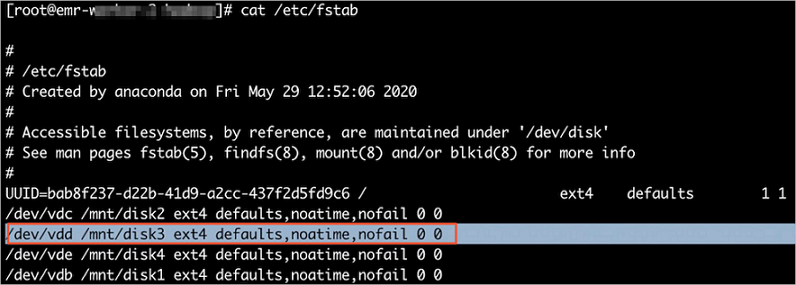
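Because a skipped unmount causes the device-name problem described above, it can be worth confirming that the unmount took effect. The sketch below checks the kernel mount table. The helper name is_mounted and its optional second argument are assumptions made for illustration.

```shell
# Return 0 if MOUNT_PATH ($1) is listed as a mount point in the mount table.
# The table defaults to /proc/mounts; $2 can point to another file for testing.
is_mounted() {
  awk -v p="$1" '$2 == p { found = 1 } END { exit !found }' "${2:-/proc/mounts}"
}

# Example, using the mount point from this topic:
# if is_mounted /mnt/disk3; then
#   echo "/mnt/disk3 is still mounted; stop remaining processes and retry umount"
# fi
```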

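Update the fstab file.

Back up the existing /etc/fstab file.

Delete the record of the corresponding disk from the /etc/fstab file.

For example, the damaged disk in this topic is /dev/vdd, so you need to delete the record for that disk.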
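The backup and deletion can be done in one pass. The following sketch assumes that fstab entries identify disks by device path in the first field, as in this topic. Entries that use UUID= or LABEL= would not match. The function name and the .bak suffix are illustrative choices.

```shell
# Back up FSTAB ($2, default /etc/fstab) to FSTAB.bak, then remove
# every line whose first field equals DEVICE ($1).
remove_fstab_entry() {
  device="$1"
  fstab="${2:-/etc/fstab}"
  cp -p "$fstab" "$fstab.bak" || return 1
  tmp=$(mktemp) || return 1
  # Write the filtered content back in place so the file keeps its owner and mode.
  awk -v dev="$device" '$1 != dev' "$fstab" > "$tmp" && cat "$tmp" > "$fstab"
  status=$?
  rm -f "$tmp"
  return "$status"
}

# Example (run as root):
# remove_fstab_entry /dev/vdd
```

Compare /etc/fstab with /etc/fstab.bak afterwards to confirm that only the intended record was removed.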

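Start the stopped applications.

On the Services tab of the cluster that contains the damaged disk, find the EMR services that were stopped in Step 2, and use the start operation in the section of each target service to start the service.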

Step 3: Replace the disk

Repair the disk in the ECS console. For details, see Isolate damaged local disks (console).

Step 4: Mount the disk

After the disk is repaired, remount it so that the new disk can be used.

Run the following command to normalize the device name.

    device_name=`echo "$device_name" | sed 's/x//1'`

This command normalizes device names such as /dev/xvdk by removing the x, which yields /dev/vdk.
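Note that sed 's/x//1' removes the first x anywhere in the string, so a name such as /dev/vdx would become /dev/vd. The following is a more conservative sketch that rewrites only the /dev/xvd prefix. The function name is hypothetical, and the variant is an alternative to the command in this topic, not a replacement for it.

```shell
# Rewrite a leading /dev/xvd to /dev/vd and leave every other name untouched.
normalize_device_name() {
  printf '%s\n' "$1" | sed 's#^/dev/xvd#/dev/vd#'
}

# Example:
# device_name=$(normalize_device_name "$device_name")
```

For the names used in this topic (/dev/xvdk, /dev/vdd), both forms give the same result.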

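Run the following command to create the mount directory.

    mkdir -p "$mount_path"

Run the following command to mount the disk.

    mount $device_name $mount_path;sudo chmod 755 $mount_path

If the disk fails to mount, perform the following steps:

Run the following command to format (partition) the disk. The two empty lines after the partition number accept the default first and last sectors offered by fdisk.

    fdisk $device_name << EOF
    n
    p
    1
    
    
    wq
    EOF

Run the following command to remount the disk.

    mount $device_name $mount_path;sudo chmod 755 $mount_path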

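Run the following command to update the fstab file.

    echo "$device_name $mount_path $fstype defaults,noatime,nofail 0 0" >> /etc/fstab

Note: You can run the which mkfs.ext4 command to check whether ext4 is available. If it is, set $fstype to ext4. Otherwise, set $fstype to ext3.

Create a script file and choose the script code that matches your cluster type.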
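Before you move on to the service-directory script, the fstab entry from the previous step can be assembled as sketched below. The sketch selects $fstype by the rule in the note and prints the entry so that it can be reviewed before it is appended. The function names are hypothetical, and the check assumes that mkfs.ext4 is on the PATH of the current user (on some systems it is only on the PATH of root).

```shell
# Print ext4 if mkfs.ext4 is found on PATH, otherwise ext3.
pick_fstype() {
  if command -v mkfs.ext4 >/dev/null 2>&1; then
    echo ext4
  else
    echo ext3
  fi
}

# Print an fstab entry for DEVICE ($1), MOUNT_PATH ($2), and FSTYPE ($3),
# using the mount options from this topic.
fstab_line() {
  printf '%s %s %s defaults,noatime,nofail 0 0\n' "$1" "$2" "$3"
}

# Example (run as root after reviewing the printed line):
# fstype=$(pick_fstype)
# fstab_line "$device_name" "$mount_path" "$fstype" >> /etc/fstab
```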

DataLake, DataFlow, OLAP, DataServing, and Custom clusters

    while getopts p: opt
    do
      case "${opt}" in
        p) mount_path=${OPTARG};;
      esac
    done
    sudo mkdir -p $mount_path/flink
    sudo chown flink:hadoop $mount_path/flink
    sudo chmod 775 $mount_path/flink
    sudo mkdir -p $mount_path/hadoop
    sudo chown hadoop:hadoop $mount_path/hadoop
    sudo chmod 755 $mount_path/hadoop
    sudo mkdir -p $mount_path/hdfs
    sudo chown hdfs:hadoop $mount_path/hdfs
    sudo chmod 750 $mount_path/hdfs
    sudo mkdir -p $mount_path/yarn
    sudo chown root:root $mount_path/yarn
    sudo chmod 755 $mount_path/yarn
    sudo mkdir -p $mount_path/impala
    sudo chown impala:hadoop $mount_path/impala
    sudo chmod 755 $mount_path/impala
    sudo mkdir -p $mount_path/jindodata
    sudo chown root:root $mount_path/jindodata
    sudo chmod 755 $mount_path/jindodata
    sudo mkdir -p $mount_path/jindosdk
    sudo chown root:root $mount_path/jindosdk
    sudo chmod 755 $mount_path/jindosdk
    sudo mkdir -p $mount_path/kafka
    sudo chown root:root $mount_path/kafka
    sudo chmod 755 $mount_path/kafka
    sudo mkdir -p $mount_path/kudu
    sudo chown root:root $mount_path/kudu
    sudo chmod 755 $mount_path/kudu
    sudo mkdir -p $mount_path/mapred
    sudo chown root:root $mount_path/mapred
    sudo chmod 755 $mount_path/mapred
    sudo mkdir -p $mount_path/starrocks
    sudo chown root:root $mount_path/starrocks
    sudo chmod 755 $mount_path/starrocks
    sudo mkdir -p $mount_path/clickhouse
    sudo chown clickhouse:clickhouse $mount_path/clickhouse
    sudo chmod 755 $mount_path/clickhouse
    sudo mkdir -p $mount_path/doris
    sudo chown root:root $mount_path/doris
    sudo chmod 755 $mount_path/doris
    sudo mkdir -p $mount_path/log
    sudo chown root:root $mount_path/log
    sudo chmod 755 $mount_path/log
    sudo mkdir -p $mount_path/log/clickhouse
    sudo chown clickhouse:clickhouse $mount_path/log/clickhouse
    sudo chmod 755 $mount_path/log/clickhouse
    sudo mkdir -p $mount_path/log/kafka
    sudo chown kafka:hadoop $mount_path/log/kafka
    sudo chmod 755 $mount_path/log/kafka
    sudo mkdir -p $mount_path/log/kafka-rest-proxy
    sudo chown kafka:hadoop $mount_path/log/kafka-rest-proxy
    sudo chmod 755 $mount_path/log/kafka-rest-proxy
    sudo mkdir -p $mount_path/log/kafka-schema-registry
    sudo chown kafka:hadoop $mount_path/log/kafka-schema-registry
    sudo chmod 755 $mount_path/log/kafka-schema-registry
    sudo mkdir -p $mount_path/log/cruise-control
    sudo chown kafka:hadoop $mount_path/log/cruise-control
    sudo chmod 755 $mount_path/log/cruise-control
    sudo mkdir -p $mount_path/log/doris
    sudo chown doris:doris $mount_path/log/doris
    sudo chmod 755 $mount_path/log/doris
    sudo mkdir -p $mount_path/log/celeborn
    sudo chown hadoop:hadoop $mount_path/log/celeborn
    sudo chmod 755 $mount_path/log/celeborn
    sudo mkdir -p $mount_path/log/flink
    sudo chown flink:hadoop $mount_path/log/flink
    sudo chmod 775 $mount_path/log/flink
    sudo mkdir -p $mount_path/log/flume
    sudo chown root:root $mount_path/log/flume
    sudo chmod 755 $mount_path/log/flume
    sudo mkdir -p $mount_path/log/gmetric
    sudo chown root:root $mount_path/log/gmetric
    sudo chmod 777 $mount_path/log/gmetric
    sudo mkdir -p $mount_path/log/hadoop-hdfs
    sudo chown hdfs:hadoop $mount_path/log/hadoop-hdfs
    sudo chmod 755 $mount_path/log/hadoop-hdfs
    sudo mkdir -p $mount_path/log/hbase
    sudo chown hbase:hadoop $mount_path/log/hbase
    sudo chmod 755 $mount_path/log/hbase
    sudo mkdir -p $mount_path/log/hive
    sudo chown root:root $mount_path/log/hive
    sudo chmod 775 $mount_path/log/hive
    sudo mkdir -p $mount_path/log/impala
    sudo chown impala:hadoop $mount_path/log/impala
    sudo chmod 755 $mount_path/log/impala
    sudo mkdir -p $mount_path/log/jindodata
    sudo chown root:root $mount_path/log/jindodata
    sudo chmod 777 $mount_path/log/jindodata
    sudo mkdir -p $mount_path/log/jindosdk
    sudo chown root:root $mount_path/log/jindosdk
    sudo chmod 777 $mount_path/log/jindosdk
    sudo mkdir -p $mount_path/log/kyuubi
    sudo chown kyuubi:hadoop $mount_path/log/kyuubi
    sudo chmod 755 $mount_path/log/kyuubi
    sudo mkdir -p $mount_path/log/presto
    sudo chown presto:hadoop $mount_path/log/presto
    sudo chmod 755 $mount_path/log/presto
    sudo mkdir -p $mount_path/log/spark
    sudo chown spark:hadoop $mount_path/log/spark
    sudo chmod 755 $mount_path/log/spark
    sudo mkdir -p $mount_path/log/sssd
    sudo chown sssd:sssd $mount_path/log/sssd
    sudo chmod 750 $mount_path/log/sssd
    sudo mkdir -p $mount_path/log/starrocks
    sudo chown starrocks:starrocks $mount_path/log/starrocks
    sudo chmod 755 $mount_path/log/starrocks
    sudo mkdir -p $mount_path/log/taihao_exporter
    sudo chown taihao:taihao $mount_path/log/taihao_exporter
    sudo chmod 755 $mount_path/log/taihao_exporter
    sudo mkdir -p $mount_path/log/trino
    sudo chown trino:hadoop $mount_path/log/trino
    sudo chmod 755 $mount_path/log/trino
    sudo mkdir -p $mount_path/log/yarn
    sudo chown hadoop:hadoop $mount_path/log/yarn
    sudo chmod 755 $mount_path/log/yarn

Data lake (Hadoop) clusters

    while getopts p: opt
    do
      case "${opt}" in
        p) mount_path=${OPTARG};;
      esac
    done
    mkdir -p $mount_path/data
    chown hdfs:hadoop $mount_path/data
    chmod 1777 $mount_path/data
    mkdir -p $mount_path/hadoop
    chown hadoop:hadoop $mount_path/hadoop
    chmod 775 $mount_path/hadoop
    mkdir -p $mount_path/hdfs
    chown hdfs:hadoop $mount_path/hdfs
    chmod 755 $mount_path/hdfs
    mkdir -p $mount_path/yarn
    chown hadoop:hadoop $mount_path/yarn
    chmod 755 $mount_path/yarn
    mkdir -p $mount_path/kudu/master
    chown kudu:hadoop $mount_path/kudu/master
    chmod 755 $mount_path/kudu/master
    mkdir -p $mount_path/kudu/tserver
    chown kudu:hadoop $mount_path/kudu/tserver
    chmod 755 $mount_path/kudu/tserver
    mkdir -p $mount_path/log
    chown hadoop:hadoop $mount_path/log
    chmod 775 $mount_path/log
    mkdir -p $mount_path/log/hadoop-hdfs
    chown hdfs:hadoop $mount_path/log/hadoop-hdfs
    chmod 775 $mount_path/log/hadoop-hdfs
    mkdir -p $mount_path/log/hadoop-yarn
    chown hadoop:hadoop $mount_path/log/hadoop-yarn
    chmod 755 $mount_path/log/hadoop-yarn
    mkdir -p $mount_path/log/hadoop-mapred
    chown hadoop:hadoop $mount_path/log/hadoop-mapred
    chmod 755 $mount_path/log/hadoop-mapred
    mkdir -p $mount_path/log/kudu
    chown kudu:hadoop $mount_path/log/kudu
    chmod 755 $mount_path/log/kudu
    mkdir -p $mount_path/run
    chown hadoop:hadoop $mount_path/run
    chmod 777 $mount_path/run
    mkdir -p $mount_path/tmp
    chown hadoop:hadoop $mount_path/tmp
    chmod 777 $mount_path/tmp

Run the following commands to run the script file, which creates the service directories, and then delete the script. $file_path is the path of the script file.

    chmod +x $file_path
    sudo $file_path -p $mount_path
    rm $file_path

Use the new disk.

In the EMR console, restart the services that run on the node, and check whether the disk works properly.
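One simple way to check the disk from the node itself is to create and remove a probe file under the mount point. This is a minimal sketch. The function name and the probe file name are assumptions, and because the mount point is owned by root with mode 755, the check is meant to be run as root.

```shell
# Return 0 if a temporary file can be created and removed in directory $1.
disk_is_writable() {
  probe=$(mktemp "$1/.probe.XXXXXX" 2>/dev/null) || return 1
  rm -f "$probe"
}

# Example (run as root, with the mount point from this topic):
# disk_is_writable /mnt/disk3 && echo "/mnt/disk3 accepts writes"
```

A successful write only shows that the filesystem is mounted read-write. Service-level health, such as HDFS reporting the volume as available, still needs to be confirmed in the EMR console.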
