安裝和使用Deepytorch Inference

Deepytorch Inference是阿里云自研的AI推理加速器,針對Torch模型,可提供顯著的推理加速能力。本文主要介紹安裝并使用Deepytorch Inference的操作方法,以及推理效果展示。

背景信息

Deepytorch Inference通過調(diào)用deepytorch_inference.compile(model)接口即可實現(xiàn)推理性能加速。使用Deepytorch Inference前,您需要先使用torch.jit.script或者torch.jit.trace接口,將PyTorch模型轉(zhuǎn)換為TorchScript模型,更多信息,請參見PyTorch官方文檔。

本文將為您提供分別使用torch.jit.script和torch.jit.trace接口實現(xiàn)推理性能加速的示例,更多信息,請參見推理性能效果展示。

安裝Deepytorch Inference

安裝Deepytorch Inference前,請確認您已創(chuàng)建配備了NVIDIA GPU卡的GPU實例(即A10、V100或T4 GPU)。

連接GPU實例后,使用pip工具安裝指定版本的torch(例如2.0.1版本)和Deepytorch Inference軟件包,其中,DeepyTorch Inference的軟件包可以通過PyPI進行分發(fā)和安裝,方便開發(fā)者通過簡單的命令行工具安裝和管理軟件。

如需選擇特定版本的Deepytorch Inference軟件包,則需從deepytorch inference中選擇該版本的whl包。例如,需要python 3.8+pytorch 1.13+cuda 11.7版本的Deepytorch Inference軟件包,則直接下載deepytorch_inference(python 3.8+pt 1.13.1+cuda 117)即可。

pip install torch==2.0.1 deepytorch-inference -f https://aiacc-inference-public-v2.oss-cn-hangzhou.aliyuncs.com/aiacc-inference-torch/stable-diffusion/aiacctorch_stable-diffusion.html使用Deepytorch Inference

您僅需要在模型的推理腳本中增加如下代碼,即可啟用Deepytorch Inference的推理優(yōu)化功能,增加的代碼如下所示:

import deepytorch_inference # 導入deepytorch_inference軟件包deepytorch_inference.compile(mod_jit) # 進行編譯

推理性能效果展示

基于不同模型,為您展示使用Deepytorch Inference的推理性能效果,實際的推理加速效果與模型、GPU機型等因素有關,本文以A10機型(例如gn7i、ebmgn7i或ebmgn7ix)為例進行推理測試。關于模型支持情況,請參見模型支持情況。

基于ResNet50模型執(zhí)行推理

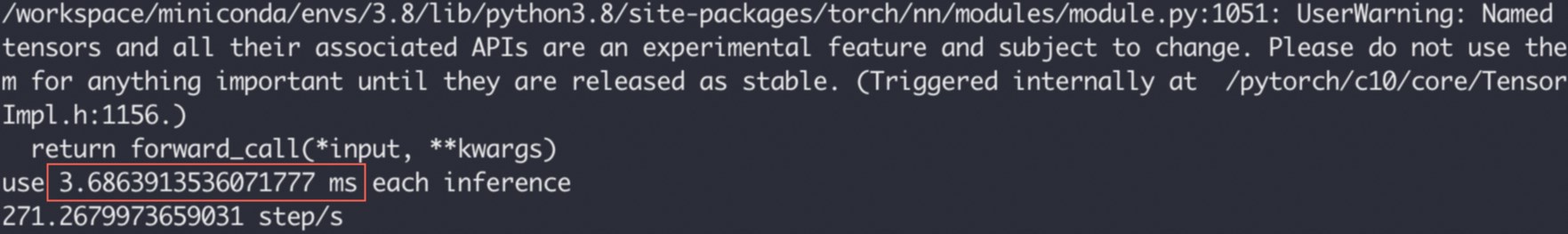

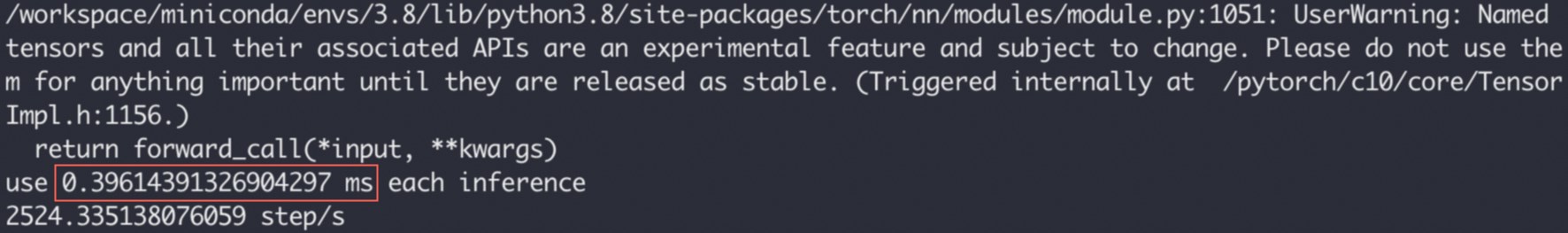

以下示例將基于ResNet50模型,并調(diào)用torch.jit.script接口執(zhí)行推理任務,執(zhí)行1000次后取平均時間,將推理耗時從3.686 ms降低至0.396 ms以內(nèi)。

原始版本

原始代碼如下所示:

import time import torch import torchvision.models as models mod = models.resnet50(pretrained=True).eval() mod_jit = torch.jit.script(mod) mod_jit = mod_jit.cuda() in_t = torch.randn([1, 3, 224, 224]).float().cuda() # Warming up for _ in range(10): mod_jit(in_t) inference_count = 1000 # inference test start = time.time() for _ in range(inference_count): mod_jit(in_t) end = time.time() print(f"use {(end-start)/inference_count*1000} ms each inference") print(f"{inference_count/(end-start)} step/s")執(zhí)行結(jié)果如下,顯示推理耗時大約為3.686 ms。

加速版本

僅需要在原始版本代碼中插入如下代碼即可實現(xiàn)推理性能加速:

import deepytorch_inference

deepytorch_inference.compile(mod_jit)

更新后的代碼如下:

import time import deepytorch_inference # 導入deepytorch_inference軟件包 import torch import torchvision.models as models mod = models.resnet50(pretrained=True).eval() mod_jit = torch.jit.script(mod) mod_jit = mod_jit.cuda() mod_jit = deepytorch_inference.compile(mod_jit) # 進行編譯 in_t = torch.randn([1, 3, 224, 224]).float().cuda() # Warming up for _ in range(10): mod_jit(in_t) inference_count = 1000 # inference test start = time.time() for _ in range(inference_count): mod_jit(in_t) end = time.time() print(f"use {(end-start)/inference_count*1000} ms each inference") print(f"{inference_count/(end-start)} step/s")執(zhí)行結(jié)果如下,顯示推理耗時為0.396 ms。相較于之前的3.686 ms,推理性能有了顯著提升。

基于Bert-Base模型執(zhí)行推理

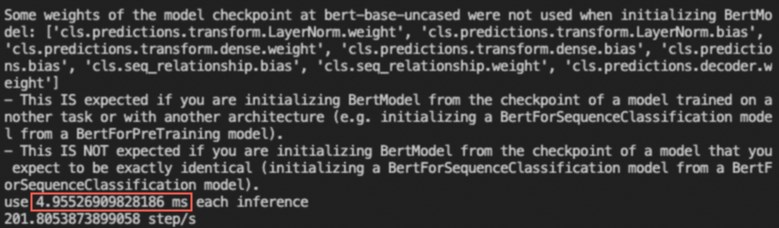

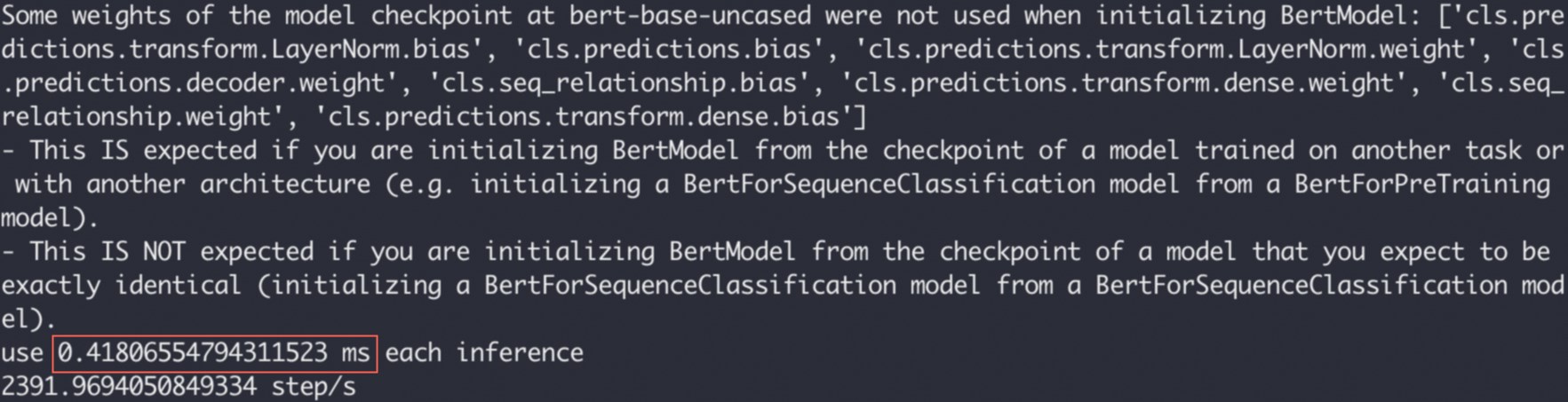

以下示例將基于Bert-Base模型,并調(diào)用torch.jit.trace接口執(zhí)行推理任務,將推理耗時從4.955 ms降低至0.418 ms以內(nèi)。

執(zhí)行以下命令,安裝transformers包。

pip install transformers分別運行原始版本和加速版本的Demo,并查看運行結(jié)果。

原始版本

原始代碼如下所示:

from transformers import BertModel, BertTokenizer, BertConfig import torch import time enc = BertTokenizer.from_pretrained("bert-base-uncased") # Tokenizing input text text = "[CLS] Who was Jim Henson ? [SEP] Jim Henson was a puppeteer [SEP]" tokenized_text = enc.tokenize(text) # Masking one of the input tokens masked_index = 8 tokenized_text[masked_index] = '[MASK]' indexed_tokens = enc.convert_tokens_to_ids(tokenized_text) segments_ids = [1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, ] # Creating a dummy input tokens_tensor = torch.tensor([indexed_tokens]).cuda() segments_tensors = torch.tensor([segments_ids]).cuda() dummy_input = [tokens_tensor, segments_tensors] # Initializing the model with the torchscript flag # Flag set to True even though it is not necessary as this model does not have an LM Head. config = BertConfig(vocab_size_or_config_json_file=32000, hidden_size=768, num_hidden_layers=12, num_attention_heads=12, intermediate_size=3072, torchscript=True) # Instantiating the model model = BertModel(config) # The model needs to be in evaluation mode model.eval() # If you are instantiating the model with `from_pretrained` you can also easily set the TorchScript flag model = BertModel.from_pretrained("bert-base-uncased", torchscript=True) model = model.eval().cuda() # Creating the trace traced_model = torch.jit.trace(model, dummy_input) # Warming up for _ in range(10): all_encoder_layers, pooled_output = traced_model(*dummy_input) inference_count = 1000 # inference test start = time.time() for _ in range(inference_count): traced_model(*dummy_input) end = time.time() print(f"use {(end-start)/inference_count*1000} ms each inference") print(f"{inference_count/(end-start)} step/s")執(zhí)行結(jié)果如下,顯示推理耗時大約為4.955 ms。

加速版本

僅需要在原始版本代碼中插入如下代碼即可實現(xiàn)推理性能加速:

import deepytorch_inference

deepytorch_inference.compile(traced_model)

更新后的代碼如下:

from transformers import BertModel, BertTokenizer, BertConfig import torch import deepytorch_inference # 導入deepytorch-inference軟件包 import time enc = BertTokenizer.from_pretrained("bert-base-uncased") # Tokenizing input text text = "[CLS] Who was Jim Henson ? [SEP] Jim Henson was a puppeteer [SEP]" tokenized_text = enc.tokenize(text) # Masking one of the input tokens masked_index = 8 tokenized_text[masked_index] = '[MASK]' indexed_tokens = enc.convert_tokens_to_ids(tokenized_text) segments_ids = [1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, ] # Creating a dummy input tokens_tensor = torch.tensor([indexed_tokens]).cuda() segments_tensors = torch.tensor([segments_ids]).cuda() dummy_input = [tokens_tensor, segments_tensors] # Initializing the model with the torchscript flag # Flag set to True even though it is not necessary as this model does not have an LM Head. config = BertConfig(vocab_size_or_config_json_file=32000, hidden_size=768, num_hidden_layers=12, num_attention_heads=12, intermediate_size=3072, torchscript=True) # Instantiating the model model = BertModel(config) # The model needs to be in evaluation mode model.eval() # If you are instantiating the model with `from_pretrained` you can also easily set the TorchScript flag model = BertModel.from_pretrained("bert-base-uncased", torchscript=True) model = model.eval().cuda() # Creating the trace traced_model = torch.jit.trace(model, dummy_input) traced_model = deepytorch_inference.compile(traced_model) # 進行編譯 # Warming up for _ in range(10): all_encoder_layers, pooled_output = traced_model(*dummy_input) inference_count = 1000 # inference test start = time.time() for _ in range(inference_count): traced_model(*dummy_input) end = time.time() print(f"use {(end-start)/inference_count*1000} ms each inference") print(f"{inference_count/(end-start)} step/s")執(zhí)行結(jié)果如下,顯示推理耗時為0.418 ms。相較于之前的4.955 ms,推理性能有了顯著提升。

基于ResNet50模型執(zhí)行動態(tài)尺寸推理

在Deepytorch Inference中,您無需關心動態(tài)尺寸的問題,Deepytorch Inference能夠支持不同的輸入尺寸。以下示例基于ResNet50模型,輸入3個不同的長寬尺寸,帶您體驗使用Deepytorch Inference進行推理加速的過程。

import time

import torch

import deepytorch_inference # 導入deepytorch-inference軟件包

import torchvision.models as models

mod = models.resnet50(pretrained=True).eval()

mod_jit = torch.jit.script(mod)

mod_jit = mod_jit.cuda()

mod_jit = deepytorch_inference.compile(mod_jit) # 進行編譯

in_t = torch.randn([1, 3, 224, 224]).float().cuda()

in_2t = torch.randn([1, 3, 448, 448]).float().cuda()

in_3t = torch.randn([16, 3, 640, 640]).float().cuda()

# Warming up

for _ in range(10):

mod_jit(in_t)

mod_jit(in_3t)

inference_count = 1000

# inference test

start = time.time()

for _ in range(inference_count):

mod_jit(in_t)

mod_jit(in_2t)

mod_jit(in_3t)

end = time.time()

print(f"use {(end-start)/(inference_count*3)*1000} ms each inference")

print(f"{inference_count/(end-start)} step/s")

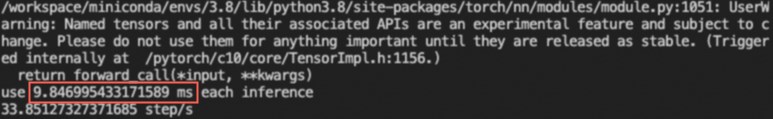

執(zhí)行結(jié)果如下,顯示推理耗時大約為9.85 ms。

為了縮短模型編譯的時間,應在warming up階段推理最大及最小的tensor尺寸,避免在執(zhí)行時重復編譯。例如,已知推理尺寸在1×3×224×224至16×3×640×640之間時,應在warming up時推理這兩個尺寸。