Use OSS Connector for AI/ML to run data training tasks efficiently

This topic describes how to get started with OSS Connector for AI/ML so that it can efficiently support the creation and training of data models.

Deployment environment

Operating system: Linux x86-64

glibc: >=2.17

Python: 3.8-3.12

PyTorch: >=2.0

The OSS Checkpoint feature requires a Linux kernel that supports userfaultfd.

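Note: Taking Ubuntu as an example, you can run the following command to check whether the Linux kernel supports userfaultfd:

sudo grep CONFIG_USERFAULTFD /boot/config-$(uname -r)

If the output contains CONFIG_USERFAULTFD=y, the kernel supports userfaultfd. If the output contains CONFIG_USERFAULTFD=n, the kernel does not support it and the OSS Checkpoint feature cannot be used.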
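The check in the note can also be scripted, for example as part of an environment validation step. The following is a minimal sketch, assuming the kernel build configuration is readable at /boot/config-<release> as on Ubuntu. The helper name userfaultfd_enabled is hypothetical and is not part of OSS Connector for AI/ML.

```python
import platform


def userfaultfd_enabled(config_text: str) -> bool:
    """Return True if the kernel config text contains CONFIG_USERFAULTFD=y."""
    for line in config_text.splitlines():
        if line.strip() == "CONFIG_USERFAULTFD=y":
            return True
    return False


if __name__ == "__main__":
    # Assumed location of the kernel config (Ubuntu convention).
    # platform.release() returns the same string as `uname -r`.
    config_path = f"/boot/config-{platform.release()}"
    try:
        with open(config_path, encoding="utf-8") as f:
            print("userfaultfd supported:", userfaultfd_enabled(f.read()))
    except OSError as exc:
        # The file may be missing or require elevated permissions.
        print(f"Cannot read {config_path}: {exc}")
```

Any line other than an exact CONFIG_USERFAULTFD=y, including a commented-out entry, is treated as "not supported", which matches the conservative reading of the note.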

Quick installation

The following example installs OSS Connector for AI/ML for Python 3.12:

在Linux操作系統(tǒng)或基于Linux操作系統(tǒng)構(gòu)建鏡像所生成容器空間內(nèi),執(zhí)行

pip3.12 install osstorchconnector命令安裝OSS Connector for AI/ML。pip3.12 install osstorchconnector執(zhí)行

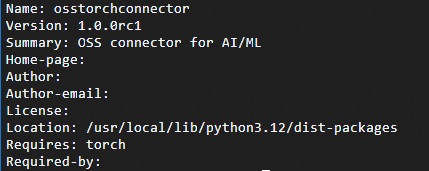

pip3.12 show osstorchconnector查看是否安裝成功。pip3.12 show osstorchconnector當(dāng)返回結(jié)果中顯示osstorchconnector的版本信息時(shí)表示OSS Connector for AI/ML安裝成功。
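The same verification can be done from Python, which is convenient inside a training script or a container health check. This is a minimal sketch that uses the standard library's importlib.metadata. The helper name installed_version is hypothetical, and the script must be run with the same interpreter that pip3.12 installed the package into.

```python
from importlib import metadata
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a package, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    version = installed_version("osstorchconnector")
    if version is None:
        print("osstorchconnector is not installed for this interpreter.")
    else:
        print(f"osstorchconnector {version} is installed.")
```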

Configuration

Create the access credential configuration file.

mkdir -p /root/.alibabacloud && touch /root/.alibabacloud/credentials

Add the access credential configuration and save the file.

Replace <Access-key-id> and <Access-key-secret>, that is, the values of AccessKeyId and AccessKeySecret in the example, with the AccessKey ID and AccessKey secret of a RAM user. For how to create an AccessKey ID and AccessKey secret, see Create an AccessKey. For a description of the configuration items and for how to configure temporary access credentials, see Configure access credentials.

{
    "AccessKeyId": "LTAI************************",
    "AccessKeySecret": "At32************************"
}

Create the OSS Connector configuration file.

mkdir -p /etc/oss-connector/ && touch /etc/oss-connector/config.json

Add the OSS Connector configuration and save the file. For a description of the configuration items, see Configure OSS Connector.

In most cases, the following default configuration is sufficient.

{
    "logLevel": 1,
    "logPath": "/var/log/oss-connector/connector.log",
    "auditPath": "/var/log/oss-connector/audit.log",
    "datasetConfig": {
        "prefetchConcurrency": 24,
        "prefetchWorker": 2
    },
    "checkpointConfig": {
        "prefetchConcurrency": 24,
        "prefetchWorker": 4,
        "uploadConcurrency": 64
    }
}
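When the environment is provisioned by a script, the configuration file can be generated and sanity-checked from Python instead of being edited by hand. The sketch below writes the default configuration from this topic as JSON and reads it back. The helper names write_json and load_and_check are hypothetical, the list of expected keys is only an assumption based on the default configuration shown here, and the demo writes to a temporary directory rather than /etc/oss-connector/.

```python
import json
import os
import tempfile

# Default OSS Connector configuration, copied from this topic.
CONNECTOR_CONFIG = {
    "logLevel": 1,
    "logPath": "/var/log/oss-connector/connector.log",
    "auditPath": "/var/log/oss-connector/audit.log",
    "datasetConfig": {"prefetchConcurrency": 24, "prefetchWorker": 2},
    "checkpointConfig": {
        "prefetchConcurrency": 24,
        "prefetchWorker": 4,
        "uploadConcurrency": 64,
    },
}


def write_json(path: str, data: dict) -> None:
    """Create the parent directory if needed and write data as JSON."""
    os.makedirs(os.path.dirname(path), exist_ok=True)
    with open(path, "w", encoding="utf-8") as f:
        json.dump(data, f, indent=4)


def load_and_check(path: str, expected_keys: list) -> dict:
    """Load a JSON file and raise ValueError if any expected key is missing."""
    with open(path, encoding="utf-8") as f:
        data = json.load(f)
    missing = [key for key in expected_keys if key not in data]
    if missing:
        raise ValueError(f"{path} is missing keys: {missing}")
    return data


if __name__ == "__main__":
    # Use a temporary directory so that the demo needs no root permissions.
    with tempfile.TemporaryDirectory() as root:
        path = os.path.join(root, "oss-connector", "config.json")
        write_json(path, CONNECTOR_CONFIG)
        config = load_and_check(path, ["logLevel", "datasetConfig", "checkpointConfig"])
        print(config["checkpointConfig"])
```

The credential file can be produced the same way with the AccessKeyId and AccessKeySecret keys. Because it holds secrets, restricting its permissions (for example with os.chmod(path, 0o600)) is a sensible precaution.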

Example

The following example uses PyTorch to build a handwritten digit recognition model. The model is trained on an MNIST dataset built with OssMapDataset and uses OssCheckpoint to save and load model checkpoints.

import io
import torch
import torch.nn as nn
import torch.optim as optim
import torchvision.transforms as transforms
from PIL import Image
from torch.utils.data import DataLoader
from osstorchconnector import OssMapDataset
from osstorchconnector import OssCheckpoint

# Define hyperparameters.
EPOCHS = 1
BATCH_SIZE = 64
LEARNING_RATE = 0.001
CHECKPOINT_READ_URI = "oss://you_bucketname/epoch.ckpt"  # OSS URI from which the checkpoint is read.
CHECKPOINT_WRITE_URI = "oss://you_bucketname/epoch.ckpt"  # OSS URI to which the checkpoint is saved.
ENDPOINT = "oss-cn-hangzhou-internal.aliyuncs.com"  # Internal endpoint of the region. To use it, the ECS instance and the OSS instance must be in the same region.
CONFIG_PATH = "/etc/oss-connector/config.json"  # Path of the OSS Connector configuration file.
CRED_PATH = "/root/.alibabacloud/credentials"  # Path of the access credential configuration file.
OSS_URI = "oss://you_bucketname/mninst/"  # Path of the resources in the OSS bucket.

# Create an OssCheckpoint object, used to save checkpoints to OSS and read them from OSS during training.
checkpoint = OssCheckpoint(endpoint=ENDPOINT, cred_path=CRED_PATH, config_path=CONFIG_PATH)

# Define a simple convolutional neural network.
class SimpleCNN(nn.Module):
    def __init__(self):
        super(SimpleCNN, self).__init__()
        self.conv1 = nn.Conv2d(1, 32, kernel_size=3, stride=1, padding=1)
        self.conv2 = nn.Conv2d(32, 64, kernel_size=3, stride=1, padding=1)
        # Output size of a conv layer: (in - kernel + 2 * padding) // stride + 1.
        input_size = 224
        after_conv1 = (input_size - 3 + 2*1) // 1 + 1
        after_pool1 = after_conv1 // 2
        after_conv2 = (after_pool1 - 3 + 2*1) // 1 + 1
        after_pool2 = after_conv2 // 2
        flattened_size = 64 * after_pool2 * after_pool2
        self.fc1 = nn.Linear(flattened_size, 128)
        self.fc2 = nn.Linear(128, 10)

    def forward(self, x):
        x = nn.ReLU()(self.conv1(x))
        x = nn.MaxPool2d(2)(x)
        x = nn.ReLU()(self.conv2(x))
        x = nn.MaxPool2d(2)(x)
        x = x.view(x.size(0), -1)
        x = nn.ReLU()(self.fc1(x))
        x = self.fc2(x)
        return x

# Preprocess the data.
trans = transforms.Compose([
    transforms.Resize(256),
    transforms.CenterCrop(224),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.5], std=[0.5])
])

def transform(obj):
    try:
        # Decode the object bytes as a grayscale image and apply the preprocessing pipeline.
        img = Image.open(io.BytesIO(obj.read())).convert('L')
        val = trans(img)
    except Exception as e:
        raise e
    # Every sample is given the label 0 in this example.
    label = 0
    return val, torch.tensor(label)

# Load the OssMapDataset dataset.
train_dataset = OssMapDataset.from_prefix(OSS_URI, endpoint=ENDPOINT, transform=transform, cred_path=CRED_PATH, config_path=CONFIG_PATH)
train_loader = DataLoader(train_dataset, batch_size=BATCH_SIZE, num_workers=32, prefetch_factor=2, shuffle=True)

# Initialize the model, loss function, and optimizer.
model = SimpleCNN()
criterion = nn.CrossEntropyLoss()
optimizer = optim.Adam(model.parameters(), lr=LEARNING_RATE)

# Train the model.
for epoch in range(EPOCHS):
    for i, (images, labels) in enumerate(train_loader):
        optimizer.zero_grad()
        outputs = model(images)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()
        if (i + 1) % 100 == 0:
            print(f'Epoch [{epoch + 1}/{EPOCHS}], Step [{i + 1}/{len(train_loader)}], Loss: {loss.item():.4f}')

# Save the checkpoint with the OssCheckpoint object.
with checkpoint.writer(CHECKPOINT_WRITE_URI) as writer:
    torch.save(model.state_dict(), writer)
    print("-------------------------")
    print(model.state_dict())

# Read the checkpoint with the OssCheckpoint object.
with checkpoint.reader(CHECKPOINT_READ_URI) as reader:
    state_dict = torch.load(reader)

# Load the model.
model = SimpleCNN()
model.load_state_dict(state_dict)
model.eval()
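The input size of the first fully connected layer in SimpleCNN follows from the standard output-size arithmetic for convolution and pooling layers. The sketch below reproduces that arithmetic in plain Python for the 224 x 224 input produced by the preprocessing step, so the value can be checked without running the model. The helper names conv2d_out, pool_out, and flattened_size are hypothetical.

```python
def conv2d_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a convolution: (size + 2*padding - kernel) // stride + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size: int, kernel: int) -> int:
    """Spatial output size of max pooling with stride equal to the kernel size."""
    return size // kernel


def flattened_size(input_size: int = 224, channels: int = 64) -> int:
    """Number of features entering the first fully connected layer."""
    size = conv2d_out(input_size, kernel=3, stride=1, padding=1)  # conv1 keeps 224
    size = pool_out(size, 2)                                      # 112
    size = conv2d_out(size, kernel=3, stride=1, padding=1)        # conv2 keeps 112
    size = pool_out(size, 2)                                      # 56
    return channels * size * size


if __name__ == "__main__":
    print(flattened_size())  # 64 * 56 * 56 = 200704
```

With kernel_size=3, stride=1, and padding=1, each convolution preserves the spatial size, so only the two pooling layers shrink the image, from 224 to 112 and then to 56.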