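Bidirectional data migration between Apsara File Storage for HDFS and Object Storage Service (OSS)

This topic describes how to migrate data between Apsara File Storage for HDFS and Object Storage Service (OSS). You can migrate data from Apsara File Storage for HDFS to OSS, or from OSS to Apsara File Storage for HDFS.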

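Prerequisites

Apsara File Storage for HDFS is activated, and a file system instance and a mount target are created. For more information, see the quick start guide for Apsara File Storage for HDFS.

A Hadoop cluster is set up, and JDK 1.8 or later is installed on every node in the cluster. Hadoop 2.7.2 or later is recommended. This topic uses Apache Hadoop 2.8.5.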
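The Hadoop version recommendation above can be checked with a small script. The following is a minimal sketch and not part of the product documentation: `version_ge` is a hypothetical helper name, it compares purely numeric dotted versions only, and the commented usage assumes that `hadoop version` prints `Hadoop <version>` on its first line.

```shell
# Hypothetical helper: returns 0 when dotted version $1 is >= $2.
# Only numeric fields are supported (for example 2.8.5, not 2.8.5-SNAPSHOT).
version_ge() {
  local a="$1" b="$2" x y
  while [ -n "$a" ] || [ -n "$b" ]; do
    x="${a%%.*}"
    y="${b%%.*}"
    # Drop the field just read; an exhausted version becomes empty.
    if [ "$a" = "$x" ]; then a=""; else a="${a#*.}"; fi
    if [ "$b" = "$y" ]; then b=""; else b="${b#*.}"; fi
    x="${x:-0}"
    y="${y:-0}"
    if [ "$x" -gt "$y" ]; then return 0; fi
    if [ "$x" -lt "$y" ]; then return 1; fi
  done
  return 0
}

# Example usage (requires Hadoop on the PATH; not executed here):
#   current="$(hadoop version | head -n 1 | awk '{print $2}')"
#   version_ge "$current" 2.7.2 || echo "Hadoop $current is older than 2.7.2"
```

A missing trailing field is treated as 0, so `2.7` compares as older than `2.7.2`.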

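Background information

Alibaba Cloud Apsara File Storage for HDFS is a file storage service for compute resources such as Alibaba Cloud ECS instances and Container Service. It lets you manage and access data in the same way as in the Hadoop Distributed File System (HDFS), and it provides high-performance access to hot data. OSS is a secure, low-cost, and highly reliable cloud storage service for massive amounts of data, and it offers multiple storage classes such as Standard and Archive. By migrating data in both directions between Apsara File Storage for HDFS and OSS, you can tier hot, warm, and cold data appropriately: hot data stays on high-performance storage while overall storage costs remain under control.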

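Step 1: Mount the Apsara File Storage for HDFS instance on the Hadoop cluster

Configure the Apsara File Storage for HDFS instance in the Hadoop cluster. For more information, see the topic on mounting an Apsara File Storage for HDFS file system.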

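Step 2: Deploy JindoSDK on the Hadoop cluster

Download the latest JindoSDK installation package.

This topic uses jindosdk-4.5.0 as an example.

Run the following command to decompress the installation package.

    tar -zxvf ./jindosdk-4.5.0.tar.gz

Run the following command to copy jindo-core-4.5.0.jar and jindo-sdk-4.5.0.jar from the installation package to the Hadoop CLASSPATH.

    cp -v ./jindosdk-4.5.0/lib/jindo-*-4.5.0.jar ${HADOOP_HOME}/share/hadoop/hdfs/lib/

Configure the JindoSDK OSS implementation classes and the AccessKey pair.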

Run the following command to open the core-site.xml configuration file.

    vim ${HADOOP_HOME}/etc/hadoop/core-site.xml

Add the JindoSDK OSS implementation classes to core-site.xml.

Note: Add the following configuration inside the <configuration> tag.

    <property>
        <name>fs.AbstractFileSystem.oss.impl</name>
        <value>com.aliyun.jindodata.oss.OSS</value>
    </property>
    <property>
        <name>fs.oss.impl</name>
        <value>com.aliyun.jindodata.oss.JindoOssFileSystem</value>
    </property>

Add the AccessKey ID, AccessKey secret, and endpoint of the OSS bucket to core-site.xml.

Note: Add the following configuration inside the <configuration> tag.

    <property>
        <name>fs.oss.accessKeyId</name>
        <!-- The AccessKey ID that you use to access the OSS bucket -->
        <value>xxx</value>
    </property>
    <property>
        <name>fs.oss.accessKeySecret</name>
        <!-- The AccessKey secret that corresponds to the AccessKey ID -->
        <value>xxx</value>
    </property>
    <property>
        <name>fs.oss.endpoint</name>
        <!-- In an ECS environment, the internal OSS endpoint is recommended, in the format oss-cn-xxxx-internal.aliyuncs.com -->
        <value>oss-cn-xxxx.aliyuncs.com</value>
    </property>

Use Hadoop Shell to access OSS.

    ${HADOOP_HOME}/bin/hadoop fs -ls oss://<bucket>/<path>

In this command, <bucket> is the name of the OSS bucket and <path> is a file path in that bucket. Replace them with your actual values.

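Step 3: Migrate data

After the Apsara File Storage for HDFS instance is mounted on the Hadoop cluster and the OSS client JindoSDK is installed, you can migrate data by running a Hadoop MapReduce job (DistCp). The following practices show the procedure.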
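Before starting a migration job, it can help to confirm that the JindoSDK pieces from Step 2 are in place on a node. The following is a minimal sketch under stated assumptions: `any_exists` and `check_jindo_setup` are hypothetical helper names, the paths are the ones used in this topic, and the core-site.xml check is a plain text search that does not validate the XML or the credentials.

```shell
# Hypothetical helper: returns 0 if at least one of the given paths exists.
any_exists() {
  local f
  for f in "$@"; do
    if [ -e "$f" ]; then return 0; fi
  done
  return 1
}

# Hypothetical helper: check_jindo_setup <hadoop-home-directory>
# Prints what is missing and returns 1 if anything is.
check_jindo_setup() {
  local home="$1"
  local lib_dir="${home}/share/hadoop/hdfs/lib"
  local core_site="${home}/etc/hadoop/core-site.xml"
  local status=0

  # The two JARs copied in Step 2; the version suffix may differ.
  if ! any_exists "${lib_dir}"/jindo-core-*.jar; then
    echo "missing: jindo-core JAR in ${lib_dir}"
    status=1
  fi
  if ! any_exists "${lib_dir}"/jindo-sdk-*.jar; then
    echo "missing: jindo-sdk JAR in ${lib_dir}"
    status=1
  fi
  # Text search only: confirms that the property name is present.
  if ! grep -q "<name>fs.oss.impl</name>" "${core_site}" 2>/dev/null; then
    echo "missing: fs.oss.impl in ${core_site}"
    status=1
  fi
  return "${status}"
}

# Example usage (not executed here):
#   check_jindo_setup "${HADOOP_HOME}" && echo "JindoSDK setup looks complete"
```

A successful `hadoop fs -ls oss://<bucket>/<path>` remains the more meaningful test, because it also exercises the credentials and the endpoint.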

Practice 1: Migrate data from Apsara File Storage for HDFS to OSS

Run the following command to generate 100 GB of test data on the Apsara File Storage for HDFS instance.

    ${HADOOP_HOME}/bin/hadoop jar \
        ${HADOOP_HOME}/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.8.5.jar \
        randomtextwriter \
        -D mapreduce.randomtextwriter.totalbytes=107374182400 \
        -D mapreduce.randomtextwriter.bytespermap=10737418240 \
        dfs://f-xxxxxxx.cn-beijing.dfs.aliyuncs.com:10290/dfs2oss/data/data_100g/

The key parameters are described below. Replace them with your actual values.

f-xxxxxxx.cn-beijing.dfs.aliyuncs.com: the domain name of the Apsara File Storage for HDFS mount target.
/dfs2oss/data/data_100g: the directory that stores the test data in this topic.
hadoop-mapreduce-examples-2.8.5.jar: the MapReduce examples JAR. The version number in the file name corresponds to the Hadoop version.
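The two byte values passed to randomtextwriter are 100 GiB and 10 GiB written out in bytes. The short sketch below reproduces that arithmetic; `gib_to_bytes` is a hypothetical helper name, and the map task estimate assumes that randomtextwriter divides the total size by the per-map size, which is how the example program is understood to behave.

```shell
# Hypothetical helper: convert GiB to bytes (1 GiB = 1024^3 bytes).
gib_to_bytes() {
  echo $(( $1 * 1024 * 1024 * 1024 ))
}

total_bytes="$(gib_to_bytes 100)"    # value for mapreduce.randomtextwriter.totalbytes
bytes_per_map="$(gib_to_bytes 10)"   # value for mapreduce.randomtextwriter.bytespermap

echo "totalbytes=${total_bytes}"
echo "bytespermap=${bytes_per_map}"
# Assumption: the number of map tasks is totalbytes / bytespermap.
echo "estimated map tasks=$(( total_bytes / bytes_per_map ))"
```

The amount of data actually written can differ slightly from totalbytes, so compare sizes with `hadoop fs -du -s` rather than against this target value.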

View the generated test data.

Command:

    ${HADOOP_HOME}/bin/hadoop fs -du -s dfs://f-xxxxxxx.cn-beijing.dfs.aliyuncs.com:10290/dfs2oss/data/data_100g

Sample output:

    110223430221 dfs://f-xxxxxxx.cn-beijing.dfs.aliyuncs.com:10290/dfs2oss/data/data_100g

Start a Hadoop MapReduce job (DistCp) to migrate the test data to OSS. For more information about DistCp, see the official Hadoop DistCp guide.

    ${HADOOP_HOME}/bin/hadoop distcp \
        dfs://f-xxxxxxx.cn-beijing.dfs.aliyuncs.com:10290/dfs2oss oss://<bucket>/<path>

After the job finishes, output similar to the following indicates that the migration succeeded.

    22/08/10 16:20:54 INFO mapreduce.Job: Job job_1660115355800_0003 completed successfully
    22/08/10 16:20:54 INFO mapreduce.Job: Counters: 38
        File System Counters
            DFS: Number of bytes read=110223438504
            DFS: Number of bytes written=0
            DFS: Number of read operations=114
            DFS: Number of large read operations=0
            DFS: Number of write operations=26
            FILE: Number of bytes read=0
            FILE: Number of bytes written=2119991
            FILE: Number of read operations=0
            FILE: Number of large read operations=0
            FILE: Number of write operations=0
            OSS: Number of bytes read=0
            OSS: Number of bytes written=0
            OSS: Number of read operations=0
            OSS: Number of large read operations=0
            OSS: Number of write operations=0
        Job Counters
            Launched map tasks=13
            Other local map tasks=13
            Total time spent by all maps in occupied slots (ms)=10264552
            Total time spent by all reduces in occupied slots (ms)=0
            Total time spent by all map tasks (ms)=10264552
            Total vcore-milliseconds taken by all map tasks=10264552
            Total megabyte-milliseconds taken by all map tasks=10510901248
        Map-Reduce Framework
            Map input records=14
            Map output records=0
            Input split bytes=1755
            Spilled Records=0
            Failed Shuffles=0
            Merged Map outputs=0
            GC time elapsed (ms)=18918
            CPU time spent (ms)=798190
            Physical memory (bytes) snapshot=4427018240
            Virtual memory (bytes) snapshot=50057256960
            Total committed heap usage (bytes)=2214068224
        File Input Format Counters
            Bytes Read=6528
        File Output Format Counters
            Bytes Written=0
        DistCp Counters
            Bytes Copied=110223430221
            Bytes Expected=110223430221
            Files Copied=14

Check whether the size of the data migrated to OSS matches the size of the source data.

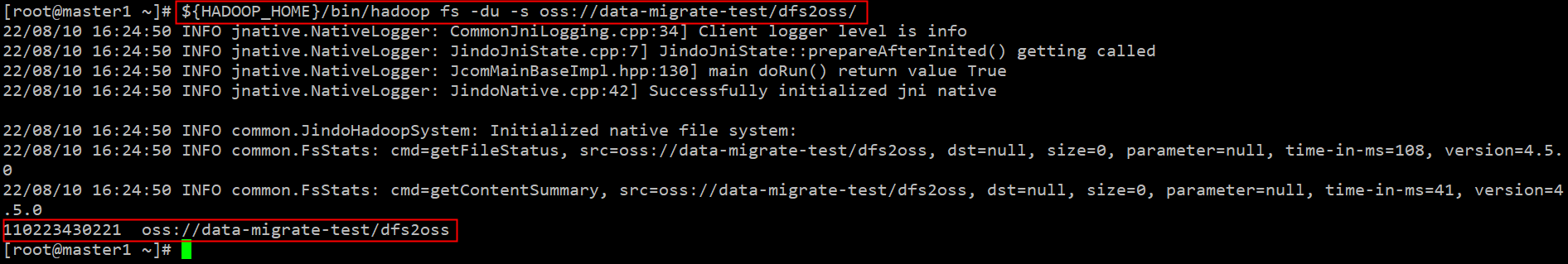

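Command:

    ${HADOOP_HOME}/bin/hadoop fs -du -s oss://<bucket>/<path>

If the migration is complete, the size that the command returns equals the size of the source data (110223430221 bytes in this example).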
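The size comparison can also be scripted instead of read by eye. The following is a minimal sketch: `du_bytes` and `sizes_match` are hypothetical helper names, and the sketch assumes that the byte count is the first field of the `hadoop fs -du -s` output, as in the sample output in this topic. Equal sizes do not prove that the contents are identical.

```shell
# Hypothetical helper: read `hadoop fs -du -s` output on stdin and
# print the byte count, which is the first field of the first line.
du_bytes() {
  awk 'NR == 1 { print $1 }'
}

# Hypothetical helper: sizes_match <source du output> <destination du output>
sizes_match() {
  local src dst
  src="$(printf '%s\n' "$1" | du_bytes)"
  dst="$(printf '%s\n' "$2" | du_bytes)"
  if [ -n "$src" ] && [ "$src" = "$dst" ]; then
    echo "OK: both sides report ${src} bytes"
  else
    echo "MISMATCH: source=${src:-unknown} destination=${dst:-unknown}"
    return 1
  fi
}

# Example usage (requires a configured cluster; not executed here):
#   src_out="$(${HADOOP_HOME}/bin/hadoop fs -du -s dfs://f-xxxxxxx.cn-beijing.dfs.aliyuncs.com:10290/dfs2oss)"
#   dst_out="$(${HADOOP_HOME}/bin/hadoop fs -du -s oss://<bucket>/<path>)"
#   sizes_match "$src_out" "$dst_out"
```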

Practice 2: Migrate data from OSS to Apsara File Storage for HDFS

Generate 100 GB of test data in OSS.

    ${HADOOP_HOME}/bin/hadoop jar \
        ${HADOOP_HOME}/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.8.5.jar \
        randomtextwriter \
        -D mapreduce.randomtextwriter.totalbytes=107374182400 \
        -D mapreduce.randomtextwriter.bytespermap=10737418240 \
        oss://<bucket>/<path>

The key parameters are described below. Replace them with your actual values.

<bucket>/<path>: the OSS bucket directory that stores the test data.
hadoop-mapreduce-examples-2.8.5.jar: the MapReduce examples JAR. The version number in the file name corresponds to the Hadoop version.

View the generated test data.

Command:

    ${HADOOP_HOME}/bin/hadoop fs -du -s oss://<bucket>/<path>

The command returns the total size of the generated data, in bytes.

Start a Hadoop MapReduce job (DistCp) to migrate the test data to Apsara File Storage for HDFS.

    ${HADOOP_HOME}/bin/hadoop distcp \
        oss://<bucket>/<path> \
        dfs://f-xxxxxxx.cn-beijing.dfs.aliyuncs.com:10290/oss2dfs

After the job finishes, output similar to the following indicates that the migration succeeded.

    22/08/10 17:08:17 INFO mapreduce.Job: Job job_1660115355800_0005 completed successfully
    22/08/10 17:08:17 INFO mapreduce.Job: Counters: 38
        File System Counters
            DFS: Number of bytes read=6495
            DFS: Number of bytes written=110223424482
            DFS: Number of read operations=139
            DFS: Number of large read operations=0
            DFS: Number of write operations=47
            FILE: Number of bytes read=0
            FILE: Number of bytes written=1957682
            FILE: Number of read operations=0
            FILE: Number of large read operations=0
            FILE: Number of write operations=0
            OSS: Number of bytes read=0
            OSS: Number of bytes written=0
            OSS: Number of read operations=0
            OSS: Number of large read operations=0
            OSS: Number of write operations=0
        Job Counters
            Launched map tasks=12
            Other local map tasks=12
            Total time spent by all maps in occupied slots (ms)=10088860
            Total time spent by all reduces in occupied slots (ms)=0
            Total time spent by all map tasks (ms)=10088860
            Total vcore-milliseconds taken by all map tasks=10088860
            Total megabyte-milliseconds taken by all map tasks=10330992640
        Map-Reduce Framework
            Map input records=12
            Map output records=0
            Input split bytes=1620
            Spilled Records=0
            Failed Shuffles=0
            Merged Map outputs=0
            GC time elapsed (ms)=21141
            CPU time spent (ms)=780270
            Physical memory (bytes) snapshot=4037222400
            Virtual memory (bytes) snapshot=45695025152
            Total committed heap usage (bytes)=2259156992
        File Input Format Counters
            Bytes Read=4875
        File Output Format Counters
            Bytes Written=0
        DistCp Counters
            Bytes Copied=110223424482
            Bytes Expected=110223424482
            Files Copied=12

Check whether the test data migrated to Apsara File Storage for HDFS matches the source data in OSS.

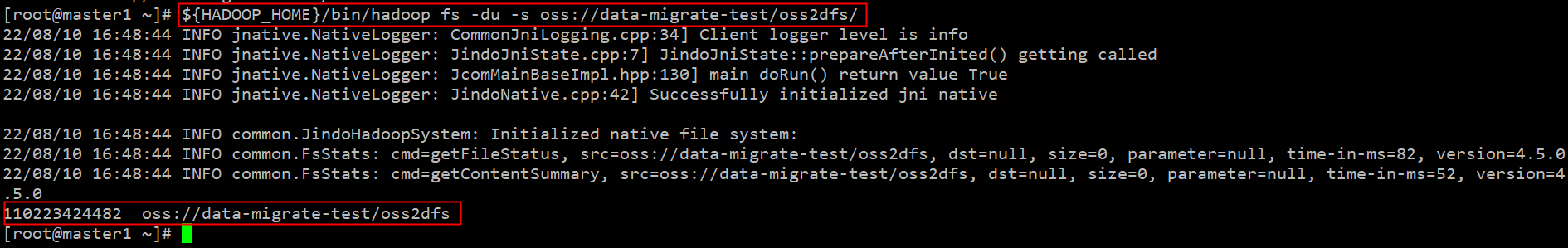

Command:

    ${HADOOP_HOME}/bin/hadoop fs -du -s dfs://f-xxxxxxx.cn-beijing.dfs.aliyuncs.com:10290/oss2dfs

Sample output:

    110223424482 dfs://f-xxxxxxx.cn-beijing.dfs.aliyuncs.com:10290/oss2dfs
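The DistCp counters in the job output offer a second check: a complete copy reports the same value for Bytes Copied and Bytes Expected. The following is a minimal sketch that reads those two counters from saved job output; `counter_value` and `distcp_copied_all` are hypothetical helper names, and the sketch assumes the `name=value` counter format shown in this topic.

```shell
# Hypothetical helper: counter_value <counter name>
# Reads job output on stdin and prints the first value found for the counter.
counter_value() {
  sed -n "s/.*$1=\([0-9][0-9]*\).*/\1/p" | head -n 1
}

# Hypothetical helper: distcp_copied_all <job output text>
# Returns 0 only when both counters are present and equal.
distcp_copied_all() {
  local copied expected
  copied="$(printf '%s\n' "$1" | counter_value 'Bytes Copied')"
  expected="$(printf '%s\n' "$1" | counter_value 'Bytes Expected')"
  [ -n "$copied" ] && [ "$copied" = "$expected" ]
}

# Example usage (requires a configured cluster; not executed here):
#   ${HADOOP_HOME}/bin/hadoop distcp oss://<bucket>/<path> \
#       dfs://f-xxxxxxx.cn-beijing.dfs.aliyuncs.com:10290/oss2dfs 2>&1 | tee distcp.log
#   distcp_copied_all "$(cat distcp.log)" && echo "all expected bytes were copied"
```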

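FAQ

If a file is being written to while it is migrated, is the most recently written data missed?

Hadoop-compatible file systems provide single-writer, multiple-reader concurrency semantics: at any given time, a file can have one writer and multiple readers. Take migration from Apsara File Storage for HDFS to OSS as an example. The migration job opens file F in Apsara File Storage for HDFS, determines the length L of F from the current system state, and migrates L bytes to OSS. If a concurrent writer appends to F during the migration, the length of F grows beyond L, but the migration job is not aware of the newly written data. Therefore, avoid writing to files while they are being migrated.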
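The effect can be illustrated on a local file system. The sketch below is only an analogy and does not involve Hadoop: the hypothetical function `demo_snapshot_copy` fixes a length L when the copy starts, simulates a concurrent writer that appends to the file, and then copies exactly L bytes.

```shell
# Local analogy for the behavior described above; no Hadoop involved.
demo_snapshot_copy() {
  local dir src dst len
  dir="$(mktemp -d)"
  src="${dir}/F"
  dst="${dir}/F.copy"

  printf 'hello' > "$src"                 # F initially holds 5 bytes
  len="$(wc -c < "$src" | tr -d ' ')"     # the copier fixes L when it opens F
  printf 'world!' >> "$src"               # a concurrent writer appends 6 bytes
  head -c "$len" "$src" > "$dst"          # the copier still transfers only L bytes

  echo "L=${len} copied=$(wc -c < "$dst" | tr -d ' ') source_now=$(wc -c < "$src" | tr -d ' ')"
  rm -rf "$dir"
}

demo_snapshot_copy   # prints: L=5 copied=5 source_now=11
```

The copy ends up 6 bytes shorter than the source, which is why writes to a file should stop before that file is migrated.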