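Run Spark jobs on ECI

Running Spark jobs on ECI in a Kubernetes cluster provides elastic scaling, automated deployment, and high availability, which can improve the efficiency and stability of Spark jobs. This topic describes how to install Spark Operator in an ACK Serverless cluster and use ECI to run Spark jobs.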

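Background information

Apache Spark is an open source project widely used in data analytics, typically for big data and machine learning workloads. Starting with Apache Spark 2.3.0, you can run and manage Spark resources on Kubernetes.

Spark Operator is an Operator designed specifically for Spark on Kubernetes. It lets developers submit Spark jobs to a Kubernetes cluster by using CRDs. Spark Operator has the following advantages:

It fills gaps in native Spark support for Kubernetes.

It integrates quickly with storage, monitoring, logging, and other components in the Kubernetes ecosystem.

It supports advanced Kubernetes features such as failure recovery, elastic scaling, and scheduling optimization.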

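Preparations

Create an ACK Serverless cluster.

Create an ACK Serverless cluster in the Container Service console. For more information, see Create an ACK Serverless cluster.

Important: If you need to pull images over the Internet, or if your jobs need Internet access, configure an Internet NAT gateway.

You can use kubectl to manage and access the ACK Serverless cluster in either of the following ways:

To manage the cluster from your local computer, install and configure the kubectl client. For more information, see Connect to a Kubernetes cluster by using kubectl.

You can also use kubectl in CloudShell to manage the cluster. For more information, see Manage Kubernetes clusters by using kubectl in CloudShell.

Create an OSS bucket.

Create an OSS bucket to store test data, test results, and the logs generated during testing. For more information, see Create buckets.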

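Install Spark Operator

Install Spark Operator.

In the left-side navigation pane of the Container Service console, choose Marketplace > App Marketplace.

On the App Catalog tab, find and click ack-spark-operator.

Click Deploy in the upper-right corner.

In the panel that appears, select the target cluster and complete the configuration as prompted.

Create a ServiceAccount, Role, and RoleBinding.

A Spark job needs a ServiceAccount to obtain permission to create Pods, so you must create a ServiceAccount, a Role, and a RoleBinding. The following YAML is an example. Change the namespace of the three resources as needed.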

apiVersion: v1
kind: ServiceAccount
metadata:
  name: spark
  namespace: default
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: default
  name: spark-role
rules:
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["*"]
- apiGroups: [""]
  resources: ["services"]
  verbs: ["*"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: spark-role-binding
  namespace: default
subjects:
- kind: ServiceAccount
  name: spark
  namespace: default
roleRef:
  kind: Role
  name: spark-role
  apiGroup: rbac.authorization.k8s.io
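Because the namespace appears in four places across the three resources, it is easy to change one and miss another. The following is a minimal Python sketch, not part of the original procedure, that generates the same three resources for a given namespace and prints them as a single JSON document. The function names and the "spark-jobs" namespace are hypothetical, and the naming of the Role and RoleBinding simply follows the pattern in the YAML above.

```python
import json


def spark_rbac_manifests(namespace="default", account="spark"):
    """Return the ServiceAccount, Role, and RoleBinding as plain dicts."""
    role_name = f"{account}-role"
    service_account = {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {"name": account, "namespace": namespace},
    }
    role = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": role_name, "namespace": namespace},
        "rules": [
            {"apiGroups": [""], "resources": ["pods"], "verbs": ["*"]},
            {"apiGroups": [""], "resources": ["services"], "verbs": ["*"]},
        ],
    }
    role_binding = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{role_name}-binding", "namespace": namespace},
        # The subject namespace must match the ServiceAccount's namespace.
        "subjects": [
            {"kind": "ServiceAccount", "name": account, "namespace": namespace}
        ],
        "roleRef": {
            "kind": "Role",
            "name": role_name,
            "apiGroup": "rbac.authorization.k8s.io",
        },
    }
    return [service_account, role, role_binding]


def as_kubectl_list(manifests):
    """Wrap the manifests in a v1 List so one JSON document holds all of them."""
    return json.dumps(
        {"apiVersion": "v1", "kind": "List", "items": manifests}, indent=2
    )


if __name__ == "__main__":
    # "spark-jobs" is a placeholder namespace for illustration only.
    print(as_kubectl_list(spark_rbac_manifests("spark-jobs")))
```

If the script is saved under a name of your choice, its output can be piped to kubectl apply -f -, since kubectl accepts JSON as well as YAML.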

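Build the Spark job image

Compile the Spark job into a JAR package, and then use a Dockerfile to package it into an image.

Using the Spark base image provided by Alibaba Cloud Container Service as an example, set the Dockerfile content as follows: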

FROM registry.aliyuncs.com/acs/spark:ack-2.4.5-latest
RUN mkdir -p /opt/spark/jars
# To use OSS (read data from OSS or write event logs to OSS), add the following JAR packages to the image
ADD https://repo1.maven.org/maven2/com/aliyun/odps/hadoop-fs-oss/3.3.8-public/hadoop-fs-oss-3.3.8-public.jar $SPARK_HOME/jars
ADD https://repo1.maven.org/maven2/com/aliyun/oss/aliyun-sdk-oss/3.8.1/aliyun-sdk-oss-3.8.1.jar $SPARK_HOME/jars
ADD https://repo1.maven.org/maven2/org/aspectj/aspectjweaver/1.9.5/aspectjweaver-1.9.5.jar $SPARK_HOME/jars
ADD https://repo1.maven.org/maven2/org/jdom/jdom/1.1.3/jdom-1.1.3.jar $SPARK_HOME/jars
COPY SparkExampleScala-assembly-0.1.jar /opt/spark/jars

Large Spark images take a long time to pull. You can use ImageCache to accelerate image pulls. For more information, see Manage ImageCache and Use ImageCache to accelerate Pod creation.

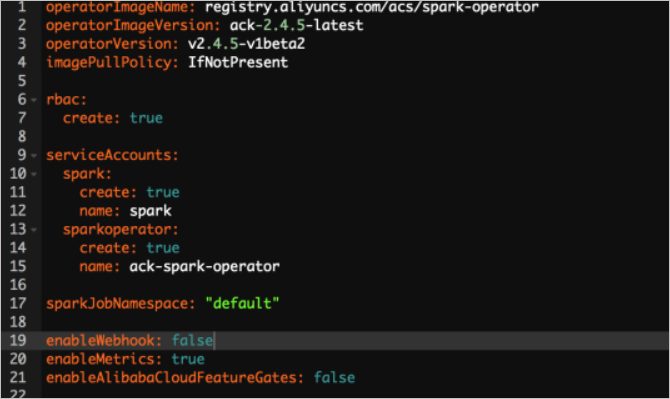

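You can also use the Alibaba Cloud Spark base image. Alibaba Cloud provides a Spark 2.4.5 base image that is optimized for Kubernetes scenarios (scheduling and elasticity) and can significantly improve scheduling speed and startup speed. To enable the optimization, set the Helm chart variable enableAlibabaCloudFeatureGates: true. For even faster startup, set enableWebhook: false.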

Write a job template and submit the job

Create a YAML configuration file for the Spark job and deploy it.

Create a file named spark-pi.yaml.

The following is a typical job template. For more information, see spark-on-k8s-operator.

apiVersion: "sparkoperator.k8s.io/v1beta2"
kind: SparkApplication
metadata:
  name: spark-pi
  namespace: default
spec:
  type: Scala
  mode: cluster
  image: "registry.aliyuncs.com/acs/spark:ack-2.4.5-latest"
  imagePullPolicy: Always
  mainClass: org.apache.spark.examples.SparkPi
  mainApplicationFile: "local:///opt/spark/examples/jars/spark-examples_2.11-2.4.5.jar"
  sparkVersion: "2.4.5"
  restartPolicy:
    type: Never
  driver:
    cores: 2
    coreLimit: "2"
    memory: "3g"
    memoryOverhead: "1g"
    labels:
      version: 2.4.5
    serviceAccount: spark
    annotations:
      k8s.aliyun.com/eci-kube-proxy-enabled: 'true'
      k8s.aliyun.com/eci-auto-imc: "true"
    tolerations:
    - key: "virtual-kubelet.io/provider"
      operator: "Exists"
  executor:
    cores: 2
    instances: 1
    memory: "3g"
    memoryOverhead: "1g"
    labels:
      version: 2.4.5
    annotations:
      k8s.aliyun.com/eci-kube-proxy-enabled: 'true'
      k8s.aliyun.com/eci-auto-imc: "true"
    tolerations:
    - key: "virtual-kubelet.io/provider"
      operator: "Exists"

Deploy the Spark job.

kubectl apply -f spark-pi.yaml

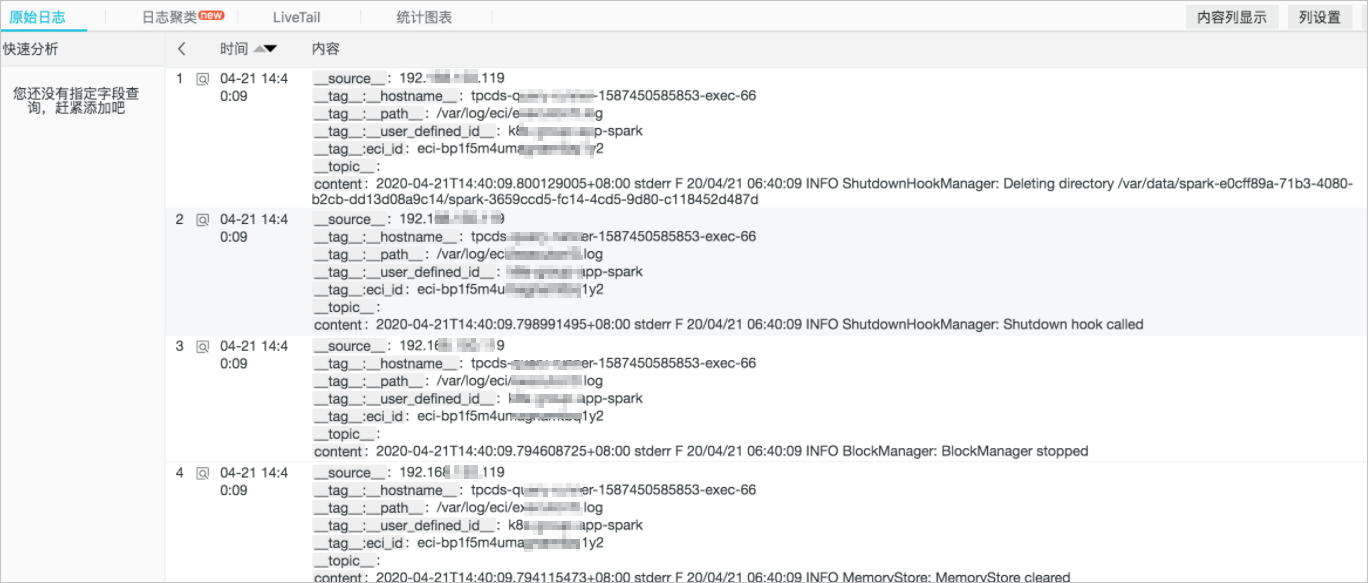
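In the job template, the driver and the executor carry the same two ECI annotations and the same virtual-kubelet toleration. As a rough illustration, the Python sketch below applies those settings to both roles of a SparkApplication represented as a dict, so they cannot drift apart. The helper name with_eci_settings is hypothetical; the annotation keys and the toleration are copied from the template.

```python
import copy

# Annotations and toleration copied from the job template.
ECI_ANNOTATIONS = {
    "k8s.aliyun.com/eci-kube-proxy-enabled": "true",
    "k8s.aliyun.com/eci-auto-imc": "true",
}
ECI_TOLERATIONS = [{"key": "virtual-kubelet.io/provider", "operator": "Exists"}]


def with_eci_settings(app):
    """Return a copy of a SparkApplication dict whose driver and executor
    both carry the ECI annotations and toleration. The input is not modified."""
    result = copy.deepcopy(app)
    for role in ("driver", "executor"):
        pod = result["spec"].setdefault(role, {})
        pod.setdefault("annotations", {}).update(ECI_ANNOTATIONS)
        tolerations = pod.setdefault("tolerations", [])
        for toleration in ECI_TOLERATIONS:
            # Skip tolerations that are already present so the call is idempotent.
            if toleration not in tolerations:
                tolerations.append(dict(toleration))
    return result


if __name__ == "__main__":
    import json

    base = {
        "apiVersion": "sparkoperator.k8s.io/v1beta2",
        "kind": "SparkApplication",
        "metadata": {"name": "spark-pi", "namespace": "default"},
        "spec": {
            "driver": {"cores": 2, "memory": "3g", "serviceAccount": "spark"},
            "executor": {"cores": 2, "instances": 1, "memory": "3g"},
        },
    }
    print(json.dumps(with_eci_settings(base), indent=2))
```

The base dict in the usage example is deliberately abbreviated; a real job still needs the image, mainClass, and other fields shown in the template.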

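Configure log collection

Using Spark standard output logs as an example, you can inject environment variables into the envVars field of the Spark driver and Spark executor to collect logs automatically. For more information, see Customize log collection for ECI.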

envVars:
  aliyun_logs_test-stdout_project: test-k8s-spark
  aliyun_logs_test-stdout_machinegroup: k8s-group-app-spark
  aliyun_logs_test-stdout: stdout

When you submit a job, set the environment variables of the driver and executor as shown above to enable automatic log collection.

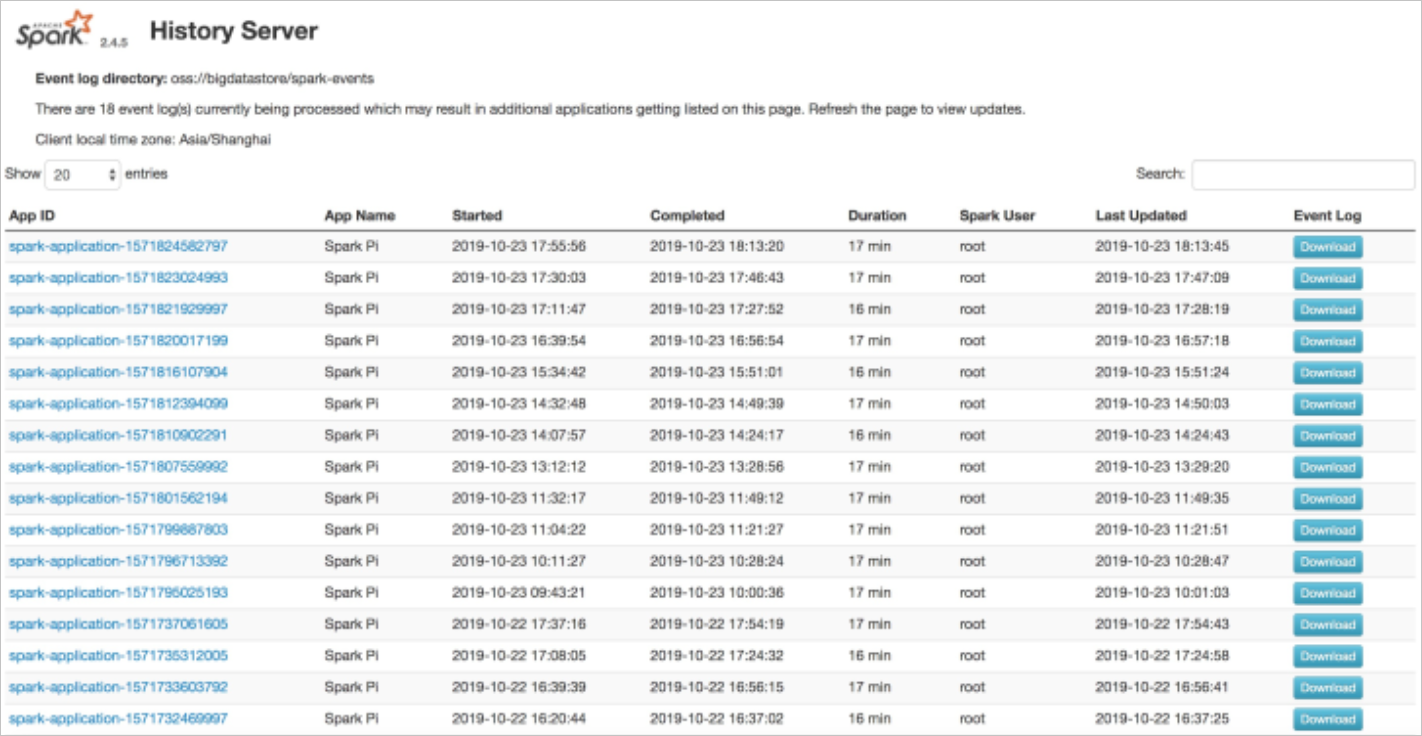
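The three log collection variables share one prefix, aliyun_logs_ followed by a name (test-stdout in the example). The following Python sketch builds the envVars mapping from that name so the three keys stay consistent. The function and parameter names are hypothetical, and treating the middle segment as a freely chosen configuration name is an assumption based on the example, not something this topic states.

```python
def log_env_vars(config_name, project, machine_group, source="stdout"):
    """Build the envVars mapping for log collection.

    config_name is the segment after "aliyun_logs_" (assumed to be a
    user-chosen name); source is what to collect, "stdout" in the example.
    """
    prefix = f"aliyun_logs_{config_name}"
    return {
        f"{prefix}_project": project,
        f"{prefix}_machinegroup": machine_group,
        prefix: source,
    }


if __name__ == "__main__":
    env = log_env_vars("test-stdout", "test-k8s-spark", "k8s-group-app-spark")
    # The same mapping goes into both spec.driver.envVars and spec.executor.envVars.
    for key, value in env.items():
        print(f"{key}: {value}")
```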

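Configure the history server

The history server is used to audit Spark jobs. You can add the sparkConf field to the SparkApplication CRD to write events to OSS, and then have the history server read the events from OSS for display. The following is a configuration example: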

sparkConf:
  "spark.eventLog.enabled": "true"
  "spark.eventLog.dir": "oss://bigdatastore/spark-events"
  "spark.hadoop.fs.oss.impl": "org.apache.hadoop.fs.aliyun.oss.AliyunOSSFileSystem"
  # OSS bucket endpoint, such as oss-cn-beijing.aliyuncs.com
  "spark.hadoop.fs.oss.endpoint": "oss-cn-beijing.aliyuncs.com"
  "spark.hadoop.fs.oss.accessKeySecret": ""
  "spark.hadoop.fs.oss.accessKeyId": ""

Alibaba Cloud also provides a chart for spark-history-server. On the Marketplace > App Marketplace page of the Container Service console, search for ack-spark-history-server and install it. During installation, configure the OSS information in the parameters, for example:

oss:
  enableOSS: true
  # Please input your accessKeyId
  alibabaCloudAccessKeyId: ""
  # Please input your accessKeySecret
  alibabaCloudAccessKeySecret: ""
  # OSS bucket endpoint, such as oss-cn-beijing.aliyuncs.com
  alibabaCloudOSSEndpoint: "oss-cn-beijing.aliyuncs.com"
  # OSS file path, such as oss://bucket-name/path
  eventsDir: "oss://bigdatastore/spark-events"

After the installation is complete, you can find the external endpoint of ack-spark-history-server under Services on the cluster details page. Access the external endpoint to view the archive of historical jobs.
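The sparkConf block of the job and the oss parameters of the history server chart must point to the same event directory and endpoint, or the history server will not see the events the job writes. The Python sketch below derives both from a single set of settings. It is one possible approach under stated assumptions: the function names and the environment variable names OSS_ACCESS_KEY_ID and OSS_ACCESS_KEY_SECRET are hypothetical, chosen only to avoid hard-coding credentials in a file.

```python
import os


def oss_settings(events_dir, endpoint, environ=None):
    """Collect the OSS settings once; credentials come from the environment."""
    environ = os.environ if environ is None else environ
    # Hypothetical variable names; use whatever your environment provides.
    key_id = environ.get("OSS_ACCESS_KEY_ID", "")
    key_secret = environ.get("OSS_ACCESS_KEY_SECRET", "")
    if not key_id or not key_secret:
        raise ValueError("OSS credentials are not set in the environment")
    return {
        "events_dir": events_dir,
        "endpoint": endpoint,
        "key_id": key_id,
        "key_secret": key_secret,
    }


def spark_conf(settings):
    """sparkConf entries for the SparkApplication (keys from the example)."""
    return {
        "spark.eventLog.enabled": "true",
        "spark.eventLog.dir": settings["events_dir"],
        "spark.hadoop.fs.oss.impl": "org.apache.hadoop.fs.aliyun.oss.AliyunOSSFileSystem",
        "spark.hadoop.fs.oss.endpoint": settings["endpoint"],
        "spark.hadoop.fs.oss.accessKeyId": settings["key_id"],
        "spark.hadoop.fs.oss.accessKeySecret": settings["key_secret"],
    }


def history_server_values(settings):
    """Chart parameters for ack-spark-history-server (keys from the example)."""
    return {
        "oss": {
            "enableOSS": True,
            "alibabaCloudAccessKeyId": settings["key_id"],
            "alibabaCloudAccessKeySecret": settings["key_secret"],
            "alibabaCloudOSSEndpoint": settings["endpoint"],
            "eventsDir": settings["events_dir"],
        }
    }
```

Passing a plain dict as environ, instead of relying on os.environ, keeps the helpers easy to exercise without touching real credentials.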

View job results

Check the status of the Pods.

kubectl get pods

Expected output:

NAME                            READY   STATUS    RESTARTS   AGE
spark-pi-1547981232122-driver   1/1     Running   0          12s
spark-pi-1547981232122-exec-1   1/1     Running   0          3s

View the real-time Spark UI.

kubectl port-forward spark-pi-1547981232122-driver 4040:4040

Check the status of the Spark application.

kubectl describe sparkapplication spark-pi

Expected output:

Name:         spark-pi
Namespace:    default
Labels:       <none>
Annotations:  kubectl.kubernetes.io/last-applied-configuration:
                {"apiVersion":"sparkoperator.k8s.io/v1alpha1","kind":"SparkApplication","metadata":{"annotations":{},"name":"spark-pi","namespace":"default"...}
API Version:  sparkoperator.k8s.io/v1alpha1
Kind:         SparkApplication
Metadata:
  Creation Timestamp:  2019-01-20T10:47:08Z
  Generation:          1
  Resource Version:    4923532
  Self Link:           /apis/sparkoperator.k8s.io/v1alpha1/namespaces/default/sparkapplications/spark-pi
  UID:                 bbe7445c-1ca0-11e9-9ad4-062fd7c19a7b
Spec:
  Deps:
  Driver:
    Core Limit:  200m
    Cores:       0.1
    Labels:
      Version:  2.4.0
    Memory:           512m
    Service Account:  spark
    Volume Mounts:
      Mount Path:  /tmp
      Name:        test-volume
  Executor:
    Cores:      1
    Instances:  1
    Labels:
      Version:  2.4.0
    Memory:     512m
    Volume Mounts:
      Mount Path:  /tmp
      Name:        test-volume
  Image:                  gcr.io/spark-operator/spark:v2.4.0
  Image Pull Policy:      Always
  Main Application File:  local:///opt/spark/examples/jars/spark-examples_2.11-2.4.0.jar
  Main Class:             org.apache.spark.examples.SparkPi
  Mode:                   cluster
  Restart Policy:
    Type:  Never
  Type:    Scala
  Volumes:
    Host Path:
      Path:  /tmp
      Type:  Directory
    Name:    test-volume
Status:
  Application State:
    Error Message:
    State:  COMPLETED
  Driver Info:
    Pod Name:             spark-pi-driver
    Web UI Port:          31182
    Web UI Service Name:  spark-pi-ui-svc
  Execution Attempts:  1
  Executor State:
    Spark - Pi - 1547981232122 - Exec - 1:  COMPLETED
  Last Submission Attempt Time:  2019-01-20T10:47:14Z
  Spark Application Id:          spark-application-1547981285779
  Submission Attempts:           1
  Termination Time:              2019-01-20T10:48:56Z
Events:
  Type    Reason                     Age                 From            Message
  ----    ------                     ----                ----            -------
  Normal  SparkApplicationAdded      55m                 spark-operator  SparkApplication spark-pi was added, Enqueuing it for submission
  Normal  SparkApplicationSubmitted  55m                 spark-operator  SparkApplication spark-pi was submitted successfully
  Normal  SparkDriverPending         55m (x2 over 55m)   spark-operator  Driver spark-pi-driver is pending
  Normal  SparkExecutorPending       54m (x3 over 54m)   spark-operator  Executor spark-pi-1547981232122-exec-1 is pending
  Normal  SparkExecutorRunning       53m (x4 over 54m)   spark-operator  Executor spark-pi-1547981232122-exec-1 is running
  Normal  SparkDriverRunning         53m (x12 over 55m)  spark-operator  Driver spark-pi-driver is running
  Normal  SparkExecutorCompleted     53m (x2 over 53m)   spark-operator  Executor spark-pi-1547981232122-exec-1 completed

View the logs to obtain the result.

NAME                            READY   STATUS      RESTARTS   AGE
spark-pi-1547981232122-driver   0/1     Completed   0          1m

When the Spark application has succeeded (State: COMPLETED in the describe output) or the Pod of the Spark driver is in the Completed state, you can view the logs to obtain the result.

kubectl logs spark-pi-1547981232122-driver
Pi is roughly 3.152155760778804
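If you want to script this last check, the text output of the two commands is enough. The Python sketch below parses the table printed by kubectl get pods into a mapping of Pod name to status, and pulls the value out of the "Pi is roughly" line in the driver log. Both helper names are hypothetical, and the parsing assumes the default table layout shown in this topic, with a NAME and a STATUS column and no spaces inside the values of those columns.

```python
import re


def pod_statuses(table_text):
    """Parse `kubectl get pods` table output into {pod name: status}."""
    rows = [line.split() for line in table_text.strip().splitlines() if line.strip()]
    header, body = rows[0], rows[1:]
    name_col = header.index("NAME")
    status_col = header.index("STATUS")
    return {row[name_col]: row[status_col] for row in body}


def extract_pi(log_text):
    """Return the estimate from a 'Pi is roughly ...' log line, or None."""
    match = re.search(r"Pi is roughly ([0-9]+(?:\.[0-9]+)?)", log_text)
    return float(match.group(1)) if match else None


if __name__ == "__main__":
    pods = """
NAME                            READY   STATUS      RESTARTS   AGE
spark-pi-1547981232122-driver   0/1     Completed   0          1m
"""
    statuses = pod_statuses(pods)
    if statuses.get("spark-pi-1547981232122-driver") == "Completed":
        print(extract_pi("Pi is roughly 3.152155760778804"))
```

For anything beyond a quick check, kubectl get pods -o json gives structured output that is more robust to parse than the table.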